A Framework for Validating Explainable AI in Ophthalmic Ultrasound Image Analysis

Abstract

This article presents a comprehensive framework for the development and validation of explainable artificial intelligence (XAI) models for ophthalmic ultrasound image detection. Aimed at researchers and drug development professionals, it addresses the critical need for transparency and trust in AI-driven diagnostics. The content explores the foundational role of ultrasound in ophthalmology, details the creation of hybrid neuro-symbolic and large language model (LLM) frameworks for interpretable predictions, and provides methodologies for troubleshooting dataset bias and optimizing model generalizability. Furthermore, it establishes rigorous validation protocols, including comparative performance analyses against clinical experts and traditional black-box models, offering a clear pathway for the clinical integration and regulatory approval of trustworthy AI tools in eye care.

The Critical Need for Explainable AI in Ophthalmic Imaging

The Imaging-Rich Landscape of Ophthalmology and AI's Transformative Role

Ophthalmology is fundamentally an imaging-rich specialty, relying heavily on modalities like fundus photography, optical coherence tomography (OCT), and ultrasonography to visualize the intricate structures of the eye. The integration of Artificial Intelligence (AI), particularly deep learning, is now transforming this landscape by enabling automated, precise, and rapid analysis of complex image data [1] [2]. This transformation is especially impactful in the domain of ophthalmic ultrasound, a critical tool for evaluating posterior segment diseases such as retinal detachment, vitreous hemorrhage, and tumours, particularly when ocular opacities prevent the use of standard optical imaging techniques [3]. Within this context, a pressing need has emerged for the validation of explainable AI systems that are not only accurate but also transparent and trustworthy for clinical and research applications. This guide objectively compares the performance of recent AI models and frameworks designed for ophthalmic ultrasound image detection, providing detailed experimental data and methodologies to inform researchers, scientists, and drug development professionals.

Comparative Performance of AI Models in Ophthalmic Ultrasound

Recent studies have developed and validated various AI approaches, from specialized deep learning architectures to automated machine learning (AutoML) platforms. The tables below synthesize quantitative performance data from key experiments for direct comparison.

Table 1: Performance Comparison of Automated Machine Learning (AutoML) Models

| Model Type | Primary Task | Key Performance Metric | Score/Result | Additional Findings |

|---|---|---|---|---|

| Single-Label AutoML [3] | Binary Classification (Normal vs. Abnormal) | Area Under the Precision-Recall Curve (AUPRC) | 0.9943 | Statistically significantly outperformed multi-class and multi-label models in all evaluated metrics (p<0.05). |

| Multi-Class Single-Label AutoML [3] | Classification of Single Pathologies | Area Under the Precision-Recall Curve (AUPRC) | 0.9617 | Pathology classification AUPRCs ranged from 0.9277 to 1.000. |

| Multi-Label AutoML [3] | Detection of Single & Multiple Pathologies | Area Under the Precision-Recall Curve (AUPRC) | 0.9650 | Batch prediction accuracies for various conditions ranged from 86.57% to 97.65%. |
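Table 1 reports AUPRC as its headline metric. As a rough illustration of what that number summarizes, the sketch below computes average precision, one common step-wise estimator of the area under the precision-recall curve. How the platform computes its reported AUPRC is not described here, so the function name, the tie handling (scores are assumed distinct), and the toy data are all assumptions.

```python
def average_precision(labels, scores):
    """Step-wise estimate of the area under the precision-recall curve.

    labels: list of 0/1 ground-truth values (1 = abnormal, hypothetical coding).
    scores: list of model confidences, higher = more likely positive.
    Assumes distinct scores; tied scores are ranked in input order.
    """
    n_pos = sum(labels)
    if n_pos == 0:
        raise ValueError("at least one positive label is required")

    # Rank images from most to least confident.
    order = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)

    true_positives = 0
    precision_sum = 0.0
    for rank, idx in enumerate(order, start=1):
        if labels[idx] == 1:
            true_positives += 1
            # Precision at the rank where recall increases.
            precision_sum += true_positives / rank
    return precision_sum / n_pos


if __name__ == "__main__":
    toy_labels = [1, 0, 1, 0]
    toy_scores = [0.9, 0.8, 0.7, 0.1]
    print(round(average_precision(toy_labels, toy_scores), 4))
```

Because AUPRC depends on class prevalence, values from datasets with different normal-to-abnormal ratios are not directly comparable.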

Table 2: Performance of Bespoke Deep Learning and Multimodal Systems

| Model Name / Type | Primary Task | Key Performance Metric | Score/Result | Reported Clinical Use & Limitations |

|---|---|---|---|---|

| OphthUS-GPT (Multimodal) [4] | Automated Report Generation | ROUGE-L / CIDEr | 0.6131 / 0.9818 | >90% of AI-generated reports scored ≥3/5 for correctness by experts. |

| OphthUS-GPT (Multimodal) [4] | Disease Classification | Accuracy for Common Conditions | >90% (Precision >70%) | Offers intelligent Q&A for report explanation, aiding clinical decision support. |

| Inception-ResNet Fusion Model [3] | Classification of Ophthalmic Ultrasound Images | Accuracy | 0.9673 | Requires significant coding expertise and computational resources for development. |

| DPLA-Net (Transformer) [3] | Multi-branch Classification | Mean Accuracy | 0.943 | Represents a modern, bespoke architectural approach. |

| Ensemble AI (ImageQC-net) [5] | Body Part & Contrast Classification | Precision & Recall (External Validation) | 99.8% / 99.8% | Reduced image quality check time by ~49% for analysts, demonstrating workflow efficiency. |

Detailed Experimental Protocols and Methodologies

To ensure the reproducibility of results and provide a clear framework for validation, this section details the experimental protocols from the cited studies.

Protocol 1: Development of AutoML Models for Fundus Disease Detection

This protocol is based on the study that developed and validated three AutoML models on the Google Vertex AI platform [3].

- Objective: To evaluate the efficacy of Automated Machine Learning (AutoML) in detecting multiple fundus diseases from ocular B-scan ultrasound images, comparing its performance to bespoke deep-learning models.

- Dataset Curation:

  - Source: Images were collected from the Eye and ENT (EENT) Hospital of Fudan University.

  - Equipment: All scans were performed using the Aviso Ultrasound Platform A/B with a 10 MHz linear transducer (Quantel Medical).

  - Image Acquisition: Patients were instructed to look in primary, upward, downward, nasal, and temporal directions to capture comprehensive views.

  - Annotations: An ophthalmologist annotated images based on medical history, preliminary reports, and complementary diagnostics (fundus photography, CT, MRI). Discrepancies were resolved by senior specialists. Pathologies included: Normal (N), Chorioretinal Detachment (CD), Posterior Staphyloma (PSS), Retinal Detachment (RD), Retinal Hole (RH), Vitreous Detachment (VD), Tumours (T), and Vitreous Opacities (VO).

  - Data Splits: A training set of 3938 images from 1378 patients was used. Batch prediction tests were performed on a separate set of 336 images from 180 patients.

- Model Development:

  - Platform: Google Vertex AI.

  - Model Variants:

    - Single-Label AutoML: For "Normal" vs. "Abnormality" classification. The "Abnormality" class included images with one or multiple pathologies.

    - Multi-Class Single-Label AutoML: For classifying images into one of seven distinct categories (Normal or one of six specific pathologies). Only images with a single label were used.

    - Multi-Label AutoML: For detecting the presence of multiple co-existing pathologies in a single image. All images with one or more labels were used.

  - Training: Each model underwent three iterations of training. The platform automatically selected the optimal model architecture and hyperparameters based on the dataset.

- Evaluation Metrics: Primary metrics were Area Under the Precision-Recall Curve (AUPRC) and batch prediction accuracy. Statistical significance of performance differences between models was assessed.
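The three model variants in Protocol 1 differ mainly in which images they use and how labels are encoded. The sketch below derives the three dataset views from one annotation table. It is a minimal illustration under stated assumptions: the `annotations` mapping, the image identifiers, and the function name are hypothetical, and the label codes follow the abbreviations listed under Annotations.

```python
def build_dataset_views(annotations):
    """Derive the three label views described in Protocol 1.

    annotations: dict mapping an image id to a set of label codes,
                 where "N" means Normal (hypothetical input format).
    Returns (binary, multiclass, multilabel) dictionaries.
    """
    binary = {}      # every image: "Normal" vs. "Abnormality"
    multiclass = {}  # only images that carry exactly one label
    multilabel = {}  # every image, with all of its labels

    for image_id, labels in annotations.items():
        labels = set(labels)
        if not labels:
            continue  # skip unannotated images

        # One or several pathologies both count as "Abnormality".
        binary[image_id] = "Normal" if labels == {"N"} else "Abnormality"

        # Multi-class training uses single-label images only.
        if len(labels) == 1:
            multiclass[image_id] = next(iter(labels))

        # Multi-label training keeps all co-existing findings.
        multilabel[image_id] = sorted(labels)

    return binary, multiclass, multilabel


if __name__ == "__main__":
    toy = {"img_001": {"N"}, "img_002": {"RD"}, "img_003": {"RD", "VO"}}
    for view in build_dataset_views(toy):
        print(view)
```

Note that the multi-class view is smaller than the other two, which is one reason metrics across the three variants are not measured on identical image sets.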

Protocol 2: Validation of a Multimodal AI (OphthUS-GPT) for Reporting and Q&A

This protocol outlines the methodology for the OphthUS-GPT system, which integrates image analysis with a large language model [4].

- Objective: To develop and validate OphthUS-GPT, a multimodal AI system that automates diagnostic report generation from ophthalmic B-scan ultrasound images and provides an interactive question-answering feature for clinical decision support.

- Study Design & Dataset:

  - Design: Retrospective study.

  - Data Source: Affiliated Eye Hospital of Jiangxi Medical College.

  - Scope: 54,696 images and 9,392 reports from 31,943 patients collected between 2017 and 2024.

- System Architecture:

  - Stage 1 - Report Generation: Utilizes a Bootstrapping Language-Image Pre-training (BLIP) model to analyze ultrasound images and generate preliminary diagnostic reports that meet medical standards.

  - Stage 2 - Intelligent Q&A: Incorporates the DeepSeek-R1-Distill-Llama-8B large language model to provide multi-turn intelligent dialogue, explaining reports to both patients and physicians.

- Evaluation Framework:

  - Report Generation: Assessed using text similarity metrics (ROUGE-L, CIDEr), disease classification metrics (accuracy, sensitivity, specificity, precision, F1-score), and expert ophthalmologist ratings for report correctness and completeness on a 5-point scale.

  - Question-Answering System: Evaluated by ophthalmologists who rated the AI's answers on criteria including accuracy, completeness, potential for harm, and overall satisfaction. The DeepSeek model's performance was compared against other LLMs like GPT-4.
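ROUGE-L, one of the text similarity metrics named above, scores a generated report by the longest common subsequence (LCS) of words it shares with the reference report. The sketch below is a simplified version, assuming whitespace tokenization, lowercasing, and an equally weighted F-measure; the study's exact tokenizer and weighting are not given here, and the example sentences are invented.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    previous = [0] * (len(b) + 1)
    for token_a in a:
        current = [0]
        for j, token_b in enumerate(b, start=1):
            if token_a == token_b:
                current.append(previous[j - 1] + 1)
            else:
                current.append(max(previous[j], current[j - 1]))
        previous = current
    return previous[-1]


def rouge_l_f1(reference, candidate):
    """ROUGE-L F-measure with equal weight on precision and recall (assumption)."""
    ref_tokens = reference.lower().split()
    cand_tokens = candidate.lower().split()
    if not ref_tokens or not cand_tokens:
        return 0.0
    lcs = lcs_length(ref_tokens, cand_tokens)
    if lcs == 0:
        return 0.0
    precision = lcs / len(cand_tokens)
    recall = lcs / len(ref_tokens)
    return 2 * precision * recall / (precision + recall)


if __name__ == "__main__":
    reference = "retinal detachment with vitreous opacities"
    generated = "retinal detachment and vitreous opacities"
    print(round(rouge_l_f1(reference, generated), 3))
```

A score such as this rewards shared word order but says nothing about clinical correctness, which is why the protocol pairs it with expert ratings.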

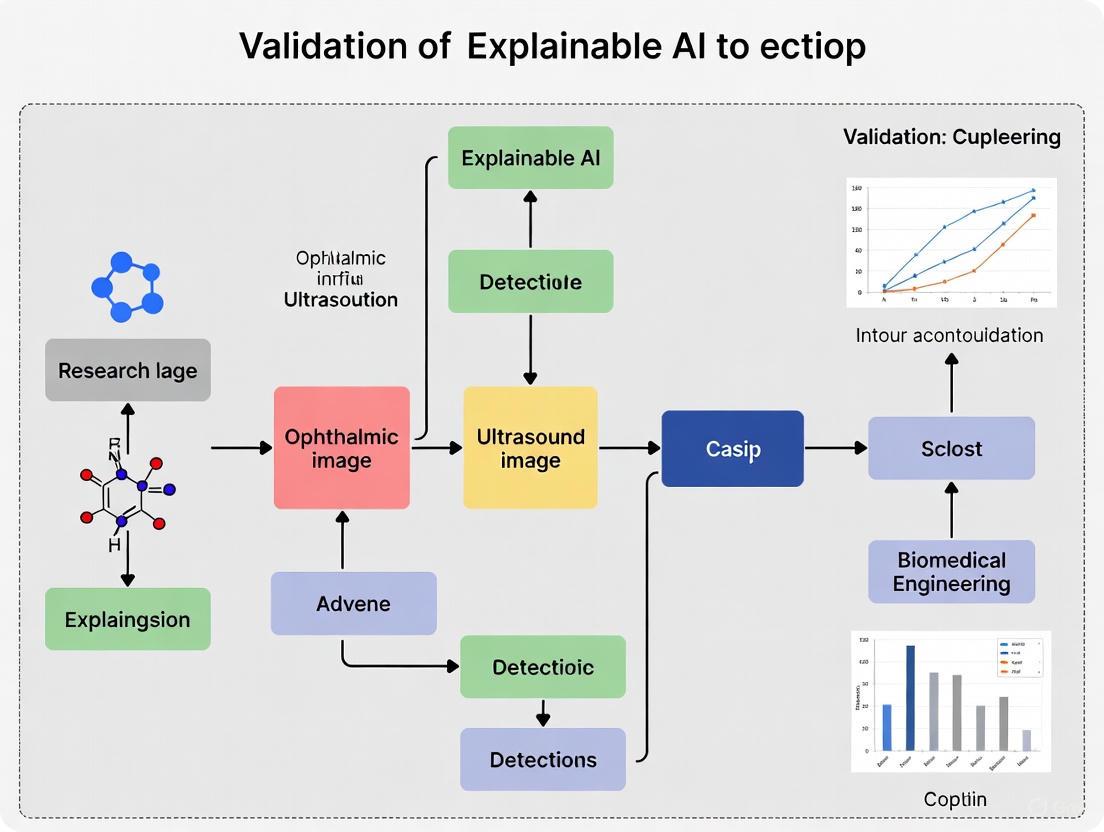

Figure 1: OphthUS-GPT's two-stage workflow for automated reporting and interactive Q&A [4].

Frameworks for Explainable and Fair AI in Medical Imaging

The "black-box" nature of complex AI models is a significant barrier to clinical adoption. Research is increasingly focused on developing explainable AI (XAI) and ensuring algorithmic fairness.

A Novel Explainable AI Framework: One proposed framework for medical image classification integrates statistical, visual, and rule-based methods to provide comprehensive model interpretability [6]. This multi-faceted approach aims to move beyond single-method explanations, offering clinicians a more robust understanding of the AI's decision-making process, which is crucial for validation and trust.

Advancing Equitable AI with Contrastive Learning: A critical challenge in medical AI is the potential for models to perpetuate or amplify biases against underserved populations. A study on chest radiographs proposed a supervised contrastive learning technique to minimize diagnostic bias [7]. The method trains the model by minimizing the distance between image embeddings from the same diagnostic label but different demographic subgroups (e.g., different races), while increasing the distance between embeddings from the same demographic group but different diagnoses. This encourages the model to prioritize clinical features over demographic characteristics, resulting in a reduction of the bias metric (ΔmAUC) from 0.21 to 0.18 for racial subgroups in COVID-19 diagnosis, albeit with a slight trade-off in overall accuracy [7].

Figure 2: Contrastive learning workflow for reducing AI bias in diagnostics [7].
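The pairing rule described above can be sketched in a few lines. The example uses a classic pairwise margin loss as a stand-in; the cited study's exact loss function is not given here, so the margin value, the function names, and the toy embeddings are assumptions for illustration only.

```python
import math


def euclidean(u, v):
    """Euclidean distance between two embedding vectors."""
    return math.sqrt(sum((a - b) ** 2 for a, b in zip(u, v)))


def subgroup_contrastive_loss(embeddings, diagnoses, groups, margin=1.0):
    """Pairwise margin loss over demographically informed pairs.

    Positive pairs: same diagnosis, different demographic subgroup
                    (pulled together).
    Negative pairs: same subgroup, different diagnosis
                    (pushed apart until at least `margin` away).
    All other pairs are ignored in this sketch.
    """
    terms = []
    n = len(embeddings)
    for i in range(n):
        for j in range(i + 1, n):
            dist = euclidean(embeddings[i], embeddings[j])
            same_dx = diagnoses[i] == diagnoses[j]
            same_group = groups[i] == groups[j]
            if same_dx and not same_group:
                terms.append(dist ** 2)
            elif same_group and not same_dx:
                terms.append(max(0.0, margin - dist) ** 2)
    return sum(terms) / len(terms) if terms else 0.0


if __name__ == "__main__":
    emb = [[0.0, 0.0], [1.0, 0.0], [0.5, 0.0]]
    dx = ["covid", "covid", "normal"]
    grp = ["A", "B", "A"]
    print(subgroup_contrastive_loss(emb, dx, grp))
```

In training, a term like this would be minimized alongside or before the usual classification objective, so that the embedding space separates diagnoses rather than demographic subgroups.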

The Scientist's Toolkit: Essential Research Reagents and Materials

For researchers aiming to replicate or build upon the experiments cited in this guide, the following table details key materials and solutions used in the featured studies.

Table 3: Key Research Reagents and Materials for Ophthalmic Ultrasound AI

| Item Name / Category | Specification / Example | Primary Function in Research |

|---|---|---|

| Ultrasound Imaging Platform [3] | Aviso Ultrasound Platform A/B (Quantel Medical) with 10 MHz linear transducer. | Standardized acquisition of ocular B-mode ultrasound images for dataset creation. |

| AutoML Platform [3] | Google Vertex AI Platform. | Enables development of high-performance image classification models by clinicians without extensive coding expertise, automating architecture selection and tuning. |

| Annotation Software & Protocol [3] [5] | Custom protocols using patient medical history, preliminary reports, and multi-modal diagnostics (MRI, CT). | Creation of high-quality ground truth labels by clinical experts, which is essential for supervised model training and validation. |

| Pre-trained Deep Learning Models [4] [5] | BLIP (Bootstrapping Language-Image Pre-training), InceptionResNetV2. | Serves as a foundational backbone for transfer learning, accelerating development and improving performance in tasks like image analysis and classification. |

| Large Language Model (LLM) [4] | DeepSeek-R1-Distill-Llama-8B. | Provides intelligent, interactive question-answering capabilities to explain AI-generated reports and support clinical decision-making. |

| Multimodal Datasets [4] [3] | Curated datasets with tens of thousands of images and paired reports. | Serves as the essential fuel for training and validating complex AI systems, especially multimodal and generative models. |

The objective comparison of AI models for ophthalmic ultrasound reveals a dynamic field where AutoML platforms are achieving diagnostic accuracy comparable to bespoke deep-learning models, thereby democratizing AI development for clinicians [3]. Simultaneously, integrated multimodal systems like OphthUS-GPT are expanding the role of AI from pure image analysis to comprehensive clinical tasks like automated reporting and interactive decision support [4]. The ongoing integration of explainable AI frameworks and bias-mitigation strategies, such as contrastive learning, is critical for validating these technologies [7] [6]. For researchers and drug development professionals, these advancements signal a shift towards more accessible, transparent, and clinically integrated AI tools that promise to enhance the precision and efficiency of ophthalmic imaging research and patient care.

The integration of Artificial Intelligence (AI) into ophthalmic imaging has marked a transformative era in diagnosing and managing eye diseases, with applications expanding into systemic neurodegenerative conditions [8]. However, the "black-box" nature of many complex AI models, where decisions are made without transparent reasoning, remains a significant barrier to their widespread clinical adoption [9] [10]. In high-stakes medical fields, clinicians are justifiably hesitant to trust recommendations without understanding the underlying rationale, as this opacity hampers validation, undermines accountability, and can obscure biases [11] [12]. This challenge is particularly acute for ophthalmic ultrasound and other imaging modalities, where AI promises to enhance early detection of conditions like age-related macular degeneration (AMD) but requires unwavering clinician confidence to be effective [13] [14].

Explainable AI (XAI) has emerged as a critical solution to this problem, aiming to bridge the gap between algorithmic prediction and clinical trust by making AI's decision-making processes transparent and interpretable [9] [10]. The need for XAI is not merely technical but also ethical and regulatory, underscored by frameworks like the European Union's General Data Protection Regulation (GDPR), which is widely read as implying a "right to explanation" for automated decisions [10]. This guide provides a comprehensive comparison of XAI methodologies, focusing on their validation and application within ophthalmic image analysis. It objectively evaluates the performance of various XAI frameworks against traditional black-box models, detailing experimental protocols and presenting quantitative data to equip researchers and clinicians with the tools needed to critically appraise and implement trustworthy diagnostic AI.

Comparative Analysis of XAI Techniques for Medical Imaging

A diverse array of XAI techniques has been developed to illuminate the inner workings of AI models. These methods can be broadly categorized by their approach, output, and integration with underlying models. The table below summarizes the core XAI methods relevant to ophthalmic image analysis, providing a structured comparison to guide methodological selection.

Table 1: Comparison of Key Explainable AI (XAI) Techniques

| XAI Technique | Type | Core Mechanism | Typical Output | Key Advantages | Key Limitations |

|---|---|---|---|---|---|

| Grad-CAM [10] [15] | Visualization, Model-Specific | Uses gradients in the final convolutional layer to weigh activation maps. | Heatmap highlighting important image regions. | Intuitive visual explanations; easy to implement on CNN architectures. | Explanations are coarse, lacking pixel-level granularity [15]. |

| Pixel-Level Interpretability (PLI) [15] | Visualization, Model-Specific | A hybrid convolutional–fuzzy system for fine-grained, pixel-level analysis. | Detailed pixel-level heatmaps. | High localization precision; superior structural similarity and lower error vs. Grad-CAM [15]. | More computationally intensive than class-activation mapping methods. |

| SHAP [10] | Feature Attribution, Model-Agnostic | Based on cooperative game theory to assign each feature an importance value. | Numerical feature importance scores and plots. | Solid theoretical foundation; provides consistent global and local explanations. | Computationally expensive; less intuitive for direct image interpretation. |

| LIME [10] | Feature Attribution, Model-Agnostic | Creates a local, interpretable surrogate model to approximate black-box model predictions. | Highlights super-pixels or features contributing to a single prediction. | Flexible and model-agnostic; useful for explaining individual cases. | Explanations can be unstable; surrogate model may be an unreliable approximation. |

| Prototype-Based [12] | Example-Based, Model-Specific | Compares input images to prototypical examples learned during training. | "This looks like that" explanations using image patches. | More intuitive, case-based reasoning that can mimic clinical workflow. | Requires specialized model architecture; prototypes may be hard to curate. |

| Neuro-Symbolic Hybrid [13] | Symbolic, Integrated | Fuses neural networks with a symbolic knowledge graph encoding domain expertise. | Predictions supported by explicit knowledge-graph rules and natural language narratives. | High transparency and causal reasoning; >85% of predictions supported by knowledge rules [13]. | Complex to develop and requires extensive domain knowledge formalization. |
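To make the Grad-CAM row above concrete, the sketch below applies its core mechanism to plain nested lists: each feature map is weighted by the spatial average of its gradients, the weighted maps are summed, and negative values are clipped. In a real pipeline the activations and gradients would come from hooks on the final convolutional layer of a deep learning framework; here they are hand-written toy values, and the function name is an assumption.

```python
def grad_cam(activations, gradients):
    """Compute a normalized Grad-CAM heatmap.

    activations: K feature maps, each an H x W nested list.
    gradients:   gradients of the class score w.r.t. those maps,
                 same shape as `activations`.
    Returns an H x W heatmap scaled to [0, 1].
    """
    height = len(activations[0])
    width = len(activations[0][0])
    cam = [[0.0] * width for _ in range(height)]

    for feature_map, grad_map in zip(activations, gradients):
        # Channel weight: global average of the gradients.
        alpha = sum(sum(row) for row in grad_map) / (height * width)
        for i in range(height):
            for j in range(width):
                cam[i][j] += alpha * feature_map[i][j]

    # ReLU keeps only regions that push the class score up.
    cam = [[max(0.0, value) for value in row] for row in cam]

    peak = max(max(row) for row in cam)
    if peak > 0:
        cam = [[value / peak for value in row] for row in cam]
    return cam


if __name__ == "__main__":
    acts = [[[1, 0], [0, 0]], [[0, 0], [0, 1]]]
    grads = [[[1, 1], [1, 1]], [[-1, -1], [-1, -1]]]
    print(grad_cam(acts, grads))
```

The heatmap has the resolution of the feature maps, not of the input image, which is the source of the coarseness listed as a limitation in the table.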

Experimental Protocols for XAI Validation in Ophthalmology

Validating an XAI system requires more than just assessing its predictive accuracy; it necessitates a multi-faceted evaluation of its explanations' quality, utility, and impact on human decision-making. Below are detailed protocols for key experiments cited in comparative studies.

Protocol: Evaluating Diagnostic Performance and Explanation Fidelity

This protocol is based on the validation of a hybrid neuro-symbolic framework for predicting AMD treatment outcomes [13].

- Objective: To assess the predictive accuracy of an XAI model and the fidelity of its explanations to established domain knowledge.

- Dataset:

  - Cohort: A pilot cohort of patients (e.g., n=10 surgically managed AMD patients).

  - Data Types: Multimodal ophthalmic imaging (OCT, fundus fluorescein angiography, ocular B-scan ultrasonography) paired with structured clinical documents [13].

- Preprocessing:

  - Imaging: DICOM-based quality control, lesion segmentation, and quantitative biomarker extraction.

  - Text: Semantic annotation and mapping to standardized ontologies.

- Model Architecture:

  - A hybrid neuro-symbolic model where a knowledge graph, encoding causal ophthalmic relationships, constrains and guides a neural network.

  - A fine-tuned Large Language Model (LLM) generates natural-language risk explanations from structured biomarkers and clinical narratives [13].

- Validation Metrics:

  - Predictive Performance: Area Under the Receiver Operating Characteristic Curve (AUROC), Area Under the Precision-Recall Curve (AUPRC), and Brier score.

  - Explainability Metrics: Percentage of predictions supported by high-confidence knowledge-graph rules; accuracy of LLM-generated narratives in citing key biomarkers [13].

- Key Outcomes from Cited Study: The hybrid model achieved an AUROC of 0.94 and a Brier score of 0.07, with >85% of predictions supported by knowledge-graph rules and >90% of narratives accurately citing biomarkers [13].
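Two of the predictive metrics in this protocol are simple enough to compute by hand, which helps when sanity-checking results from a small cohort. The sketch below implements the Brier score (mean squared error of predicted probabilities) and AUROC (the probability that a random positive case is ranked above a random negative one, with ties counted as half). The function names and toy values are assumptions and are unrelated to the cited study's data.

```python
def brier_score(y_true, y_prob):
    """Mean squared difference between predicted probability and outcome."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def auroc(y_true, y_prob):
    """AUROC via pairwise comparison of positive and negative cases."""
    positives = [p for y, p in zip(y_true, y_prob) if y == 1]
    negatives = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not positives or not negatives:
        raise ValueError("both classes must be present")

    wins = 0.0
    for pos in positives:
        for neg in negatives:
            if pos > neg:
                wins += 1.0
            elif pos == neg:
                wins += 0.5  # ties count as half a win
    return wins / (len(positives) * len(negatives))


if __name__ == "__main__":
    outcomes = [1, 0, 1, 0]
    predicted = [0.9, 0.2, 0.6, 0.7]
    print("AUROC:", auroc(outcomes, predicted))
    print("Brier:", round(brier_score(outcomes, predicted), 3))
```

With a cohort as small as ten patients, a single reordered pair shifts AUROC noticeably, so confidence intervals matter as much as the point estimate.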

Protocol: Human-in-the-Loop Evaluation of Trust and Reliance

This protocol assesses the real-world impact of XAI on clinician performance, adapting a study on sonographer interactions with an XAI model for gestational age estimation [12].

- Objective: To measure the effect of model predictions and explanations on clinician accuracy, trust, and appropriate reliance.

- Study Design: A three-stage reader study with the same clinicians evaluating the same set of images in each stage.

  - Stage 1 (Baseline): Clinicians make estimates without AI assistance.

  - Stage 2 (AI Prediction): Clinicians make estimates with access to the model's numerical prediction.

  - Stage 3 (XAI): Clinicians make estimates with access to both the model prediction and its visual explanations (e.g., heatmaps, prototype comparisons) [12].

- Metrics:

  - Performance: Mean Absolute Error (MAE) of clinician estimates compared to ground truth.

  - Reliance: The change in a participant's estimate toward or away from the model's prediction.

  - Appropriate Reliance: A behavior-based metric categorizing each decision as:

    - Appropriate: Reliance when the model was better, or non-reliance when it was worse.

    - Under-Reliance: Not relying on the model when it was better.

    - Over-Reliance: Relying on the model when it was worse [12].

  - Subjective Trust: Post-study questionnaires using Likert scales to gauge perceived usefulness and trust.

- Key Outcomes from Cited Study: Model predictions significantly reduced clinician MAE (from 23.5 to 15.7 days), whereas adding explanations produced only a further, non-significant reduction (to 14.3 days). The effect varied substantially between clinicians, and some performed worse with explanations, which underscores the need for human-focused evaluation [12].
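The reliance categories in this protocol can be expressed as a small decision rule. The sketch below is one possible operationalization, not the cited study's definition: it assumes a clinician "relied" on the model if the assisted estimate ended up closer to the model's prediction than the baseline estimate was, and that the model was "better" if its error was strictly smaller than the clinician's baseline error. All names and numbers are hypothetical.

```python
def mean_absolute_error(estimates, truths):
    """Average absolute difference between estimates and ground truth."""
    return sum(abs(e - t) for e, t in zip(estimates, truths)) / len(truths)


def classify_reliance(baseline, assisted, model, truth):
    """Label one decision as appropriate, under-reliance, or over-reliance.

    baseline: clinician estimate without AI (Stage 1).
    assisted: clinician estimate with AI support (Stage 2 or 3).
    model:    the model's prediction.
    truth:    ground-truth value.
    """
    # Assumption: moving closer to the model's prediction counts as reliance.
    relied = abs(assisted - model) < abs(baseline - model)
    # Assumption: ties in error are treated as "model not better".
    model_better = abs(model - truth) < abs(baseline - truth)

    if relied == model_better:
        return "appropriate"
    return "over-reliance" if relied else "under-reliance"


if __name__ == "__main__":
    # Gestational age in days (toy values).
    print(classify_reliance(baseline=250, assisted=262, model=265, truth=266))
    print(classify_reliance(baseline=250, assisted=250, model=265, truth=266))
    print(classify_reliance(baseline=250, assisted=240, model=230, truth=252))
    print(mean_absolute_error([250, 240], [266, 252]))
```

Counting these labels per clinician, rather than only pooling them, exposes the between-reader variability that aggregate MAE hides.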

Visualization of XAI Workflows and Frameworks

The following diagrams, generated using Graphviz DOT language, illustrate the logical relationships and workflows of key XAI validation and framework integration processes.

[Diagram: XAI Validation Pathway for Clinical Trust]

[Diagram: Hybrid Neuro-Symbolic AI Framework]

Quantitative Performance Comparison of XAI Models

The true test of an XAI system lies in its combined diagnostic performance and explanatory power. The following tables consolidate quantitative data from recent studies to enable direct comparison.

Table 2: Diagnostic Performance of AI/XAI Models in Ophthalmology

| Model / Application | Dataset / Cohort | Key Performance Metrics | Comparative Outcome |

|---|---|---|---|

| Hybrid Neuro-Symbolic (AMD Prognosis) [13] | Pilot cohort (10 patients), multimodal imaging. | AUROC: 0.94, AUPRC: 0.92, Brier Score: 0.07. | Significantly outperformed purely neural and Cox regression baselines (p ≤ 0.01). |

| AI (CNN) for Parkinson's Detection [8] | Retinal OCT images from PD patients and controls. | AUC: 0.918, Sensitivity: 100%, Specificity: ~85%. | Demonstrated high accuracy in detecting retinal changes associated with Parkinson's disease. |

| AI for Alzheimer's Detection [8] | OCT-Angiography analysis of AD patients. | AUC: 0.73 - 0.91. | Successfully identified retinal vascular alterations correlating with cognitive decline. |

| Trilateral Ensemble DL for AD/MCI [8] | OCT imaging in Asian and White populations. | AUC: 0.91 (Asian), 0.84 (White). | Outperformed traditional statistical models (AUC 0.71-0.75). |

Table 3: Explainability and Clinical Utility Metrics

| Model / Technique | Explainability Method | Explainability & Clinical Impact Metrics |

|---|---|---|

| Hybrid Neuro-Symbolic Framework [13] | Knowledge-graph rules + LLM narratives. | >85% of predictions supported by knowledge-graph rules; >90% of LLM narratives accurately cited key biomarkers. |

| Prototype-Based XAI (Gestational Age) [12] | "This-looks-like-that" prototype explanations. | With AI prediction alone: Reduced clinician MAE from 23.5 to 15.7 days. With added explanations: Further non-significant reduction to 14.3 days. High variability in individual clinician response. |

| Pixel-Level Interpretability (PLI) [15] | Pixel-level heatmaps with fuzzy logic. | Outperformed Grad-CAM in Structural Similarity (SSIM), Mean Squared Error (MSE), and computational efficiency on chest X-ray datasets. |

The Scientist's Toolkit: Essential Research Reagents and Materials

Successfully developing and validating XAI systems for ophthalmic imaging requires a suite of specialized tools, datasets, and software. The following table details key components of the research pipeline.

Table 4: Key Research Reagent Solutions for XAI in Ophthalmic Imaging

| Category | Item / Solution | Specification / Function | Example Use Case |

|---|---|---|---|

| Imaging Modalities | Ocular B-scan Ultrasonography | Provides cross-sectional images of the eye; crucial for assessing internal structures, especially when opacity prevents other methods. | Structural assessment for AMD and intraocular conditions [13]. |

| Imaging Modalities | Optical Coherence Tomography (OCT) | High-resolution, cross-sectional imaging of retinal layers; key for quantifying biomarkers like RNFL thickness [8]. | Detection of retinal biomarkers for Alzheimer's and Parkinson's disease [8]. |

| Imaging Modalities | Fundus Photography | Color or fluorescein angiography images of the retina. | Input for AI models screening for diabetic retinopathy and AMD [14]. |

| Data & Annotation | Standardized Ontologies (e.g., SNOMED CT) | Structured vocabularies for semantically annotating clinical text and findings. | Mapping clinical narratives to a consistent format for knowledge graph integration [13]. |

| Data & Annotation | DICOM Standard | Ensures interoperability and quality control of medical images. | Preprocessing and standardizing imaging data from multiple sources [13]. |

| Software & Models | Convolutional Neural Networks (CNNs) | Deep learning architectures (e.g., VGG19) for feature extraction and image classification. | Base model for image analysis in tasks like disease detection [8] [15]. |

| Software & Models | Knowledge Graph Platforms | Tools for building and managing graphs that encode domain knowledge and causal relationships. | Creating the symbolic reasoning component in a neuro-symbolic hybrid system [13]. |

| Software & Models | XAI Libraries (e.g., SHAP, LIME, Captum) | Open-source libraries for generating post-hoc explanations of model predictions. | Providing feature attributions or saliency maps for black-box models [10]. |

Ophthalmic ultrasound, particularly B-scan imaging, represents a critical diagnostic tool for visualizing intraocular structures, especially when optical media opacities like cataracts or vitreous hemorrhage preclude direct examination of the posterior segment. In recent years, artificial intelligence (AI) has emerged as a transformative technology in this domain, offering solutions to longstanding challenges in standardization, interpretation, and accessibility. The integration of AI into ophthalmic ultrasound presents unique opportunities to enhance diagnostic precision, automate reporting, and extend specialist-level expertise to underserved populations. However, this integration also faces significant diagnostic challenges related to data quality, model interpretability, and clinical validation. This guide objectively compares the performance of emerging AI technologies against conventional diagnostic approaches and examines their role within the broader thesis of validating explainable AI for ophthalmic ultrasound image detection research.

Performance Comparison: AI Systems in Ophthalmic Ultrasound

Diagnostic Accuracy and Reporting Capabilities

Table 1: Performance Metrics of AI Systems in Ophthalmic Ultrasound

| AI System / Model | Primary Function | Report Generation Accuracy (ROUGE-L) | Disease Classification Accuracy | Clinical Validation |

|---|---|---|---|---|

| OphthUS-GPT [16] | Automated reporting & Q&A | 0.6131 | >90% (common conditions) | 54,696 images from 31,943 patients |

| CNN Models for Neurodegeneration [8] | PD detection via retinal biomarkers | N/A | AUC: 0.918, Sensitivity: 100%, Specificity: 85% | OCT retinal images from PD patients vs. controls |

| ERNIE Bot-3.5 (Ultrasound Q&A) [17] | Medical examination responses | N/A | Accuracy: 8.33%-80% (varies by question type) | 554 ultrasound examination questions |

| ChatGPT (Ultrasound Q&A) [17] | Medical examination responses | N/A | Lower than ERNIE Bot in many aspects (P<.05) | 554 ultrasound examination questions |

The experimental data suggest that specialized systems like OphthUS-GPT perform better on domain-specific tasks than general-purpose AI models. OphthUS-GPT's integration of BLIP for image analysis and DeepSeek for natural language processing enables comprehensive report generation with strong text-similarity scores (ROUGE-L: 0.6131, CIDEr: 0.9818) [16]. For disease classification, the system achieved accuracy above 90% and precision above 70% for common ophthalmic conditions. In expert assessments, over 90% of generated reports were rated clinically acceptable for correctness (scoring ≥3/5) and 96% were rated acceptable for completeness [16].

Comparative studies on AI chatbots for ultrasound medicine reveal significant performance variations based on model architecture and training data. ERNIE Bot-3.5 outperformed ChatGPT in many aspects (P<.05), particularly in handling specialized medical terminology and complex clinical scenarios [17]. Both models performed worse on English queries than on Chinese inputs, though ERNIE Bot's decline was less pronounced, which suggests that the language and cultural composition of the training data materially affects diagnostic AI performance [17].

Performance Across Question Types and Clinical Topics

Table 2: AI Performance Variations by Ultrasound Question Type and Topic

| Question Category | Subcategory | AI Performance (Accuracy/Acceptability) | Notable Challenges |

|---|---|---|---|

| Question Type | Single-choice (64% of questions) | Highest accuracy (up to 80%) | Limited data provided |

| Question Type | True or false questions | Score highest among objective questions | Limited data provided |

| Question Type | Short answers (12% of questions) | Acceptability: 47.62%-75.36% | Completeness and logical clarity |

| Question Type | Noun explanations (11% of questions) | Acceptability: 47.62%-75.36% | Depth and breadth of explanations |

| Clinical Topic | Basic knowledge | Better performance | Foundational concepts |

| Clinical Topic | Ultrasound methods | Better performance | Technical procedures |

| Clinical Topic | Diseases and etiology | Better performance | Pathological understanding |

| Clinical Topic | Ultrasound signs | Performance decline | Pattern recognition |

| Clinical Topic | Ultrasound diagnosis | Performance decline | Complex decision-making |

The performance analysis reveals that AI systems excel in structured tasks with defined parameters but struggle with complex diagnostic reasoning requiring integrative analysis. For subjective questions including noun explanations and short answers, expert evaluations using Likert scales (1-5 points) demonstrated acceptability rates ranging from 47.62% to 75.36%, with assessments based on completeness, logical clarity, accuracy, and depth of understanding [17]. This performance stratification highlights the current limitations of AI in nuanced clinical interpretation compared to its strengths in information retrieval and pattern recognition.

Experimental Protocols and Methodologies

Multimodal AI System Development and Validation

The OphthUS-GPT study exemplifies a comprehensive approach to developing and validating AI systems for ophthalmic ultrasound. The research employed a retrospective design analyzing 54,696 B-scan ultrasound images and 9,392 corresponding reports collected between 2017 and 2024 from 31,943 patients (mean age 49.14±0.124 years, 50.15% male) [16]. This substantial dataset provided the foundation for training and validating the multimodal AI system.

The experimental protocol involved two distinct assessment components: (1) diagnostic report generation evaluated using text similarity metrics (ROUGE-L, CIDEr), disease classification metrics (accuracy, sensitivity, specificity, precision, F1 score), and blinded ophthalmologist ratings for accuracy and completeness; and (2) question-answering system assessment where ophthalmologists rated AI-generated answers on multiple parameters including accuracy, completeness, potential harm, and overall satisfaction [16]. This rigorous multi-dimensional evaluation framework ensures comprehensive assessment of clinical utility beyond mere technical performance.
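
To make the text-similarity part of this protocol concrete, the following is a minimal sketch of ROUGE-L computed from the longest common subsequence (LCS) of two reports. It assumes simple lowercase whitespace tokenization and an F-measure with `beta=1.0`; the function names are illustrative, and the tokenization and weighting used in the OphthUS-GPT study are not stated in the source.

```python
def lcs_length(a, b):
    """Length of the longest common subsequence of two token lists."""
    prev = [0] * (len(b) + 1)
    for tok_a in a:
        curr = [0]
        for j, tok_b in enumerate(b, start=1):
            if tok_a == tok_b:
                curr.append(prev[j - 1] + 1)
            else:
                curr.append(max(prev[j], curr[j - 1]))
        prev = curr
    return prev[-1]


def rouge_l(reference, candidate, beta=1.0):
    """ROUGE-L F-score between a reference report and a generated report.

    Assumption: whitespace tokenization on lowercased text.
    """
    ref, cand = reference.lower().split(), candidate.lower().split()
    if not ref or not cand:
        return 0.0
    lcs = lcs_length(ref, cand)
    if lcs == 0:
        return 0.0
    recall = lcs / len(ref)
    precision = lcs / len(cand)
    return (1 + beta**2) * precision * recall / (recall + beta**2 * precision)


if __name__ == "__main__":
    reference = "retinal detachment with vitreous hemorrhage"
    generated = "retinal detachment and vitreous opacity"
    print(round(rouge_l(reference, generated), 3))
```

ROUGE-L rewards word order as well as overlap, which is one reason it is usually paired with clinician ratings: two reports can share most tokens and still differ in the diagnosis they state.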

For the Q&A component, the DeepSeek-R1-Distill-Llama-8B model was evaluated against other large language models, including GPT-4o and OpenAI-o1; it performed comparably to these established models and outperformed the other benchmark systems [16]. This suggests that distilled, domain-adapted models can achieve competitive performance with reduced computational requirements, a significant consideration for clinical implementation.

Comparative Diagnostic Accuracy Studies

Recent systematic reviews have synthesized evidence on the diagnostic capabilities of AI systems compared to clinical professionals. A comprehensive analysis of 30 studies involving 19 LLMs and 4,762 cases revealed that the optimal model accuracy for primary diagnosis ranged from 25% to 97.8%, while triage accuracy ranged from 66.5% to 98% [18]. Although these figures demonstrate considerable diagnostic capability, the analysis concluded that AI accuracy still falls short of clinical professionals across most domains.

These studies employed rigorous methodologies including prospective comparisons, cross-sectional analyses, and retrospective cohort designs across multiple medical specialties. In ophthalmology specifically, nine studies compared AI diagnostic performance against ophthalmologists with varying expertise levels, from general ophthalmologists to subspecialists in glaucoma and retina [18]. The risk of bias assessment using the Prediction Model Risk of Bias Assessment Tool (PROBAST) indicated a high risk of bias in the majority of studies, primarily due to the use of known case diagnoses rather than real-world clinical scenarios [18]. This methodological limitation highlights an important challenge in validating AI systems for clinical deployment.

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Materials for Ophthalmic AI Validation

| Research Component | Specific Resource | Function/Application | Example Implementation |

|---|---|---|---|

| Dataset | 54,696 B-scan images & 9,392 reports [16] | Training and validation of multimodal AI systems | OphthUS-GPT development |

| AI Architectures | BLIP (Bootstrapping Language-Image Pre-training) [16] | Visual information extraction and integration | OphthUS-GPT image analysis component |

| | DeepSeek-R1-Distill-Llama-8B [16] | Natural language processing and report generation | OphthUS-GPT Q&A and reporting system |

| | CNN (Convolutional Neural Networks) [8] | Retinal biomarker detection for neurodegenerative diseases | PD detection from OCT images |

| Evaluation Metrics | ROUGE-L, CIDEr [16] | Quantitative assessment of report quality | Evaluating diagnostic report generation |

| | Accuracy, Sensitivity, Specificity, F1 [16] | Standard classification performance metrics | Disease detection and classification |

| | Likert Scale (1-5) Expert Ratings [17] | Subjective quality assessment of AI outputs | Evaluating completeness, logical clarity, accuracy |

| Validation Framework | PROBAST (Prediction Model Risk of Bias Assessment Tool) [18] | Methodological quality assessment of diagnostic studies | Systematic reviews of AI diagnostic accuracy |

| Ethical Guidelines | GDPR, HIPAA, WHO AI Ethics Guidelines [19] | Ensuring privacy, fairness, and transparency | Addressing ethical challenges in ophthalmic AI |

This toolkit represents essential resources for researchers developing and validating AI systems for ophthalmic ultrasound. The substantial dataset used in OphthUS-GPT development highlights the critical importance of comprehensive, well-curated medical data for training robust AI models [16]. The combination of architectural components demonstrates the trend toward multimodal AI systems that integrate computer vision and natural language processing capabilities for comprehensive clinical support.

The evaluation framework incorporates both quantitative metrics and qualitative expert assessments, reflecting the multifaceted nature of clinical validation. The inclusion of ethical guidelines addresses growing concerns around AI implementation in healthcare, particularly regarding privacy, fairness, and transparency—identified as predominant ethical themes in ophthalmic AI research [19].

Explainable AI Validation in Ophthalmic Ultrasound

The validation of explainable AI represents a critical frontier in ophthalmic ultrasound research, addressing the "black box" problem often associated with complex deep learning models. Current bibliometric analyses reveal that ethical concerns in ophthalmic AI primarily focus on privacy (14.5% of publications), fairness and equality (32.7%), and transparency and interpretability (44.8%) [19]. These ethical priorities vary across imaging modalities, with fundus imaging (59.4%) and OCT (30.9%) receiving the most attention in the literature [19].

The movement toward explainable AI in ophthalmology aligns with broader trends in medical AI validation. While most studies (78.3%) address ethical considerations during diagnostic algorithm development, only 11.5% directly target ethical concerns as their primary focus—though this proportion is increasing [19]. This indicates a growing recognition that performance metrics alone are insufficient for clinical adoption; understanding AI decision-making processes is equally crucial for building trust and facilitating appropriate clinical use.

The integration of AI into ophthalmic ultrasound presents a paradigm shift in ocular diagnostics, offering substantial opportunities to enhance diagnostic accuracy, standardize reporting, and improve healthcare accessibility. Current evidence demonstrates that specialized systems like OphthUS-GPT can generate clinically acceptable reports and provide decision support that complements human expertise. However, significant challenges remain in achieving true explainability, ensuring robustness across diverse populations, and navigating ethical considerations surrounding implementation.

The validation of explainable AI for ophthalmic ultrasound image detection requires multidisciplinary collaboration between clinicians, data scientists, and ethicists. Future research should prioritize the development of standardized validation frameworks that incorporate technical performance, clinical utility, and ethical considerations. As AI technologies continue to evolve, their thoughtful integration into ophthalmic practice holds promise for transforming patient care through enhanced diagnostic capabilities while maintaining the essential human elements of clinical judgment and patient-centered care.

The integration of Artificial Intelligence (AI) into medical diagnostics, particularly in specialized fields like ophthalmology, offers transformative potential for patient care. However, this power brings forth significant ethical responsibilities. For AI systems interpreting ophthalmic ultrasound images—where diagnostic decisions can impact vision outcomes—adherence to core ethical principles is not optional but fundamental to clinical validity and patient safety. This analysis examines the triad of transparency, fairness, and data security as interconnected pillars essential for deploying trustworthy AI in ophthalmic research and drug development. The validation of explainable AI (XAI) models for ocular disease detection provides a critical case study for exploring how these principles are operationalized, measured, and balanced against performance metrics to ensure models are not only accurate but also ethically sound.

Deconstructing the Core Ethical Principles

The Transparency Spectrum: From Black Box to Explainable AI

AI transparency involves understanding how AI systems make decisions, why they produce specific results, and what data they use [20]. In a medical context, this provides a window into the inner workings of AI, helping developers and clinicians understand and trust these systems [20]. Transparency is not a binary state but a spectrum encompassing several levels:

- Algorithmic Transparency focuses on the logic, processes, and algorithms used by AI systems, making the internal workings of models understandable to stakeholders [20].

- Interaction Transparency deals with communication between users and AI systems, creating interfaces that clearly convey how the AI operates and what to expect from interactions [20].

- Social Transparency extends beyond technical aspects to address the broader ethical and societal implications of AI deployment, including potential biases, fairness, and privacy concerns [20].

The pursuit of transparency often centers on developing Explainable AI (XAI), which provides easy-to-understand explanations for its decisions and actions [20]. This stands in stark contrast to "black box" systems, where models are so complex that they provide results without clearly explaining how they were achieved, leading to a lack of trust [20]. In medical applications like ophthalmic ultrasound detection, explainability is crucial for clinical adoption, as practitioners must understand the rationale behind a diagnosis before acting upon it.

Fairness: From Theoretical Concepts to Quantifiable Metrics

Fairness in AI ensures that models do not unintentionally harm certain groups and work equitably for everyone [21]. AI bias occurs when models make unfair decisions based on biased data or flawed algorithms, manifesting as racial, age, socio-economic, or gender discrimination [21]. This bias can infiltrate AI systems during various development stages, including unrepresentative training data, amplified historical biases in the data, or algorithms focused too narrowly on specific outcomes without considering fairness [21].

The conceptual framework for understanding fairness encompasses three complementary perspectives:

- Equality treats everyone the same, using identical criteria for all individuals regardless of background [21].

- Equity recognizes that different people have different needs, aiming to level the playing field by providing tailored support where needed [21].

- Justice examines both how AI is created (procedural justice) and how its outcomes are distributed (distributive justice) [21].

In practice, fairness is evaluated through specific metrics that provide quantifiable measures of potential bias, which will be explored in the experimental validation section.

Data Security: The Foundation of Trustworthy AI

Data security in AI systems involves protecting sensitive information throughout the model lifecycle—from training to deployment. This is particularly critical in healthcare applications handling protected health information (PHI). Security challenges include ensuring patient data privacy while maintaining necessary transparency, protecting against AI supply chain attacks when using open-source models and data, and identifying model vulnerabilities that could be exploited maliciously [22] [20] [23].

A comprehensive security framework for medical AI must address multiple failure categories:

- Abuse Failures: Toxicity, bias, hate speech, violence, and malicious code generation [23].

- Privacy Failures: Personally Identifiable Information (PII) leakage, data loss, and model information leakage [23].

- Integrity Failures: Factual inconsistency, hallucination, and off-topic responses [23].

- Availability Failures: Denial of service and increased computational cost [23].

Experimental Validation: Measuring Ethical Compliance in Ophthalmic AI

Case Study: The DPLA-Net for Ocular Disease Detection

The Dual-Path Lesion Attention Network (DPLA-Net) provides an exemplary case study for examining ethical principle implementation in ophthalmic AI. This deep learning system was designed for screening intraocular tumor (IOT), retinal detachment (RD), vitreous hemorrhage (VH), and posterior scleral staphyloma (PSS) using ocular B-scan ultrasound images [24].

Methodology and Experimental Protocol:

- Data Collection: The multi-center study compiled 6,054 ultrasound images from five clinically confirmed categories (IOT, RD, VH, PSS, and normal eyes) [24].

- Data Partitioning: Images were divided into training, validation, and test sets in a ratio of 7:1:2 [24].

- Preprocessing: Irrelevant features in raw ultrasound images (patient information, device parameters) were removed, and images were center-cropped to 224×224 pixels [24].

- Data Augmentation: To enhance model robustness and address potential biases, researchers employed flip, rotation, affine transformation, and Contrast Limited Adaptive Histogram Equalization (CLAHE) [24].

- Model Architecture: DPLA-Net implemented a dual-path approach with (1) a macro path extracting semantic features and producing coarse predictions, and (2) a micro path utilizing lesion attention maps to focus on suspicious regions for fine diagnosis [24].

- Validation: Performance was evaluated through an independent test set of 1,296 images and compared against diagnoses from six ophthalmologists (two senior, four junior) [24].
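
The partitioning and cropping steps in this protocol can be sketched as follows. This is an illustrative sketch only: the function names, the fixed shuffle seed, and the nested-list image representation are assumptions, and the source does not say whether images were split per image or per patient (splitting by patient is a common precaution against leakage between sets).

```python
import random


def split_7_1_2(items, seed=0):
    """Shuffle items and split them into train/validation/test at a 7:1:2 ratio."""
    items = list(items)
    random.Random(seed).shuffle(items)  # assumed seed, for reproducibility only
    n = len(items)
    n_train = n * 7 // 10  # integer arithmetic avoids float rounding surprises
    n_val = n // 10
    train = items[:n_train]
    val = items[n_train:n_train + n_val]
    test = items[n_train + n_val:]  # remainder, roughly 20%
    return train, val, test


def center_crop(image, size=224):
    """Crop a size x size window from the centre of a 2-D image (list of rows)."""
    height, width = len(image), len(image[0])
    if height < size or width < size:
        raise ValueError("image is smaller than the crop size")
    top = (height - size) // 2
    left = (width - size) // 2
    return [row[left:left + size] for row in image[top:top + size]]


if __name__ == "__main__":
    train, val, test = split_7_1_2(range(6054))
    print(len(train), len(val), len(test))
    image = [[0] * 300 for _ in range(256)]  # placeholder image, not real data
    cropped = center_crop(image)
    print(len(cropped), len(cropped[0]))
```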

Table 1: Performance Metrics of DPLA-Net for Ocular Disease Detection

| Disease Category | Area Under Curve (AUC) | Sensitivity | Specificity |

|---|---|---|---|

| Intraocular Tumor (IOT) | 0.988 | Not Reported | Not Reported |

| Retinal Detachment (RD) | 0.997 | Not Reported | Not Reported |

| Posterior Scleral Staphyloma (PSS) | 0.994 | Not Reported | Not Reported |

| Vitreous Hemorrhage (VH) | 0.988 | Not Reported | Not Reported |

| Normal Eyes | 0.993 | Not Reported | Not Reported |

| Overall System | 0.943 (Accuracy) | 99.7% | 94.5% |

Table 2: Clinical Utility Assessment of DPLA-Net Assistance

| Clinician Group | Accuracy Without AI | Accuracy With AI | Time Per Image (Seconds) |

|---|---|---|---|

| Junior Ophthalmologists (n=4) | 0.696 | 0.919 | 16.84±2.34 without AI; 10.09±1.79 with AI |

| Senior Ophthalmologists (n=2) | Not Reported | Not Reported | Not Reported |

The study demonstrated that DPLA-Net not only achieved high diagnostic accuracy but also significantly improved the efficiency and accuracy of junior ophthalmologists, reducing interpretation time from 16.84±2.34 seconds to 10.09±1.79 seconds per image [24].

Quantifying Fairness: Metrics and Methodologies

To ensure equitable performance across patient demographics, researchers must employ specific fairness metrics during model validation:

Table 3: Essential Fairness Metrics for Medical AI Validation

| Metric | Formula | Use Case | Limitations |

|---|---|---|---|

| Statistical Parity/Demographic Parity | P(Outcome=1∣Group=A) = P(Outcome=1∣Group=B) | Hiring algorithms, loan approval systems | May not account for differences in group qualifications [21] |

| Equal Opportunity | P(Outcome=1∣Qualified=1,Group=A) = P(Outcome=1∣Qualified=1,Group=B) | Educational admission, job promotions | Requires accurate measurement of qualification [21] |

| Equality of Odds | P(Outcome=1∣Actual=0,Group=A) = P(Outcome=1∣Actual=0,Group=B) AND P(Outcome=1∣Actual=1,Group=A) = P(Outcome=1∣Actual=1,Group=B) | Criminal justice, medical diagnosis | Difficult to achieve in practice; may conflict with accuracy [21] |

| Predictive Parity | P(Actual=1∣Outcome=1,Group=A) = P(Actual=1∣Outcome=1,Group=B) | Loan default prediction, healthcare treatment | May not address underlying data distribution disparities [21] |

| Treatment Equality | Ratio of FPR/FNR balanced across groups | Predictive policing, fraud detection | Complex to calculate and interpret [21] |

For ophthalmic AI applications, these metrics should be calculated across relevant demographic groups (age, gender, ethnicity) and clinical characteristics to identify potential disparities in diagnostic performance.
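
As a rough illustration of how the parity definitions in Table 3 translate into a subgroup audit, the sketch below computes per-group selection rate, true-positive rate, and false-positive rate for binary predictions, then reports the largest between-group gaps. The function names and the toy labels are assumptions for illustration; they are not data from any cited study, and libraries such as Fairlearn or AIF360 provide maintained equivalents.

```python
def group_rates(y_true, y_pred, groups):
    """Per-group selection rate, true-positive rate, and false-positive rate."""
    stats = {}
    for g in sorted(set(groups)):
        idx = [i for i, gi in enumerate(groups) if gi == g]
        pos = [i for i in idx if y_true[i] == 1]
        neg = [i for i in idx if y_true[i] == 0]
        stats[g] = {
            "selection_rate": sum(y_pred[i] for i in idx) / len(idx),
            "tpr": sum(y_pred[i] for i in pos) / len(pos) if pos else float("nan"),
            "fpr": sum(y_pred[i] for i in neg) / len(neg) if neg else float("nan"),
        }
    return stats


def fairness_gaps(stats):
    """Largest between-group difference for each criterion (0.0 means parity)."""
    def gap(key):
        values = [s[key] for s in stats.values()]
        return max(values) - min(values)

    return {
        "demographic_parity_diff": gap("selection_rate"),
        "equal_opportunity_diff": gap("tpr"),
        # Equality of odds needs both TPR and FPR to match across groups.
        "equalized_odds_diff": max(gap("tpr"), gap("fpr")),
    }


if __name__ == "__main__":
    # Toy labels: 1 = disease present / flagged, 0 = absent / not flagged.
    y_true = [1, 1, 0, 0, 1, 1, 0, 0]
    y_pred = [1, 1, 0, 0, 1, 0, 1, 0]
    groups = ["A", "A", "A", "A", "B", "B", "B", "B"]
    print(fairness_gaps(group_rates(y_true, y_pred, groups)))
```

In this toy example both groups are flagged at the same rate, so demographic parity holds, yet group B has a lower true-positive rate and a higher false-positive rate. This is why a single fairness metric is rarely sufficient for a diagnostic model.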

Transparency and Explainability Methodologies

The DPLA-Net study incorporated explainability through lesion attention maps that highlighted regions of interest in ultrasound images, similar to heatmaps used in other medical AI systems [24] [25]. This approach provides visual explanations for model decisions, allowing clinicians to verify that the AI is focusing on clinically relevant areas.
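
One model-agnostic way to produce a comparable heatmap is occlusion sensitivity: mask one patch of the image at a time and record how much the model's score drops. The sketch below is not DPLA-Net's lesion attention mechanism; it is a swapped-in post-hoc technique. The `score_fn` callable, the patch size, and the toy scoring function are all assumptions for illustration.

```python
def occlusion_saliency(image, score_fn, patch=2, fill=0.0):
    """Score drop when each patch is masked; a larger drop marks a more influential region.

    image:    2-D list of pixel intensities.
    score_fn: hypothetical callable mapping an image to a scalar class score.
    """
    height, width = len(image), len(image[0])
    baseline = score_fn(image)
    saliency = [[0.0] * width for _ in range(height)]
    for top in range(0, height, patch):
        for left in range(0, width, patch):
            occluded = [row[:] for row in image]  # copy so the input is untouched
            rows = range(top, min(top + patch, height))
            cols = range(left, min(left + patch, width))
            for r in rows:
                for c in cols:
                    occluded[r][c] = fill
            drop = baseline - score_fn(occluded)
            for r in rows:
                for c in cols:
                    saliency[r][c] = drop
    return saliency


def toy_score(image):
    """Stand-in model: mean intensity of the top-left 2x2 region."""
    return sum(image[r][c] for r in range(2) for c in range(2)) / 4


if __name__ == "__main__":
    image = [[1.0] * 4 for _ in range(4)]
    for row in occlusion_saliency(image, toy_score):
        print(row)
```

With a real classifier, `score_fn` would wrap the model's forward pass for the class of interest, and the resulting map could be overlaid on the B-scan so that a clinician can check whether the highlighted region matches the lesion.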

Additional XAI techniques suitable for ophthalmic AI include:

- Saliency Maps: Visualizing which areas of an input image most influenced the model's decision

- Feature Importance Analysis: Identifying which input features contribute most to predictions

- Counterfactual Explanations: Showing how minimal changes to input would alter the model's output

- Confidence Calibration: Ensuring the model's confidence scores accurately reflect likelihood of correctness
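
Of the techniques listed above, confidence calibration is the easiest to quantify. A common summary is the expected calibration error (ECE), which bins predictions by confidence and averages the gap between confidence and observed accuracy in each bin. The sketch below assumes equal-width bins and top-class confidences in the range 0 to 1; the function name and bin count are illustrative choices, not values from the cited studies.

```python
def expected_calibration_error(confidences, correct, n_bins=10):
    """Weighted mean of |average confidence - accuracy| over equal-width bins.

    confidences: predicted probability of the chosen class, each in [0, 1].
    correct:     1 if the prediction was right, 0 otherwise.
    """
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 goes in the last bin
        bins[index].append((conf, ok))
    total = len(confidences)
    ece = 0.0
    for members in bins:
        if not members:
            continue
        avg_conf = sum(c for c, _ in members) / len(members)
        accuracy = sum(ok for _, ok in members) / len(members)
        ece += len(members) / total * abs(avg_conf - accuracy)
    return ece


if __name__ == "__main__":
    # Toy case: the model claims 90% confidence but is right 3 times out of 4.
    print(expected_calibration_error([0.9, 0.9, 0.9, 0.9], [1, 1, 1, 0]))
```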

Implementation Framework: Operationalizing Ethics in AI Development

The Research Reagent Solutions Toolkit

Table 4: Essential Tools for Ethical AI Development in Medical Imaging

| Tool/Category | Specific Examples | Function in Ethical AI Development |

|---|---|---|

| Fairness Metric Libraries | Fairlearn (Microsoft), AIF360 (IBM), Fairness Indicators (Google) | Provide standardized metrics and algorithms to detect, quantify, and mitigate bias in models [21] |

| Model Validation Platforms | Galileo, Scikit-learn, TensorFlow Model Analysis | Offer comprehensive validation workflows to detect overfitting, measure performance, and ensure generalization [26] |

| Security Validation Tools | AI Validation (Cisco), AI Validation (Robust Intelligence) | Automatically test for security vulnerabilities, privacy failures, and model integrity [22] [23] |

| Explainability Frameworks | LIME, SHAP, Captum | Generate post-hoc explanations for model predictions to enhance transparency [20] |

| Data Annotation Platforms | Labelbox, Scale AI, Prodigy | Enable creation of diverse, accurately labeled datasets with documentation of labeling protocols |

Workflow for Ethical AI Model Development

[Figure: Workflow for developing ethical AI models in ophthalmic imaging, integrating transparency, fairness, and security considerations throughout the development lifecycle.]

Regulatory Compliance and Standards

The ethical development of medical AI must align with emerging regulatory frameworks and standards:

- EU AI Act: Classifies medical AI as high-risk, requiring conformity assessments, risk mitigation systems, quality management, and transparency obligations [20] [27].

- Transparency in Frontier Artificial Intelligence Act (TFAIA): Mandates comprehensive disclosures for large foundation models, including training data, capabilities, safety practices, and risk assessments [27].

- General Data Protection Regulation (GDPR): Includes provisions for data protection, privacy, consent, and transparency, particularly relevant for handling patient data [20].

- Health Insurance Portability and Accountability Act (HIPAA): Sets standards for protecting sensitive patient data in the United States.

Compliance with these frameworks necessitates documentation of model limitations, performance characteristics across subgroups, data provenance, and ongoing monitoring protocols.

Comparative Analysis of AI Models in Medical Imaging

Performance-Bias Tradeoffs in Model Selection

When selecting AI models for medical applications, researchers must balance raw performance against ethical considerations.

[Figure: Decision framework for evaluating the balance between performance and ethical considerations.]

Benchmarking Ophthalmic AI Performance

Table 5: Comparative Performance of Medical AI Systems in Ophthalmology

| AI System | Modality | Target Conditions | Reported AUC | Explainability Features | Fairness Validation |

|---|---|---|---|---|---|

| DPLA-Net [24] | B-scan Ultrasound | IOT, RD, VH, PSS | 0.988-0.997 (overall accuracy 0.943) | Lesion attention maps, dual-path architecture | Multi-center data, not fully detailed |

| Thyroid Eye Disease XDL [25] | Facial Images | Thyroid Eye Disease | 0.989-0.997 | Heatmaps highlighting periocular regions | Not specified |

| Typical Screening AI | Fundus Photography | Diabetic Retinopathy | 0.930-0.980 | Saliency maps, feature importance | Varies by implementation |

The validation of explainable AI for ophthalmic ultrasound image detection represents a microcosm of broader challenges in medical AI. As demonstrated through the DPLA-Net case study and supporting frameworks, prioritizing transparency, fairness, and data security requires methodical integration of ethical considerations throughout the AI development lifecycle—not as an afterthought but as foundational requirements.

For researchers, scientists, and drug development professionals, this approach necessitates:

- Implementing comprehensive fairness assessments using standardized metrics across relevant demographic and clinical subgroups

- Building explainability directly into model architectures rather than relying solely on post-hoc interpretations

- Establishing robust data security protocols that protect patient privacy while enabling appropriate transparency

- Maintaining detailed documentation of model limitations, performance characteristics, and development processes

- Engaging in continuous monitoring and validation to detect performance degradation or emerging biases

The future of trustworthy AI in ophthalmology and beyond depends on this multidisciplinary approach that harmonizes technical excellence with ethical rigor. By adopting the frameworks, metrics, and methodologies outlined here, the research community can advance AI systems that are not only diagnostically accurate but also transparent, equitable, and secure—thereby fulfilling the promise of AI to enhance patient care without compromising ethical standards.

Building Transparent AI: Architectures and Workflows for Ophthalmic Ultrasound

The integration of mechanistic knowledge with data-driven learning represents a frontier in developing trustworthy artificial intelligence for high-stakes domains like medical imaging. Hybrid Neuro-Symbolic AI architectures address this integration by combining the pattern recognition strengths of neural networks with the transparent reasoning capabilities of symbolic AI [28]. This synthesis aims to overcome the limitations of purely neural approaches—their "black-box" nature and lack of explainability—and purely symbolic systems—their brittleness and inability to learn from raw data [29].

In ophthalmic diagnostics, particularly for complex modalities like ultrasound imaging, this hybrid approach offers a promising path toward clinically adoptable AI systems. By encoding domain knowledge about anatomical structures, disease progression, and physiological relationships into symbolic frameworks, while leveraging neural networks for perceptual tasks like feature extraction from images, neuro-symbolic systems can provide both high accuracy and transparent reasoning [13]. This dual capability is particularly valuable for validating AI systems for ophthalmic ultrasound detection, where clinicians require not just predictions but evidence-based explanations to trust and effectively utilize algorithmic outputs [9].

Comparative Performance Analysis of Neuro-Symbolic Architectures

Quantitative Performance Metrics Across Applications

Table 1: Performance comparison of neuro-symbolic architectures across domains

| Application Domain | Architecture Type | Key Performance Metrics | Compared Baselines | Explainability Metrics |

|---|---|---|---|---|

| AMD Treatment Prognosis [13] | Knowledge-guided LLM | AUROC: 0.94 ± 0.03, AUPRC: 0.92 ± 0.04, Brier Score: 0.07 | Pure neural networks, Cox regression | >85% predictions supported by knowledge-graph rules; >90% LLM explanations accurately cited biomarkers |

| Microgrid Load Restoration [30] | Neural-symbolic control | Restoration success: 91.7%, Critical load fulfillment: >95%, Average actions per event: <2 | Conventional control schemes | Transparent, rule-compliant recovery with physical feasibility checks |

| Continual Learning [31] | Brain-inspired CL framework | Superior performance on compositional benchmarks, minimal forgetting | Neural-only continual learning | Knowledge retention via symbolic reasoner |

Advantages Over Pure Paradigms

Table 2: Capability comparison of AI paradigms

| Capability | Symbolic AI | Neural Networks | Neuro-Symbolic AI |

|---|---|---|---|

| Interpretability | High (explicit rules) | Low (black box) | High (explainable reasoning) [29] |

| Data Efficiency | Low (manual coding) | Low (requires large datasets) | High (learning guided by knowledge) [28] |

| Reasoning Ability | High (logical inference) | Low (pattern matching) | High (structured reasoning) [32] |

| Handling Uncertainty | Low (brittle) | High (probabilistic) | Medium (constrained learning) |

| Knowledge Integration | High (explicit) | Low (implicit in weights) | High (both explicit and implicit) [13] |

| Adaptability | Low (static rules) | High (learning) | Medium (rule refinement) |

Experimental Protocols and Methodologies

Ophthalmic AMD Prognosis Framework

The neuro-symbolic framework for Age-related Macular Degeneration (AMD) prognosis exemplifies a rigorously validated methodology for medical applications [13]. The experimental protocol encompassed:

Data Collection and Preprocessing: A pilot cohort of ten surgically managed AMD patients (six men, four women; mean age 67.8 ± 6.3 years) provided 30 structured clinical documents and 100 paired imaging series. Imaging modalities included optical coherence tomography, fundus fluorescein angiography, scanning laser ophthalmoscopy, and ocular/superficial B-scan ultrasonography. Texts were semantically annotated and mapped to standardized ontologies, while images underwent rigorous DICOM-based quality control, lesion segmentation, and quantitative biomarker extraction [13].

Knowledge Graph Construction: A domain-specific ophthalmic knowledge graph encoded causal disease and treatment relationships, enabling neuro-symbolic reasoning to constrain and guide neural feature learning. This graph incorporated established ophthalmological knowledge including drusen progression patterns, retinal pigment epithelium degeneration pathways, and neovascularization mechanisms [13].

Integration and Training: A large language model fine-tuned on ophthalmology literature and electronic health records ingested structured biomarkers and longitudinal clinical narratives through multimodal clinical-profile prompts. The hybrid architecture was trained to produce natural-language risk explanations with explicit evidence citations, with the symbolic component ensuring logical consistency with domain knowledge [13].

Validation Methodology: Performance was evaluated on an independent test set using standard metrics (AUROC, AUPRC, Brier score) alongside explainability-specific metrics measuring rule support and explanation accuracy. Statistical significance testing (p ≤ 0.01) confirmed superiority over pure neural and classical Cox regression baselines [13].
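
Two of the standard metrics named here have short closed-form definitions that are worth spelling out. The sketch below computes the Brier score as the mean squared difference between predicted probability and outcome, and AUROC as the probability that a randomly chosen positive case is scored above a randomly chosen negative one (ties count as one half). AUPRC is omitted for brevity. The toy labels are assumptions for illustration, not data from the AMD study; for real analyses, scikit-learn's `roc_auc_score` and `brier_score_loss` are the usual choice.

```python
def brier_score(y_true, y_prob):
    """Mean squared error between predicted probabilities and binary outcomes."""
    return sum((p - y) ** 2 for y, p in zip(y_true, y_prob)) / len(y_true)


def auroc(y_true, y_prob):
    """Pairwise (Mann-Whitney) estimate of the area under the ROC curve."""
    pos = [p for y, p in zip(y_true, y_prob) if y == 1]
    neg = [p for y, p in zip(y_true, y_prob) if y == 0]
    if not pos or not neg:
        raise ValueError("AUROC needs at least one positive and one negative case")
    wins = sum(
        1.0 if p > n else 0.5 if p == n else 0.0
        for p in pos
        for n in neg
    )
    return wins / (len(pos) * len(neg))


if __name__ == "__main__":
    y_true = [1, 0, 1, 0]
    y_prob = [0.9, 0.1, 0.4, 0.6]
    print(auroc(y_true, y_prob), round(brier_score(y_true, y_prob), 3))
```

The pairwise form is quadratic in the number of cases, which is acceptable for small cohorts; it also makes clear that AUROC measures ranking quality, while the Brier score penalizes poorly calibrated probabilities.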

Microgrid Control Validation Protocol

The microgrid restoration study employed a distinct validation approach suitable for its domain [30]:

Synthetic Scenario Generation: Researchers created synthetic fault scenarios simulating equipment failures, islanding events, and demand fluctuations across a 24-hour operational timeline. This comprehensive testing environment evaluated system resilience under diverse failure conditions.

Dual-Component Architecture: Neural networks proposed potential recovery actions based on pattern recognition from historical data, while finite state machines applied logical rules and power flow limits before action execution. This separation ensured all implemented actions were physically feasible and compliant with operational constraints.

Success Metrics: The primary evaluation metric was restoration success rate, with secondary measures including critical load fulfillment percentage and action efficiency (number of actions required per event). The symbolic component's role as a "gatekeeper" provided transparent validation of all neural suggestions [30].
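
The "gatekeeper" pattern described in this protocol can be sketched in a few lines. In this illustration a plain rule check stands in for the study's finite state machine, and the proposals are hard-coded rather than produced by a neural network. The `Action` type, the two rules, and the capacity figure are assumptions made for the example, not details from the cited work.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Action:
    name: str
    load_kw: float
    critical: bool


def gatekeeper(proposals, available_kw):
    """Accept proposed actions only while the assumed rules hold; log every decision."""
    accepted, log, used = [], [], 0.0
    # Rule 1 (assumed): critical loads are considered before non-critical ones.
    ordered = sorted(proposals, key=lambda a: not a.critical)
    for action in ordered:
        # Rule 2 (assumed): total restored load must not exceed available capacity.
        if used + action.load_kw <= available_kw:
            accepted.append(action)
            used += action.load_kw
            log.append(f"ACCEPT {action.name}: {used:.0f}/{available_kw:.0f} kW in use")
        else:
            log.append(f"REJECT {action.name}: would exceed {available_kw:.0f} kW")
    return accepted, log


if __name__ == "__main__":
    # Stand-in for the neural proposer's output.
    proposals = [
        Action("restore_lighting", 40, False),
        Action("restore_hospital", 60, True),
        Action("restore_pumps", 50, True),
    ]
    accepted, log = gatekeeper(proposals, available_kw=100)
    print("\n".join(log))
```

The decision log is the point of the design: every accepted or rejected suggestion carries the rule that decided it, which is the kind of audit trail a clinical analogue would also need.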

Architectural Frameworks and Implementation

Conceptual Foundation: The Dual-Process Architecture

The theoretical foundation for neuro-symbolic integration draws heavily from cognitive science's dual-process theory, which describes human reasoning as comprising two distinct systems [28] [32]. System 1 (neural) is fast, intuitive, and subconscious—exemplified by pattern recognition in deep learning. System 2 (symbolic) is slow, deliberate, and logical—exemplified by rule-based reasoning. Neuro-symbolic architectures explicitly implement both systems, with neural components handling perceptual tasks and symbolic components managing reasoning tasks [28].

Integration Patterns for Neuro-Symbolic AI

Research has identified multiple architectural patterns for integrating neural and symbolic components, each with distinct characteristics and suitability for different applications [32]:

Symbolic[Neural] Architecture: Symbolic techniques invoke neural components for specific subtasks. Exemplified by AlphaGo, where Monte Carlo tree search (symbolic) invokes neural networks for position evaluation. This pattern maintains symbolic control while leveraging neural capabilities for perception or evaluation.

Neural | Symbolic Architecture: Neural networks interpret perceptual data as symbols and relationships that are reasoned about symbolically. The Neuro-Symbolic Concept Learner follows this pattern, with neural components extracting symbolic representations from raw data for subsequent logical reasoning.

Neural[Symbolic] Architecture: Neural models directly call symbolic reasoning engines to perform specific actions or evaluate states. Modern LLMs using plugins to query computational engines like Wolfram Alpha exemplify this approach, maintaining neural primacy while accessing symbolic capabilities when needed.
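
As a toy illustration of the Neural | Symbolic pattern, the sketch below turns detector scores into discrete symbols and then applies explicit rules that return both a conclusion and the evidence behind it. The symbol names, the threshold, and the two rules are illustrative placeholders only; they are not validated clinical criteria and are not taken from any cited system. The score dictionary stands in for the output of a trained neural network.

```python
def to_symbols(scores, threshold=0.5):
    """Stand-in for the neural stage: keep detector outputs above a threshold as symbols."""
    return {name for name, score in scores.items() if score >= threshold}


# (conclusion, required symbols): illustrative placeholders, not clinical criteria.
RULES = [
    ("suspected_retinal_detachment", {"membrane_echo", "attached_at_optic_disc"}),
    ("suspected_vitreous_hemorrhage", {"diffuse_vitreous_opacities"}),
]


def infer(symbols, rules=RULES):
    """Symbolic stage: fire every rule whose required symbols are all present."""
    findings = []
    for conclusion, required in rules:
        if required <= symbols:  # subset test: all preconditions observed
            findings.append((conclusion, sorted(required)))
    return findings


if __name__ == "__main__":
    # Hypothetical detector scores for one B-scan image.
    scores = {
        "membrane_echo": 0.92,
        "attached_at_optic_disc": 0.81,
        "diffuse_vitreous_opacities": 0.12,
    }
    for conclusion, evidence in infer(to_symbols(scores)):
        print(conclusion, "because", ", ".join(evidence))
```

Because each conclusion is returned with the symbols that triggered it, the explanation is produced by construction rather than reconstructed after the fact.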

Research Reagent Solutions for Neuro-Symbolic Experimentation

Table 3: Essential tools and platforms for neuro-symbolic research

| Tool/Category | Specific Examples | Function/Purpose | Application Context |

|---|---|---|---|

| Knowledge Representation | AllegroGraph [32], Ontologies | Structured knowledge storage and retrieval | Encoding domain knowledge (e.g., ophthalmology) |

| Differentiable Reasoning | Scallop [32], Logic Tensor Networks [32], DeepProbLog [32] | Integrating logical reasoning with gradient-based learning | Training systems with logical constraints |

| Neuro-Symbolic Programming | SymbolicAI [32] | Compositional differentiable programming | Building complex neuro-symbolic pipelines |

| Multimodal Data Processing | DICOM viewers, NLP pipelines | Handling medical images and clinical text | Processing ophthalmic data (e.g., AMD study [13]) |

| Evaluation Frameworks | XAI metrics, Rule support scoring | Quantifying explainability and reasoning quality | Validating clinical trustworthiness |

Implementation Workflow for Ophthalmic Application

The application of neuro-symbolic architectures to ophthalmic ultrasound detection follows a structured workflow designed to ensure both performance and explainability.

Hybrid neuro-symbolic architectures represent a significant advancement for validating explainable AI in ophthalmic ultrasound detection research. By integrating mechanistic knowledge of ocular anatomy and disease pathology with data-driven learning from medical images, these systems address the critical need for both accuracy and transparency in clinical AI [13] [9].

The experimental data demonstrate that neuro-symbolic approaches can achieve superior performance compared to pure neural or symbolic baselines while providing explicit reasoning pathways that clinicians can understand and trust [13]. The quantified explainability metrics, such as knowledge-graph rule support and accurate biomarker citation, provide validation mechanisms essential for regulatory approval and clinical adoption [13].

For ophthalmic ultrasound specifically, future research directions include developing specialized knowledge graphs encoding ultrasound-specific biomarkers, creating integration mechanisms optimized for ultrasound artifact interpretation, and establishing validation protocols specific to ophthalmic imaging characteristics. As these architectures mature, they offer a promising pathway toward FDA-approved AI diagnostic systems that combine the perceptual power of deep learning with the transparent reasoning required for clinical trust.

Leveraging Large Language Models (LLMs) for Generating Clinician-Readable Risk Narratives

In ophthalmic diagnostics, validating explainable artificial intelligence (XAI) models is a significant challenge, particularly for complex imaging modalities like ultrasound. While these models can achieve high diagnostic accuracy, translating their numerical outputs into clinically actionable insights remains a hurdle. This is where Large Language Models (LLMs) offer transformative potential. By generating clinician-readable risk narratives, LLMs can bridge the gap between an XAI model's detection of a pathological feature and a comprehensive, interpretable report that integrates this finding with contextual clinical knowledge. This guide explores the application of LLMs for this specific purpose, comparing their performance and outlining the experimental protocols necessary for their rigorous validation in ophthalmic ultrasound image analysis research.

The Role of LLMs in Ophthalmic Diagnostic Workflows

Large Language Models are advanced AI systems trained on vast amounts of text data, enabling them to understand, generate, and translate language with high proficiency [33]. In ophthalmology, their potential extends beyond patient education and administrative tasks to become core components of diagnostic systems [33]. When integrated with XAI for image analysis, LLMs can be tasked with interpreting the XAI's outputs—such as heatmaps from a Class Activation Mapping (CAM) technique that highlight suspicious regions in an ophthalmic ultrasound scan—and weaving them into a coherent narrative [34]. This narrative can succinctly describe the detected anomaly, quantify its risk level based on learned medical literature, suggest differential diagnoses, and even recommend subsequent investigations. For instance, a model could generate a report stating: "The explainable AI algorithm identified a hyperreflective, elevated lesion in the peripheral retina, measuring 3.2 mm in height. The associated CAM heatmap indicates high confidence in this finding. The features are consistent with a retinal detachment, conferring a high risk of vision loss if not managed urgently. Differential diagnoses include choroidal melanoma. Urgent referral to a vitreoretinal specialist is recommended." This moves the output from a simple "pathology detected" to a risk-stratified, clinically contextualized summary that supports decision-making for researchers and clinicians.

Comparative Performance of LLMs in Clinical Tasks

To objectively evaluate the potential of LLMs for generating risk narratives, it is essential to review their demonstrated performance in analogous clinical and data-summarization tasks. The following table summarizes key performance metrics from recent studies and applications.

Table 1: Performance of LLMs in Clinical and Data Interpretation Tasks

| Application / Study | LLM(s) Used | Key Performance Metric | Result | Context / Task |
|---|---|---|---|---|
| General Ophthalmology Triage [33] | GPT-4 | Triage Accuracy | 96.3% | Analyzing textual symptom descriptions to determine urgency and need for care. |
| General Ophthalmology Triage [33] | Bard | Triage Accuracy | 83.8% | Analyzing textual symptom descriptions to determine urgency and need for care. |
| Corneal Disease Diagnosis [33] | GPT-4 | Diagnostic Accuracy | 85% | Diagnosing corneal infections, dystrophies, and degenerations from text-based case descriptions. |
| Glaucoma Diagnosis [33] | ChatGPT | Diagnostic Accuracy | 72.7% | Diagnosing primary and secondary glaucoma from case descriptions, performing similarly to senior ophthalmology residents. |
| Ophthalmology Rare Disease Diagnosis [33] | GPT-4 | Diagnostic Accuracy | 90% (in ophthalmologist scenario) | Accuracy highly dependent on input data quality; best with detailed, specialist-level findings. |
| AI Medical Image Analysis (SLIViT) [35] | Specialized Vision Transformer | High Accuracy (specifics not given) | Outperformed disease-specific models | Expert-level analysis of 3D medical images (including retinal scans) using a model pre-trained on 2D data. |

The data indicate that advanced LLMs such as GPT-4 can achieve high accuracy in tasks requiring medical reasoning from text-based inputs such as symptom and case descriptions. Their performance is competitive with human practitioners in specific diagnostic and triage scenarios, which supports their use as tools for generating clinical content. Note that SLIViT is a vision transformer rather than an LLM, so its row reflects image-analysis rather than language-model performance. The success of LLMs, however, is contingent on the quality and depth of the input information [33]. This is a critical consideration when using LLMs to interpret the outputs of an ophthalmic ultrasound XAI model: the narrative's quality will depend on both the LLM's capabilities and the richness of the feature data extracted by the XAI system.

Experimental Protocols for Validating LLM-Generated Narratives

Validating an LLM-generated risk narrative is a multi-stage process that requires careful experimental design to ensure clinical relevance, accuracy, and utility. The following workflow outlines a robust methodology for such validation in the context of ophthalmic ultrasound.

Phase 1: Data Collection & Curation

A dataset of ophthalmic ultrasound images, representative of various conditions (e.g., retinal detachment, vitreous hemorrhage, intraocular tumors) and normal anatomy, must be assembled. Essential associated data includes:

- Patient Demographics: Age, sex, relevant medical history (e.g., diabetes, hypertension).

- Clinical Context: Presenting symptoms, visual acuity, prior interventions.

- Definitive Diagnoses: Confirmed through gold-standard methods like histopathology (where applicable) or longitudinal clinical follow-up.

Phase 2: Establish Ground Truth

A panel of at least two experienced ophthalmologists, blinded to the LLM's output, independently reviews each complete case (images + clinical data). Each reviewer drafts a "gold standard" risk narrative for the case. In cases of disagreement, a third senior expert makes the final determination [34]. These human-generated narratives serve as the benchmark for evaluating the LLM.

Phase 3: XAI Image Analysis & Feature Extraction

The ophthalmic ultrasound images are processed by the XAI model (e.g., a CNN with a Grad-CAM component [34]). The model outputs its classification (e.g., "normal," "pathological") and, crucially, the explainable heatmap highlighting the region of interest. Quantitative features from the heatmap and image (e.g., lesion size, reflectivity, location coordinates) are extracted into a structured data format.
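The feature-extraction step can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the pipeline used in the cited work: the heatmap is assumed to be a 2D grid of activations scaled to [0, 1], and the function name, the 0.5 threshold, and the `mm_per_pixel` calibration value are all hypothetical. The extent of a saliency region is also only a proxy for lesion size and would need to be checked against clinical measurement.

```python
from typing import Dict, List, Optional


def extract_heatmap_features(
    heatmap: List[List[float]],
    threshold: float = 0.5,      # assumed activation cut-off
    mm_per_pixel: float = 0.1,   # assumed scanner calibration
) -> Optional[Dict[str, object]]:
    """Summarize the salient region of a heatmap as structured features."""
    # Collect coordinates of all pixels at or above the threshold.
    hot = [
        (r, c)
        for r, row in enumerate(heatmap)
        for c, value in enumerate(row)
        if value >= threshold
    ]
    if not hot:
        return None  # nothing salient enough to report

    rows = [r for r, _ in hot]
    cols = [c for _, c in hot]
    height_px = max(rows) - min(rows) + 1
    width_px = max(cols) - min(cols) + 1
    return {
        "bbox": (min(rows), min(cols), max(rows), max(cols)),
        "area_px": len(hot),
        "height_mm": round(height_px * mm_per_pixel, 2),
        "width_mm": round(width_px * mm_per_pixel, 2),
        "peak_activation": max(heatmap[r][c] for r, c in hot),
    }


# Toy 4x4 heatmap with a salient 2x2 block in the centre.
heatmap = [
    [0.0, 0.1, 0.1, 0.0],
    [0.1, 0.7, 0.9, 0.2],
    [0.0, 0.6, 0.8, 0.1],
    [0.0, 0.0, 0.2, 0.0],
]
features = extract_heatmap_features(heatmap)
print(features)
```

Returning a plain dictionary keeps the output easy to serialize for whatever consumes the structured data next.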

Phase 4: LLM Narrative Generation

The structured data from Phase 3 is fed into a prompt engineered for the LLM. The prompt instructs the model to generate a concise, clinician-readable risk narrative. For example: "You are an ophthalmic specialist. Based on the following data from an ultrasound scan analysis, generate a clinical risk narrative. Data: [Insert structured data, e.g., classification='Retinal Detachment', confidence=0.96, location='superotemporal', size='>3mm', associated_subretinal_fluid=true]." The LLM then produces its version of the narrative.
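As a minimal sketch of how such a prompt might be assembled, the Python snippet below fills the example wording above with a serialized feature dictionary. The function name `build_prompt` and the choice of JSON serialization are assumptions for illustration. The call to the LLM itself is deliberately omitted because it depends on the provider and client library in use.

```python
import json
from typing import Any, Dict

# Prompt wording taken from the example in the text.
PROMPT_TEMPLATE = (
    "You are an ophthalmic specialist. Based on the following data from an "
    "ultrasound scan analysis, generate a clinical risk narrative.\n"
    "Data: {data}"
)


def build_prompt(features: Dict[str, Any]) -> str:
    """Serialize the XAI features and insert them into the prompt template."""
    # sort_keys keeps the prompt deterministic for a given feature set,
    # which helps when auditing or reproducing generated narratives.
    return PROMPT_TEMPLATE.format(data=json.dumps(features, sort_keys=True))


features = {
    "classification": "Retinal Detachment",
    "confidence": 0.96,
    "location": "superotemporal",
    "size": ">3mm",
    "associated_subretinal_fluid": True,
}
prompt = build_prompt(features)
print(prompt)
```

Logging the exact prompt alongside each generated narrative makes it possible to trace any error in the narrative back to the data the LLM actually received.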

Phase 5: Blinded Clinical Evaluation

The LLM-generated narratives and the expert-panel "gold standard" narratives are presented in a randomized and blinded order to a separate group of clinical evaluators (ophthalmologists and researchers). They score each narrative on several criteria using Likert scales (e.g., 1-5), as detailed in the metrics table below.
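One way to implement the randomized, blinded presentation is sketched below in Python. The function name, the `N000`-style blind identifiers, and the fixed seed are assumptions for illustration. Evaluators see only the queue, while the unblinding key is held separately until scoring is complete.

```python
import random
from typing import Dict, List, Tuple


def build_blinded_queue(
    llm: Dict[str, str],
    expert: Dict[str, str],
    seed: int = 0,
) -> Tuple[List[Dict[str, str]], Dict[str, Tuple[str, str]]]:
    """Shuffle narratives from both sources and hide their origin.

    Returns (queue, key): the queue is shown to evaluators and the key maps
    each blind ID back to (case_id, source) for later unblinding.
    """
    rng = random.Random(seed)  # seeded so the ordering is reproducible
    items = [(case_id, "llm", text) for case_id, text in llm.items()]
    items += [(case_id, "expert", text) for case_id, text in expert.items()]
    rng.shuffle(items)

    queue: List[Dict[str, str]] = []
    key: Dict[str, Tuple[str, str]] = {}
    for i, (case_id, source, text) in enumerate(items):
        blind_id = f"N{i:03d}"
        queue.append({"blind_id": blind_id, "text": text})
        key[blind_id] = (case_id, source)
    return queue, key


# Placeholder narratives; real text would come from Phases 2 and 4.
llm_narratives = {"case-01": "LLM narrative A", "case-02": "LLM narrative B"}
expert_narratives = {"case-01": "Expert narrative A", "case-02": "Expert narrative B"}
queue, key = build_blinded_queue(llm_narratives, expert_narratives, seed=42)
for item in queue:
    print(item["blind_id"], item["text"])
```

Note that this hides only the source label. Stylistic cues in the text itself may still reveal whether a narrative was machine-generated.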

Phase 6: Quantitative Metric Analysis

The scores from the evaluators are compiled and analyzed statistically. Key performance indicators (KPIs) are calculated to provide a quantitative comparison of the LLM's performance against the ground truth.

Table 2: Key Performance Indicators for LLM-Generated Narrative Validation

| Performance Indicator | Description | Method of Calculation |
|---|---|---|
| Clinical Accuracy | Measures the factual correctness of the medical content. | Average evaluator score on a Likert scale; compared to ground truth. |
| Narrative Readability | Assesses the clarity, structure, and fluency of the generated text. | Average evaluator score using standardized readability metrics or Likert scales. |
| Clinical Actionability | Evaluates how directly the narrative suggests or implies next steps. | Average evaluator score on a Likert scale regarding usefulness for decision-making. |
| Risk Stratification Concordance | Measures if the narrative's implied risk level (low/medium/high) matches the ground truth. | Percentage agreement or Cohen's Kappa with expert panel risk assessment. |
| Error Rate | Quantifies the frequency of hallucinations or major factual errors. | Percentage of narratives containing one or more significant inaccuracies. |
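Several of these indicators reduce to short calculations. The Python sketch below computes a mean Likert score, Cohen's Kappa for risk stratification concordance, and the error rate using only the standard library. The function names and the toy ratings are assumptions for illustration. In practice a library routine such as scikit-learn's `cohen_kappa_score` could replace the hand-written Kappa.

```python
from collections import Counter
from statistics import mean
from typing import Sequence


def cohens_kappa(a: Sequence[str], b: Sequence[str]) -> float:
    """Unweighted Cohen's Kappa for two equal-length label sequences."""
    if len(a) != len(b) or not a:
        raise ValueError("sequences must be non-empty and of equal length")
    n = len(a)
    # Observed agreement: fraction of items with identical labels.
    observed = sum(x == y for x, y in zip(a, b)) / n
    # Chance agreement from each rater's marginal label frequencies.
    count_a, count_b = Counter(a), Counter(b)
    labels = set(count_a) | set(count_b)
    expected = sum(count_a[label] * count_b[label] for label in labels) / (n * n)
    if expected == 1.0:
        return 1.0  # both raters used one identical label throughout
    return (observed - expected) / (1.0 - expected)


def error_rate(has_major_error: Sequence[bool]) -> float:
    """Percentage of narratives flagged with at least one major error."""
    return 100.0 * sum(has_major_error) / len(has_major_error)


# Toy data: risk levels implied by LLM narratives vs. the expert panel.
llm_risk = ["high", "high", "low", "medium", "low", "high"]
expert_risk = ["high", "medium", "low", "medium", "low", "high"]
kappa = cohens_kappa(llm_risk, expert_risk)

accuracy_scores = [4, 5, 3, 4]            # 1-5 Likert ratings
mean_accuracy = mean(accuracy_scores)
pct_errors = error_rate([False, True, False, False])

print(f"kappa={kappa:.2f} mean_accuracy={mean_accuracy} error_rate={pct_errors}%")
```

Because low, medium, and high are ordered categories, a weighted Kappa that penalizes high-versus-low disagreements more than adjacent ones may be a better fit. The unweighted form is shown here for simplicity.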

Essential Research Reagent Solutions for Implementation

Building and validating a system for LLM-generated risk narratives requires a suite of core tools and resources. The following table details these essential components.

Table 3: Key Research Reagents and Tools for LLM-XAI Integration

| Item / Solution | Function in the Workflow | Specific Examples / Notes |
|---|---|---|
| Curated Ophthalmic Ultrasound Dataset | Serves as the foundational input for training and validating the XAI and LLM systems. | Must include images paired with comprehensive clinical data and definitive diagnoses. Size and diversity are critical for model robustness. |
| Explainable AI Model (XAI) | Performs the primary image analysis, detecting and localizing pathologies in ultrasound scans. | Convolutional Neural Networks (CNNs) with Class Activation Mapping (CAM) techniques like Grad-CAM [34]. EfficientNet architectures are commonly used. |
| Large Language Model (LLM) | Generates the clinician-readable risk narratives from the structured outputs of the XAI model. | General-purpose models (e.g., GPT-4, Claude, Gemini) or domain-specialized models fine-tuned on medical literature [33]. |
| Annotation & Evaluation Platform | Facilitates the blinded review and scoring of narratives by human experts. | Custom web interfaces or platforms like REDCap that allow for randomized, blinded presentation of narratives and collection of Likert-scale scores. |
| Statistical Analysis Software | Used to compute performance metrics and determine the statistical significance of results. | Python (with scikit-learn, SciPy), R, or SAS. Used for calculating inter-rater reliability (e.g., Cohen's Kappa), confidence intervals, and p-values. |

The integration of LLMs with explainable AI for ophthalmic ultrasound presents a promising path toward more intelligible and trustworthy diagnostic systems. By employing rigorous experimental protocols and objective performance comparisons, researchers can develop and validate tools that transform raw image analysis into clear, actionable clinical risk narratives, thereby enhancing both research validation and potential future clinical decision-making.

The integration of multimodal data represents a paradigm shift in medical artificial intelligence (AI), addressing the inherent limitations of single-modality analysis. Multimodal data fusion systematically combines information from diverse sources including medical images, clinical narratives, and structured electronic health records to create comprehensive patient representations. This approach is particularly valuable in ophthalmology, where diagnostic decisions often rely on synthesizing information from multiple imaging technologies and clinical assessments [36] [37]. The fundamental premise is that different modalities provide complementary information: ultrasound offers internal structural data, optical coherence tomography (OCT) provides high-resolution cross-sectional imagery, and clinical narratives contribute contextual patient information that guides interpretation [38] [39].