Validating Machine Learning Models for Ventricular Tachycardia Ablation: From Algorithm Development to Clinical Integration


Abstract

This article provides a comprehensive framework for the development and validation of machine learning (ML) models aimed at improving outcomes in ventricular tachycardia (VT) ablation. It explores the foundational clinical challenges that motivate ML applications, details the methodological pipeline from data preparation to model selection, and addresses critical troubleshooting aspects like handling class imbalance and ensuring model interpretability. Furthermore, it outlines rigorous internal, external, and real-world validation paradigms, including comparative analyses against traditional statistical methods and clinical benchmarks. Designed for researchers and drug development professionals, this review synthesizes current evidence and best practices to guide the creation of robust, clinically translatable ML tools that can enhance risk stratification, procedural planning, and long-term prognosis for patients undergoing VT ablation.

The Clinical Imperative: Foundational Challenges in Ventricular Tachycardia Ablation Driving ML Innovation

Machine learning has revolutionized cardiovascular prognostication, yet a significant gap persists in understanding long-term heart failure and mortality risks following catheter ablation for ventricular tachyarrhythmias. While existing models largely target peri-procedural complications, recurrence, or immediate procedural success [1], patients undergoing ablation remain susceptible to cerebrovascular events and cumulative excess mortality—hazards seldom quantified in contemporary literature. This prognostic gap limits clinicians' ability to deliver truly personalized follow-up care for a growing population of ablation recipients [1].

The integration of machine learning into cardiac electrophysiology research represents a paradigm shift, offering powerful tools to decipher complex patterns in multidimensional patient data. This review examines the current landscape of machine learning applications for predicting long-term outcomes post-ablation, with particular focus on model architectures, performance benchmarks, and methodological frameworks for translating algorithmic predictions into clinically actionable insights.

Comparative Performance of Machine Learning Models

Model Architectures and Performance Metrics

Table 1: Machine learning model performance for three-year outcome prediction after premature ventricular complex (PVC) ablation and for VT ablation target localization

| Prediction Task | Best Performing Model | ROC AUC | Alternative Models | Sampling Method | Key Predictors |
|---|---|---|---|---|---|
| Three-year heart failure | LightGBM with ROSE | 0.822 | Logistic Regression, Decision Tree, Random Forest, XGBoost | ROSE | Age, prior HF, malignancy, ESRD |
| Three-year mortality | Logistic Regression with ROSE | 0.886 | LightGBM with ROSE (AUC: 0.882) | ROSE | Age, prior HF, malignancy, ESRD |
| VT ablation target localization | Random Forest | 0.821 | Other ML algorithms | None | EGM features from substrate mapping |

Multiple studies have demonstrated the superior performance of ensemble methods and gradient boosting algorithms for long-term outcome prediction. In a nationwide cohort of 4,195 patients who underwent PVC ablation, LightGBM with random over-sampling examples (ROSE) achieved the highest ROC AUC (0.822) for predicting three-year heart failure, while logistic regression with ROSE and LightGBM with ROSE showed balanced performance for three-year mortality prediction with ROC AUCs of 0.886 and 0.882, respectively [1]. Pairwise DeLong tests indicated these leading models formed a high-performing cluster without significant differences in ROC AUC [1].

For the specialized task of ventricular tachycardia ablation target localization, random forest classifiers have shown good discrimination. In a porcine model of chronic myocardial infarction, random forest classification based on unipolar signals from sinus rhythm mapping achieved an AUC of 0.821 with sensitivity and specificity of 81.4% and 71.4%, respectively, for identifying critical sites for ablation [2]. This approach analyzed 46 signal features representing functional, spatial, spectral, and time-frequency properties from 35,068 electrograms [2].
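Sensitivity and specificity figures such as those above depend on the probability cutoff applied to the classifier output. The following is a minimal sketch of that relationship, assuming binary labels and scores in [0, 1]; the function name, the cutoffs, and the toy scores are illustrative assumptions and are not data from [2].

```python
def sensitivity_specificity(labels, scores, threshold=0.5):
    """Return (sensitivity, specificity) when scores >= threshold are called positive."""
    tp = sum(1 for y, s in zip(labels, scores) if y == 1 and s >= threshold)
    fn = sum(1 for y, s in zip(labels, scores) if y == 1 and s < threshold)
    tn = sum(1 for y, s in zip(labels, scores) if y == 0 and s < threshold)
    fp = sum(1 for y, s in zip(labels, scores) if y == 0 and s >= threshold)
    return tp / (tp + fn), tn / (tn + fp)


# Hypothetical labels (1 = critical site) and classifier scores.
labels = [1, 1, 1, 1, 0, 0, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.6, 0.3, 0.8, 0.4, 0.35, 0.2, 0.1, 0.05]

for cutoff in (0.3, 0.5, 0.7):
    sens, spec = sensitivity_specificity(labels, scores, cutoff)
    print(f"cutoff={cutoff}: sensitivity={sens:.2f}, specificity={spec:.2f}")
```

Lowering the cutoff raises sensitivity at the cost of specificity, which is why a single reported pair should be read together with the AUC.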

Addressing Class Imbalance in Clinical Datasets

The challenge of class imbalance—where adverse events are relatively rare—represents a critical methodological consideration in prognostic model development. Studies have systematically compared techniques such as synthetic minority over-sampling technique (SMOTE) and random over-sampling examples (ROSE) to address this limitation [1]. For predicting three-year outcomes post-ablation, ROSE consistently yielded superior performance with both logistic regression and LightGBM models, suggesting the importance of tailored sampling strategies for specific clinical endpoints [1].
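To make the idea of over-sampling concrete, the sketch below duplicates minority-class rows at random until both classes are equally represented. This is plain random over-sampling, a simpler stand-in and not the ROSE or SMOTE procedures compared in [1]; the function name and the toy data are hypothetical.

```python
import random


def random_oversample(rows, labels, seed=0):
    """Duplicate minority-class rows at random until both classes have equal counts.

    Plain random over-sampling; NOT ROSE or SMOTE (illustrative stand-in only).
    """
    rng = random.Random(seed)
    pos = [i for i, y in enumerate(labels) if y == 1]
    neg = [i for i, y in enumerate(labels) if y == 0]
    minority, majority = (pos, neg) if len(pos) < len(neg) else (neg, pos)
    if not minority:
        raise ValueError("both classes must be present")
    # Draw minority indices with replacement to close the gap.
    extra = [rng.choice(minority) for _ in range(len(majority) - len(minority))]
    idx = list(range(len(labels))) + extra
    rng.shuffle(idx)
    return [rows[i] for i in idx], [labels[i] for i in idx]


# Toy data: 2 events among 10 patients (hypothetical ages as the only feature).
X = [[age] for age in (45, 52, 60, 71, 38, 66, 80, 59, 49, 77)]
y = [0, 0, 0, 1, 0, 0, 1, 0, 0, 0]
X_bal, y_bal = random_oversample(X, y)
print("events:", sum(y_bal), "non-events:", len(y_bal) - sum(y_bal))
```

Resampling of this kind is normally applied to the training portion only, so that held-out data keep the original event rate.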

Stacking ensemble models that integrate multiple base learners have also shown promise for mortality prediction in complex cardiac patients. In patients with heart failure and atrial fibrillation, a stacking model that combined Random Forest, XGBoost, LightGBM, and K-Nearest Neighbor algorithms achieved an AUC of 0.768 in the testing set, outperforming individual base classifiers [3].

Experimental Protocols and Methodological Frameworks

Cohort Selection and Feature Engineering

Table 2: Methodological approaches for dataset construction in ablation outcome studies

| Study Component | NHIRD Cohort Study [1] [4] | Porcine VT Model [2] | AF Mortality Prediction [5] |
|---|---|---|---|
| Population/Sample | 4,195 adults with PVC ablation | 13 pigs with chronic MI | 18,727 hospitalized AF patients |
| Data Sources | Taiwan National Health Insurance Research Database | Multipolar catheters (Advisor HD Grid) | Electronic medical records |
| Key Variables | Demographics, comorbidities, medications | 46 EGM features | 79 clinical variables |
| Outcome Measures | 3-year HF and all-cause mortality | Localized VT critical sites | In-hospital cardiac mortality |
| Class Handling | SMOTE and ROSE | Not applicable | Downsampling and class weighting |

The foundation of robust machine learning models begins with rigorous cohort selection and feature engineering. The National Health Insurance Research Database (NHIRD) study implemented a PRISMA-style flow diagram for patient selection, identifying adults with PVC who underwent catheter ablation between 2004 and 2016 [1]. Exclusion criteria specifically removed patients with atrial fibrillation, atrial flutter, or paroxysmal supraventricular tachycardia within 180 days before enrollment to focus the analytical cohort [1]. Baseline demographic and clinical data encompassed age, gender, comorbidities including ventricular tachycardia, acute coronary syndrome, hypertension, diabetes, and various cardiac medications [1].

In the porcine VT ablation target localization study, researchers employed a sophisticated feature extraction pipeline computing 46 signal features representing functional, spatial, spectral, and time-frequency properties from each bipolar and unipolar electrogram [2]. Mapping sites within 6 mm from critical VT circuit components (early, mid-, and late diastolic) were considered potential ablation targets, creating a labeled dataset for supervised learning [2].

Model Training and Validation Protocols

A consistent theme across high-performing studies is the implementation of rigorous validation frameworks. The NHIRD study employed stratified five-fold cross-validation, with discrimination measured by the area under the receiver operating characteristic curve (ROC AUC) [1]. Because ROC analysis can look optimistic when events are rare, researchers also examined precision-recall (PR) curves as a complementary performance metric [1]. This dual-assessment approach provides a more comprehensive evaluation of model performance on imbalanced datasets.
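The two ingredients of this evaluation, stratified fold assignment and the ROC and PR summaries, can be sketched in a few lines. This is an illustrative, dependency-free sketch and not the pipeline used in [1]; all function names are hypothetical, model fitting is omitted, and average precision is used here as one common summary of the PR curve.

```python
import random


def stratified_folds(labels, k=5, seed=0):
    """Assign sample indices to k folds while preserving class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for cls in sorted(set(labels)):
        idx = [i for i, y in enumerate(labels) if y == cls]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)  # deal each class round-robin
    return folds


def roc_auc(labels, scores):
    """Probability that a random positive outranks a random negative (ties count 0.5)."""
    pos = [s for y, s in zip(labels, scores) if y == 1]
    neg = [s for y, s in zip(labels, scores) if y == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


def average_precision(labels, scores):
    """Mean of the precision values at each true positive, ranked by score."""
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    hits, total = 0, 0.0
    for rank, (_, y) in enumerate(ranked, start=1):
        if y == 1:
            hits += 1
            total += hits / rank
    return total / hits


# Hypothetical cohort with a 10% event rate.
cohort_labels = [1] * 10 + [0] * 90
folds = stratified_folds(cohort_labels, k=5)
print("events per fold:", [sum(cohort_labels[i] for i in f) for f in folds])

# Tiny worked example of both metrics.
print(roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
print(average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8]))
```

In a full workflow each fold would serve once as the held-out set, with the model refit on the remaining four folds before scoring.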

For the in-hospital mortality prediction study in AF patients, researchers implemented a five-fold cross-validation technique with careful hyperparameter optimization [5]. The dataset was partitioned with 80% for training and 20% for independent validation, with continuous variables showing less than 3% missing data imputed using median values [5]. This methodology ensured robustness despite the real-world nature of the electronic health record data.
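A minimal sketch of the two preprocessing steps described above, an 80/20 partition and median imputation of a continuous variable, is shown below. The helper names and the toy creatinine values are hypothetical. Computing the median from the training portion only is an assumption made here to avoid information leaking from the validation set; the source does not state how this was handled in [5].

```python
import random
from statistics import median


def split_indices(n, test_fraction=0.2, seed=0):
    """Shuffle indices 0..n-1 and return (train_indices, test_indices)."""
    idx = list(range(n))
    random.Random(seed).shuffle(idx)
    n_test = round(n * test_fraction)
    return idx[n_test:], idx[:n_test]


def impute_median(column, fill=None):
    """Replace None entries with `fill`, or with the column's own median if not given."""
    if fill is None:
        fill = median(v for v in column if v is not None)
    return [fill if v is None else v for v in column]


# Hypothetical continuous variable with missing entries (None).
creatinine = [0.9, 1.1, None, 1.4, 0.8, 2.0, None, 1.0, 1.2, 0.7]
train_idx, test_idx = split_indices(len(creatinine))

# Assumption: the median is learned on training rows and reused for validation rows.
train_median = median(creatinine[i] for i in train_idx if creatinine[i] is not None)
imputed = impute_median(creatinine, fill=train_median)
print(len(train_idx), len(test_idx), train_median)
```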

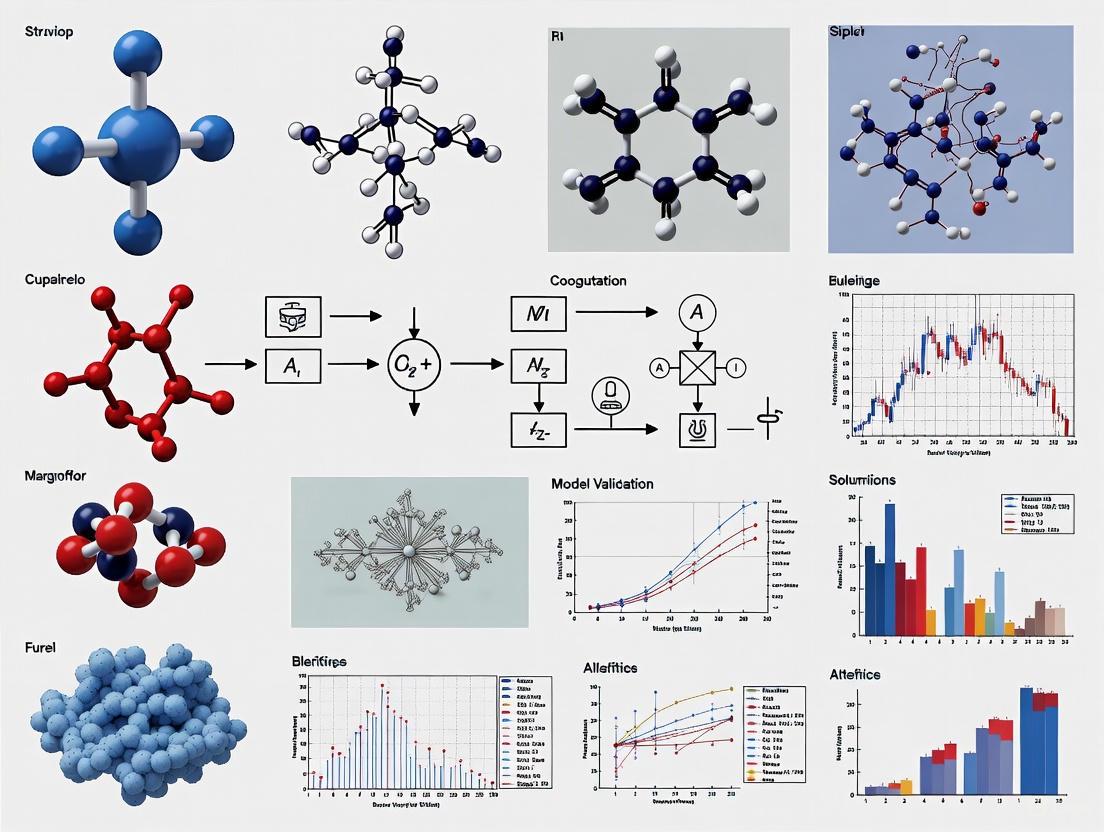

Figure 1: Machine learning workflow for ablation outcome prediction

Table 3: Essential research reagents and computational tools for ablation outcome studies

| Tool Category | Specific Resource | Application in Research | Representative Use Case |
|---|---|---|---|
| Data Sources | Taiwan NHIRD | Nationwide cohort studies | Long-term outcomes in 4,195 PVC ablation patients [1] |
| Mapping Systems | EnSite Precision with Advisor HD Grid | High-density substrate mapping | Collection of 56 substrate maps and 35,068 EGMs [2] |
| ML Algorithms | LightGBM, XGBoost, Random Forest | Outcome prediction and target localization | Three-year HF prediction (AUC: 0.822) [1] |
| Interpretation | SHAP (SHapley Additive exPlanations) | Model explainability | Quantifying feature contributions [1] |
| Sampling Methods | SMOTE, ROSE | Addressing class imbalance | Improving sensitivity for rare events [1] |

The research toolkit for machine learning in ablation outcomes encompasses both data resources and analytical methods. The Taiwan National Health Insurance Research Database (NHIRD) represents a particularly valuable resource, encompassing over 99% of Taiwan's 23 million residents and providing comprehensive coverage of healthcare services across medical centers, regional hospitals, and primary care clinics [1] [4]. This population breadth enables investigation of rare outcomes and long-term trajectories.

For electrophysiological feature extraction, high-density mapping systems such as the Advisor HD Grid with EnSite Precision provide the resolution necessary for machine learning approaches [2]. These systems enable collection of tens of thousands of electrical signals for analysis of functional, spatial, spectral, and time-frequency properties that inform ablation target identification [6].

Interpretability frameworks, particularly SHAP (SHapley Additive exPlanations), have emerged as critical components for clinical translation of machine learning models. By quantifying feature contributions and directionality at both cohort and patient levels, SHAP values help bridge the gap between algorithmic predictions and clinical decision-making [1] [5].
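The quantity that SHAP approximates, the Shapley value of each feature for a single prediction, can be computed exactly when the number of features is small. The sketch below does this by enumerating feature subsets, with "absent" features replaced by a baseline value. It is a toy illustration and not the SHAP library; the risk model, its coefficients, and the patient values are hypothetical.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance; absent features take baseline values."""
    n = len(x)

    def value(present):
        z = [x[i] if i in present else baseline[i] for i in range(n)]
        return predict(z)

    phi = [0.0] * n
    for i in range(n):
        others = [j for j in range(n) if j != i]
        for size in range(n):
            # Weight of a coalition of this size in the Shapley average.
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi


def toy_risk(z):
    """Hypothetical linear risk score over (age, prior HF, ESRD)."""
    age, prior_hf, esrd = z
    return 0.03 * age + 0.8 * prior_hf + 0.5 * esrd


patient = [70, 1, 0]    # hypothetical patient
reference = [60, 0, 0]  # hypothetical cohort baseline
phi = shapley_values(toy_risk, patient, reference)
print([round(p, 3) for p in phi])
```

The contributions sum to the difference between the patient's prediction and the baseline prediction, which is the property that makes per-patient explanations additive.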

The integration of machine learning into ventricular tachycardia ablation research has generated powerful tools for addressing long-term prognostic gaps in heart failure and mortality. Ensemble methods, particularly LightGBM and random forest, were among the best-performing models for both outcome prediction and ablation target localization, although logistic regression with ROSE performed comparably for three-year mortality [1]. The methodological consistency across studies (rigorous validation frameworks, appropriate handling of class imbalance, and model explanation techniques) provides a template for future research in this domain.

As the field progresses, key challenges remain in transporting these models across healthcare systems and integrating them into clinical workflows. The promising performance of explainable models like logistic regression with advanced sampling techniques suggests a path forward that balances predictive power with interpretability. Future research directions should focus on external validation across diverse populations, real-world implementation in electronic health record systems, and prospective evaluation of model-guided clinical decision-making for post-ablation care planning.

Ventricular tachycardia (VT) in the setting of structural heart disease is a life-threatening arrhythmia that poses a significant challenge for clinical management. The heterogeneity of the electrophysiological substrate formed after myocardial infarction plays a crucial role in the development and perpetuation of reentrant VT circuits. Characterization of this substrate heterogeneity, particularly as influenced by infarct location, has become a central focus in developing effective ablation strategies. The complex architectural organization of scar tissue, border zones, and surviving myocardial channels creates the necessary milieu for reentry to occur, with critical isthmus sites often located in scar border zones that harbor abnormal electrograms [7] [8]. This review comprehensively compares current technologies and methodologies for characterizing VT substrate heterogeneity, with particular emphasis on how infarct location influences substrate properties and the subsequent implications for ablation therapy. We examine the experimental protocols, performance metrics, and clinical validation of approaches ranging from novel digital twin technology and machine learning algorithms to advanced electrogram mapping techniques, providing researchers and clinicians with a structured framework for evaluating these rapidly evolving tools in the context of personalized medicine for VT ablation.

Comparative Analysis of VT Substrate Characterization Technologies

The table below summarizes the quantitative performance data and key characteristics of major technologies for VT substrate characterization.

Table 1: Performance Comparison of VT Substrate Characterization Technologies

| Technology | Primary Methodology | Sensitivity (%) | Specificity (%) | AUC | Spatial Agreement | Key Limitations |
|---|---|---|---|---|---|---|
| Heart Digital Twins [7] | MRI-based computational modeling | 81.3 | 83.8 | - | κ=0.46 (moderate) | Limited spatial resolution; Computational intensity |
| Machine Learning (EGM Analysis) [2] | Random forest on electrogram features | 81.4 | 71.4 | 0.821 | - | Limited clinical validation; Animal model data |
| Multi-domain ML with Ensemble Trees [9] | Time, frequency, time-scale, and spatial feature analysis | - | - | - | Accuracy: 93% (cross-validation); 84% (leave-one-subject-out) | Small patient cohort (n=9); Single-center study |
| Vector Field Heterogeneity Mapping [10] | Omnipolar mapping of propagation discontinuities | - | - | - | Significant differences between isthmus and normal tissue (p<0.001) | Substantial site overlap; Not stand-alone |
| Conventional Substrate Mapping [8] | Bipolar voltage criteria (scar <0.5 mV, border zone 0.5-1.5 mV) | - | - | - | Established clinical standard | Limited functional assessment; Directional sensitivity |
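The conventional bipolar voltage criteria in the last row amount to a simple threshold rule. A minimal sketch follows, assuming that voltages above 1.5 mV are treated as normal and that the 0.5 mV and 1.5 mV boundaries fall in the border zone; the function name and the boundary handling are assumptions, not taken from [8].

```python
def classify_bipolar_voltage(voltage_mv, scar_below=0.5, border_upto=1.5):
    """Label a bipolar electrogram amplitude (mV) using the conventional cutoffs."""
    if voltage_mv < 0:
        raise ValueError("amplitude must be non-negative")
    if voltage_mv < scar_below:
        return "dense scar"
    if voltage_mv <= border_upto:
        return "border zone"
    return "normal"  # assumption: anything above the border-zone range


# Hypothetical amplitudes from a substrate map.
for amplitude in (0.2, 0.5, 1.0, 1.5, 3.2):
    print(amplitude, "mV ->", classify_bipolar_voltage(amplitude))
```

A rule of this kind uses a single parameter per site, which is the limitation that the multi-feature approaches in the table aim to address.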

Table 2: Target Identification Capabilities by Mapping Approach

| Mapping Approach | Critical Site Identification | Infarct Location Considerations | Clinical Validation |
|---|---|---|---|
| Local Abnormal Ventricular Activities (LAVA) [11] | Low-amplitude, high-frequency potentials after or within far-field EGM | Effective for endocardial and epicardial substrates; Non-ischemic and ischemic cardiomyopathy | Elimination correlated with reduced VT recurrence/death (HR 0.49) |
| Late Potentials (LPs) [11] | Signals occurring after terminal portion of surface QRS | Identifies slow conduction regions across infarct locations | 90.5% freedom from VT recurrence with complete LP elimination |
| Isochronal Late Activation Maps (ILAM) [11] | Closely packed isochrone lines indicating slow conduction | Highlights conduction barriers specific to infarct geometry | 75% reduction in VT recurrence compared to standard mapping |
| High-Density Multipolar Mapping [8] [11] | Uncovering low-voltage EGMs and conduction channels | Reveals detailed architecture regardless of infarct location | 97% freedom from device-detected therapies with Advisor HD Grid |

Experimental Protocols for VT Substrate Assessment

Digital Twin Creation and Simulation

The protocol for heart digital twin generation begins with acquisition of 3D late gadolinium-enhanced cardiac magnetic resonance (LGE-CMR) images using either 3T or 1.5T scanners, adapted for patients with cardiac devices [7]. Following image acquisition, myocardial tissue is segmented semi-automatically: landmark control points are placed on the endocardial and epicardial surfaces, and boundaries are defined automatically using a variational implicit method. Finite-element meshes with approximately 400 µm resolution are generated, containing ~4 million individual nodes [7]. Fiber directionality is overlaid using a validated rule-based approach, and tissue characteristics (healthy tissue, border zone, dense scar) are assigned by signal thresholding with the full-width half-maximum approach. Electrophysiological properties are applied to each tissue region: healthy tissue uses the ten Tusscher ionic model, and border zones incorporate longer action potential duration and reduced conduction velocity based on experimental models. Wavefront propagation is simulated by solving the reaction-diffusion partial differential equation using openCARP software on parallel computing systems [7].

VT induction is simulated through pacing protocols applied sequentially to 7 left ventricular sites based on a condensed American Heart Association 17-segment model, with preferential projection onto the closest scar border zone. Pacing delivers a train of 6 beats at 600 ms cycle length followed by up to 3 extrastimuli, with reentry defined as at least 2 rotational cycles at the same site [7].
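The timing of such a pacing protocol can be written down explicitly. The sketch below builds the stimulus schedule for a 6-beat drive train at 600 ms followed by up to 3 extrastimuli. The extrastimulus coupling intervals are not given in the text, so the values used here are hypothetical, as is the function name; this is a scheduling illustration and not openCARP configuration.

```python
def stimulus_times(n_drive=6, drive_cycle_ms=600, extra_coupling_ms=()):
    """Return stimulus onset times (ms) for a drive train plus extrastimuli.

    Each extrastimulus is timed relative to the preceding stimulus.
    """
    if len(extra_coupling_ms) > 3:
        raise ValueError("protocol allows at most 3 extrastimuli")
    times = [beat * drive_cycle_ms for beat in range(n_drive)]
    for coupling in extra_coupling_ms:
        times.append(times[-1] + coupling)
    return times


# Hypothetical coupling intervals for two extrastimuli (S2, S3).
schedule = stimulus_times(extra_coupling_ms=(300, 280))
print(schedule)
```

Repeating the schedule at each of the 7 pacing sites gives the full set of induction attempts for one digital twin.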

Machine Learning Model Development

The development of machine learning algorithms for VT substrate characterization follows structured protocols depending on the data modality. For electrogram-based classification, as implemented in the porcine model study, data collection involves invasive electrophysiological studies using multipolar catheters during sinus rhythm and pacing from multiple sites [2]. A total of 46 signal features representing functional, spatial, spectral, and time-frequency properties are computed from each bipolar and unipolar electrogram. For the detection of arrhythmogenic sites in post-ischemic VT, features are extracted across multiple domains: time domain (peak-to-peak amplitude, fragmentation measure), frequency domain, time-scale domain, and spatial domain [9]. The dataset construction involves careful annotation by experienced electrophysiologists blinded to case details and potential positions, using specialized MATLAB graphical interfaces. The machine learning workflow employs a training-validation-testing design with random sampling of patients into respective cohorts (approximately 81%, 9%, and 10% splits). Model training iteratively tests multiple classifiers (random forest, ensemble trees, logistic regression) with performance evaluation through area under the curve (AUC) calculations from internal validation datasets to determine optimal discretization cutoff thresholds [2] [12]. For the 12-lead ECG classification of outflow tract VT origins, the protocol implements a multistage scheme with automated feature extraction from standard ECGs, incorporating features from both sinus rhythm and PVC/VT QRS complexes [12].
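Because many electrograms come from the same subject, the random split described above is made at the patient level, so that no subject contributes to more than one cohort. A minimal sketch with the approximate 81/9/10 proportions follows; the function name, the seed, and the patient identifiers are hypothetical.

```python
import random


def split_patients(patient_ids, fractions=(0.81, 0.09, 0.10), seed=0):
    """Randomly assign unique patients to training, validation, and testing sets."""
    ids = sorted(set(patient_ids))
    random.Random(seed).shuffle(ids)
    n_train = round(len(ids) * fractions[0])
    n_val = round(len(ids) * fractions[1])
    train = set(ids[:n_train])
    val = set(ids[n_train:n_train + n_val])
    test = set(ids[n_train + n_val:])  # remainder goes to testing
    return train, val, test


# Hypothetical cohort of 100 patients.
patients = [f"P{i:03d}" for i in range(100)]
train, val, test = split_patients(patients)
print(len(train), len(val), len(test))
```

Every recording then inherits the cohort of its patient, which keeps the internal validation and test estimates free of within-patient leakage.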

High-Density Functional Substrate Mapping

Functional substrate mapping protocols utilize high-density multipolar catheters with closely spaced electrodes (2-6-2 mm spacing) to acquire detailed electroanatomical maps during sinus rhythm [8] [11]. The mapping procedure begins with system setup using 3D electroanatomical mapping systems (CARTO, EnSite Precision, or Rhythmia). The protocol involves obtaining a geometry of the cardiac chamber of interest, followed by high-density mapping with the multipolar catheter ensuring stable catheter contact and position. Points are acquired with a projection distance below 8 mm for accurate spatial localization [9]. During map acquisition, specific attention is directed toward identifying regions of slow conduction characterized by local abnormal ventricular activities (LAVA), late potentials (LPs), and fractionated electrograms. Functional assessment may be enhanced through pacing protocols using short-coupled extrastimuli to uncover hidden slow conduction areas not apparent during baseline rhythm [11]. The definition of unexcitable scar is confirmed by the absence of visible electrograms and lack of local pacing capture, particularly when using mapping catheters with smaller and narrower-spaced bipolar electrodes [8].

Diagram 1: Integrated Workflow for VT Substrate Characterization Technologies

Impact of Infarct Location on Substrate Heterogeneity

The location of myocardial infarction significantly influences the characteristics of the resulting arrhythmogenic substrate, with specific implications for mapping and ablation strategies. Septal infarcts create particularly challenging substrates due to the complex transmural architecture and involvement of the conduction system [8]. In these cases, high-density mapping with multipolar catheters has demonstrated superior capability in identifying conducting channels through the septum that may be missed by conventional point-by-point mapping [8]. Anteroseptal scars specifically require careful differentiation between endocardial and epicardial substrates, with unipolar voltage mapping playing a crucial role in detecting epicardial VT substrate in patients with non-ischemic left ventricular cardiomyopathy [2].

Inferior wall infarcts often exhibit more predictable transmural patterns but may involve the papillary muscles and peri-valvular regions, creating complex three-dimensional reentry circuits [8]. The functional properties of these substrates demonstrate location-specific characteristics, with inferior scars showing greater prevalence of late potentials in the peri-infarct zone compared to anterior scars [11]. Apical infarcts create substrates with distinct functional properties, often exhibiting smaller critical isthmuses that require higher mapping density for accurate identification [8]. The recent advent of omnipolar mapping technology has proven particularly valuable in characterizing apical substrates by providing voltage, timing, and activation direction independent of catheter orientation [11].

The heterogeneity within infarct border zones also demonstrates location-dependent patterns. Anterior infarcts typically show more extensive border zones with greater electrogram fragmentation compared to inferior infarcts [8]. Vector field heterogeneity mapping has revealed that the entrance sites of VT isthmuses exhibit significantly higher heterogeneity values (0.61 ± 0.24) compared to exit sites (0.44 ± 0.27), with these patterns showing consistent location-specific variations [10]. These findings highlight the importance of tailored mapping approaches based on infarct location to optimize identification of critical ablation targets.

Research Reagent Solutions for VT Substrate Investigation

Table 3: Essential Research Materials for VT Substrate Characterization Studies

| Category | Specific Product/Technology | Research Application | Key Features |
|---|---|---|---|
| Electroanatomical Mapping Systems | CARTO 3 (Biosense Webster) [8] [9] | 3D substrate mapping and navigation | Integration of anatomical and electrophysiological data; Ripple mapping capability |
| | EnSite Precision (Abbott) [2] [11] | High-density automated mapping | Advisor HD Grid compatibility; Wavefront direction analysis |
| | Rhythmia (Boston Scientific) [11] | Ultra-high-density mapping | Automatic signal annotation; Lumipoint algorithm |
| Mapping Catheters | PentaRay (Biosense Webster) [8] [9] | High-resolution substrate mapping | 2-6-2 mm electrodes; Multiple splines for comprehensive coverage |
| | Advisor HD Grid (Abbott) [2] [11] | Direction-agnostic mapping | 16 electrodes in 4x4 configuration; 3 mm interelectrode spacing |
| | Octaray (Biosense Webster) [11] | High-density activation mapping | 2-5 mm interelectrode spacing; 48 electrodes total |
| Computational Modeling Tools | openCARP [7] | Digital twin creation and simulation | Open-source platform for cardiac electrophysiology simulation |
| | MATLAB with Custom GUI [9] | Electrogram analysis and annotation | Development of specialized interfaces for signal classification |
| Imaging Modalities | 3T/1.5T Cardiac MRI [7] | Preprocedural scar characterization | Late gadolinium enhancement for scar visualization |
| | Intracardiac Echocardiography [12] | Real-time anatomical guidance | Identification of anatomical structures during ablation |

Discussion and Future Directions

The characterization of VT substrate heterogeneity relative to infarct location represents a critical frontier in personalizing ablation therapy for ventricular arrhythmias. Our analysis demonstrates that while conventional bipolar voltage mapping remains the established standard for substrate assessment, emerging technologies each offer distinct advantages for specific aspects of substrate characterization. Heart digital twins provide unparalleled capability for preprocedural planning and non-invasive identification of VT circuits, achieving sensitivity of 81.3% and specificity of 83.8% for detecting critical VT sites [7]. However, their current limitations in spatial resolution (κ coefficient of 0.46 for agreement with clinical VT sites) and computational demands present barriers to widespread clinical implementation [7].
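Agreement statistics such as the κ coefficient correct the raw agreement rate for agreement expected by chance. The sketch below computes Cohen's kappa for two sets of paired labels, assuming this is the statistic reported in [7], which the text does not state explicitly; the function name and the toy segment labels are hypothetical.

```python
def cohens_kappa(rater_a, rater_b):
    """Cohen's kappa for two equal-length sequences of categorical labels."""
    n = len(rater_a)
    categories = set(rater_a) | set(rater_b)
    observed = sum(a == b for a, b in zip(rater_a, rater_b)) / n
    expected = sum(
        (rater_a.count(c) / n) * (rater_b.count(c) / n) for c in categories
    )
    # Undefined when expected agreement is 1 (both raters use a single category).
    return (observed - expected) / (1 - expected)


# Hypothetical per-segment calls: 1 = VT site present, 0 = absent.
predicted = [1, 1, 0, 0, 1, 0, 0, 0]
clinical = [1, 0, 0, 0, 1, 0, 1, 0]
print(round(cohens_kappa(predicted, clinical), 3))
```

On the commonly used Landis and Koch scale, values between 0.41 and 0.60 are described as moderate agreement, which matches the label attached to κ=0.46 in the comparison table.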

Machine learning approaches applied to electrogram analysis demonstrate robust performance in automated identification of arrhythmogenic sites, with ensemble tree classifiers achieving 93% accuracy in cross-validation and 84% in leave-one-subject-out validation [9]. The random forest model applied to unipolar signals from sinus rhythm maps provided an AUC of 0.821 with sensitivity of 81.4% and specificity of 71.4% [2]. These approaches show particular promise for reducing operator dependence and procedural time, though they remain limited by dataset sizes and need for broader clinical validation.
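The gap between the 93% cross-validation accuracy and the 84% leave-one-subject-out accuracy is what one would expect when electrograms from the same subject appear in both training and test folds of an ordinary cross-validation. Leave-one-subject-out splitting removes that overlap. A minimal sketch of the split generator follows; the function name and subject identifiers are hypothetical, and classifier training is omitted.

```python
def leave_one_subject_out(subject_ids):
    """Yield (held_out_subject, train_indices, test_indices) for each subject."""
    for subject in sorted(set(subject_ids)):
        test = [i for i, s in enumerate(subject_ids) if s == subject]
        train = [i for i, s in enumerate(subject_ids) if s != subject]
        yield subject, train, test


# Hypothetical mapping of 8 electrograms to 3 subjects.
egm_subjects = ["A", "A", "A", "B", "B", "C", "C", "C"]
splits = list(leave_one_subject_out(egm_subjects))
for subject, train_idx, test_idx in splits:
    print(subject, "train:", train_idx, "test:", test_idx)
```

Each subject is held out exactly once, so the averaged accuracy estimates performance on a patient the model has never seen.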

The impact of infarct location on substrate characterization efficacy is evident across all technologies. High-density mapping with multipolar catheters has demonstrated remarkable success in addressing the challenges of complex infarct geometries, with one study reporting 97% freedom from device-detected therapies over mean follow-up of 372 days when using the Advisor HD Grid catheter [11]. This represents a substantial improvement over conventional point-by-point mapping (33% freedom from therapies) and even PentaRay mapping (64% freedom from therapies) [11]. The superior performance of high-density mapping in these scenarios highlights the critical importance of mapping resolution and density for accurately characterizing the complex substrate heterogeneity associated with different infarct locations.

Future research directions should focus on integrating multiple complementary technologies into unified platforms that leverage the strengths of each approach. The combination of digital twin preprocedural planning with high-density functional mapping and machine learning-based electrogram classification represents a promising pathway toward comprehensive substrate characterization. Additionally, further investigation is needed to develop infarct location-specific algorithms that optimize mapping and ablation strategies based on the unique characteristics of anterior, inferior, septal, and lateral infarcts. As these technologies continue to evolve and are validated in larger clinical trials, their integration into clinical practice promises to significantly improve outcomes for patients with scar-related ventricular tachycardia.

Ventricular tachycardia (VT) is a life-threatening cardiac condition, and catheter ablation remains a cornerstone of its treatment. However, the procedure is plagued by high recurrence rates, often exceeding 50% within one year post-procedure, primarily due to the difficulty in accurately locating critical sites responsible for arrhythmogenesis [13]. The clinical workflow for VT ablation encompasses two critical phases: pre-procedural planning and intra-operative guidance. Traditionally, both phases have relied heavily on electrophysiologists' expertise and conventional substrate mapping techniques, which often depend on single-parameter analysis such as low-voltage areas or delayed potentials [14].

The emergence of machine learning (ML) models is poised to redefine this workflow. These computational approaches offer the potential to extract hidden patterns from complex electrophysiological data, enabling more precise identification of ablation targets. This guide provides an objective comparison of traditional workflows against novel ML-based approaches, with a specific focus on the validation of ML models for VT ablation surgery research. We present structured experimental data and detailed methodologies to equip researchers and scientists with the analytical framework necessary to evaluate these emerging technologies.

Workflow Comparison: Traditional vs. ML-Augmented Approaches

The standard clinical workflow for VT ablation and the emerging ML-augmented alternative represent two distinct paradigms in procedural planning and execution. The table below systematically compares their characteristics across key stages of the procedure.

Table 1: Comparison of Traditional and ML-Augmented VT Ablation Workflows

| Workflow Stage | Traditional Workflow | ML-Augmented Workflow | Key Differentiators |
|---|---|---|---|
| Pre-procedural Planning | Analysis of pre-operative MRI/CT scans; manual review of electroanatomic maps (EAM); subjective identification of low-voltage zones and abnormal potentials. | Automated analysis of EAMs using ML models; extraction of multi-domain features from intracardiac electrograms (EGMs); data-driven prediction of critical sites. | Shift from subjective, single-parameter analysis to objective, multi-parametric prediction. |
| Target Identification | Relies on visual inspection of EAMs for scar and border zones; focal activation mapping during VT; pace mapping. | ML model (e.g., Random Forest) processes 46+ EGM features to classify and predict arrhythmogenic sites with a probabilistic output. | Moves beyond geometric and activation-based mapping to a feature-based, algorithmic classification. |
| Intra-operative Guidance | Real-time EAM creation; fluoroscopic/electroanatomic navigation; manual annotation of ablation lesions. | Real-time visualization of ML-predicted targets overlaid on the EAM; potential for dynamic updates based on new data points. | Provides a quantitative, continuously updated roadmap, potentially reducing subjective interpretation during the procedure. |
| Post-procedural Validation | Acute procedural success defined by non-inducibility of VT; long-term follow-up for recurrence via Holter monitoring. | Correlation of ML-predicted ablation sites with acute termination sites and long-term clinical outcomes; model refinement based on recurrence data. | Enables a feedback loop for model validation and improvement, linking specific mapped features to clinical success. |

Performance Data: Quantitative Comparison of Mapping Strategies

The efficacy of a mapping and ablation strategy is ultimately quantified by its accuracy and predictive power. The following table summarizes key performance metrics from recent studies, comparing traditional substrate mapping with the novel multi-feature machine learning approach.

Table 2: Quantitative Performance Metrics of Target Identification Strategies

| Mapping Strategy | AUC (Area Under the Curve) | Sensitivity | Specificity | Key Predictive Features | Validation Model |
|---|---|---|---|---|---|
| Traditional Low-Voltage Mapping | 0.67 [14] | Not Specified | Not Specified | Bipolar/Unipolar Voltage | Chronic MI Porcine Model |
| ML-Based Multi-Feature Mapping (Random Forest) | 0.821 [14] [2] | 81.4% [13] [2] | 71.4% [13] [2] | Repolarization Time (RT), High-Frequency Components (R120-160), Spatial Repolarization Heterogeneity (GradARI) [14] | Chronic MI Porcine Model |
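
To make the three metrics in the table concrete, the following minimal sketch computes sensitivity, specificity, and AUC from labels and model scores. The labels, scores, and the 0.5 decision threshold are hypothetical illustration values, not data from the cited studies, and the function names are placeholders.

```python
def sensitivity_specificity(y_true, y_pred):
    """Return (sensitivity, specificity) for binary labels coded 1 (target) and 0."""
    tp = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 1)
    fn = sum(1 for t, p in zip(y_true, y_pred) if t == 1 and p == 0)
    tn = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 0)
    fp = sum(1 for t, p in zip(y_true, y_pred) if t == 0 and p == 1)
    return tp / (tp + fn), tn / (tn + fp)


def auc_from_scores(y_true, scores):
    """AUC as the probability that a random positive outscores a random negative.

    Ties count as half a win (the Mann-Whitney formulation of the ROC area).
    """
    pos = [s for t, s in zip(y_true, scores) if t == 1]
    neg = [s for t, s in zip(y_true, scores) if t == 0]
    wins = sum(1.0 if p > n else 0.5 if p == n else 0.0 for p in pos for n in neg)
    return wins / (len(pos) * len(neg))


# Hypothetical labels and model probabilities, for illustration only.
y_true = [1, 1, 1, 0, 0, 0, 0]
scores = [0.9, 0.7, 0.4, 0.6, 0.3, 0.2, 0.1]
y_pred = [1 if s >= 0.5 else 0 for s in scores]  # assumed threshold of 0.5

sens, spec = sensitivity_specificity(y_true, y_pred)
auc = auc_from_scores(y_true, scores)
print(f"sensitivity={sens:.3f} specificity={spec:.3f} AUC={auc:.3f}")
```

Sensitivity and specificity depend on the chosen threshold, whereas AUC summarizes ranking quality across all thresholds, which is why the two kinds of metric are reported side by side.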

Experimental Protocols for ML Model Validation

A critical understanding of ML model performance requires a detailed examination of the experimental methodologies used for their development and validation. The following section outlines the core protocols from a seminal study in the field.

Data Acquisition and Pre-processing

- Animal Model: The protocol was developed and validated in a chronic myocardial infarction (MI) porcine model (n=13), chosen for its physiological similarity to human cardiac size and function [14] [13] [2].

- Electrophysiological Data Collection: Fifty-six substrate maps were acquired using a high-density multipolar catheter (Advisor HD Grid) with the EnSite Precision mapping system. Data included 35,068 intracardiac electrograms (EGMs) recorded during sinus rhythm and pacing from multiple sites (left, right, and biventricular) [14] [2].

- Ground Truth Definition: Ventricular tachycardia was induced in all subjects, leading to the mapping and precise localization of 36 VT circuits. Critical sites within the circuit (e.g., exhibiting early, mid, or late diastolic components) were identified. Mapping points within a 6 mm radius of these sites were defined as positive samples (potential ablation targets) for the ML model [2].
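
The distance-based labeling rule in the ground truth definition can be sketched as follows. This is only an illustration of the 6 mm criterion described above: the coordinate values, the function name `label_targets`, and the use of straight-line (Euclidean) distance in millimetres are assumptions, since the study's exact distance computation is not described here.

```python
import math


def label_targets(mapping_points, critical_sites, radius_mm=6.0):
    """Label each mapping point 1 if it lies within radius_mm of any critical site."""
    labels = []
    for point in mapping_points:
        # math.dist gives the Euclidean distance between two coordinate tuples.
        near_critical_site = any(
            math.dist(point, site) <= radius_mm for site in critical_sites
        )
        labels.append(1 if near_critical_site else 0)
    return labels


# Hypothetical 3D coordinates in millimetres, for illustration only.
critical_sites = [(0.0, 0.0, 0.0)]
mapping_points = [(0.0, 0.0, 0.0), (3.0, 4.0, 0.0), (10.0, 0.0, 0.0)]
labels = label_targets(mapping_points, critical_sites)
print(labels)
```

Because only points close to a critical site are labeled positive, the resulting dataset is typically imbalanced, with far more non-target than target points.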

Feature Engineering and Model Training

- Feature Extraction: A custom MATLAB algorithm was used to extract 46 distinct features from each bipolar and unipolar EGM signal. These features spanned multiple domains [14]:

- Functional: Activation time (AT), repolarization time (RT).

- Spatial: Repolarization dispersion (GradARI).

- Spectral & Time-Frequency: Central frequency (fU), signal energy in specific bands (e.g., E0-160, R120-160).

- Model Development: Several machine learning models were trained and evaluated. The Random Forest classifier, an ensemble learning method, demonstrated superior performance, particularly when trained on unipolar EGM signals from sinus rhythm maps [14] [2]. Unipolar signals are thought to provide a more comprehensive picture by capturing both local and far-field electrical activity [14].
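
As a rough illustration of the spectral features listed above, the sketch below computes band energies and a power-weighted central frequency from a discrete Fourier transform. It is not the custom MATLAB algorithm from the study. The feature definitions are assumptions made for illustration: E0-160 is taken as the spectral energy between 0 and 160 Hz, R120-160 as the share of that energy lying between 120 and 160 Hz, and the central frequency as the power-weighted mean frequency. The sampling rate and test signals are hypothetical.

```python
import cmath
import math


def power_spectrum(signal, fs):
    """One-sided power spectrum via a direct DFT (adequate for short signals)."""
    n = len(signal)
    freqs, power = [], []
    for k in range(n // 2 + 1):
        coeff = sum(x * cmath.exp(-2j * math.pi * k * i / n) for i, x in enumerate(signal))
        freqs.append(k * fs / n)
        power.append(abs(coeff) ** 2)
    return freqs, power


def band_energy(freqs, power, lo_hz, hi_hz):
    """Sum of spectral power for frequencies in [lo_hz, hi_hz]."""
    return sum(p for f, p in zip(freqs, power) if lo_hz <= f <= hi_hz)


def spectral_features(signal, fs):
    """Assumed definitions of E0-160, R120-160, and central frequency."""
    freqs, power = power_spectrum(signal, fs)
    e_0_160 = band_energy(freqs, power, 0.0, 160.0)
    e_120_160 = band_energy(freqs, power, 120.0, 160.0)
    central = sum(f * p for f, p in zip(freqs, power)) / sum(power)
    return {"E0_160": e_0_160, "R120_160": e_120_160 / e_0_160, "central_frequency": central}


# Hypothetical test signals: 0.2 s sampled at 1000 Hz.
fs = 1000
t = [i / fs for i in range(200)]
high = [math.sin(2 * math.pi * 140 * ti) for ti in t]
mixed = [math.sin(2 * math.pi * 50 * ti) + math.sin(2 * math.pi * 140 * ti) for ti in t]

features_high = spectral_features(high, fs)
features_mixed = spectral_features(mixed, fs)
print(features_high["R120_160"], features_mixed["R120_160"])
```

A signal dominated by 140 Hz content yields a ratio near 1, while equal-amplitude 50 Hz and 140 Hz components yield a ratio near 0.5, which shows how such a feature separates electrograms with and without high-frequency content.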

The workflow for this experimental protocol is visualized below.

For researchers aiming to replicate or build upon this work, the following table details key materials and computational tools referenced in the foundational studies.

Table 3: Essential Research Reagents and Solutions for VT Ablation ML Research

| Item | Specification / Function | Experimental Role |
|---|---|---|
| Chronic Myocardial Infarction Porcine Model | Large animal model with induced MI to simulate human ischemic cardiomyopathy and VT substrate. | Provides a physiologically relevant platform for data acquisition and model validation [14] [2]. |
| High-Density Grid Catheter | Advisor HD Grid Catheter (e.g., 16 electrodes). | Enables high-resolution, simultaneous acquisition of intracardiac electrograms from multiple vectors for detailed substrate mapping [14] [2]. |
| Electroanatomic Mapping System | EnSite Precision or comparable system. | Provides the platform for 3D spatial localization of mapping points, signal recording, and visualization of substrate maps [2]. |
| Custom MATLAB Algorithm | Algorithm for extracting 46 multi-domain features from EGM signals. | Converts raw EGM signals into a structured feature set that serves as the input for machine learning models [14]. |
| Machine Learning Algorithms | Random Forest, Logistic Regression, etc. (via Scikit-learn, R, or similar). | Classifies mapping points as targets or non-targets based on the input features; Random Forest demonstrated top performance in initial studies [14] [2] [15]. |

Analytical Framework and Future Directions

The logical relationship between EGM features, the ML model, and the clinical outcome is central to understanding this technology. The following diagram illustrates this pathway and its potential future evolution.

Future developments in ML for VT ablation are likely to focus on the integration of AI with Digital Twin technology, creating patient-specific virtual heart models that incorporate scar anatomy, fiber orientation, and simulated electrical propagation to refine target prediction beyond statistical correlations [14]. Furthermore, 5G technology may facilitate real-time remote collaboration and guidance, potentially standardizing and democratizing expert-level procedural planning and support [16]. As these models evolve, a critical focus will remain on rigorous validation in human randomized controlled trials and on integrating these computational tools into existing clinical workflows, so that they augment rather than disrupt the electrophysiologist's decision-making process.

In the field of ventricular tachycardia (VT) ablation, the precise definition of a "successful ablation site" is the cornerstone for developing and validating new targeting technologies, particularly machine learning (ML) models. The gold standard serves as the fundamental ground truth against which the performance of all predictive algorithms is measured. However, establishing this standard is complex, as it is not a single entity but a concept defined through a convergence of evidence from various mapping techniques and procedural outcomes. This guide provides a comparative analysis of the methodologies and technologies used to define and target these critical sites, framing the discussion within the broader need for robust validation in computational research.

Comparative Analysis of Ground-Truth Definitions

The definition of a successful ablation site varies significantly depending on the mapping strategy and technological approach employed. The table below synthesizes the performance data and defining characteristics of the primary methods used in contemporary practice and research.

Table 1: Comparative Performance of Ablation Target Localization Methods

| Method / Technology | Key Defining Metric for Success | Reported Performance/Accuracy | Primary Clinical Context | Key Limitations |
|---|---|---|---|---|
| In-Silico Pace-Mapping [17] | Distance between computed pacing site and visual exit site (ground truth). | High-Res Scar: 7.3 ± 7.0 mm; Low-Res Scar: 8.5 ± 6.5 mm; No-Scar: 13.3 ± 12.2 mm | Pre-procedural planning in patient-specific computational models. | Relies on the accuracy of the underlying heart model and scar reconstruction. |
| Machine Learning (Random Forest on EGMs) [2] | Automated localization of VT critical sites based on electrogram features. | AUC: 0.821; Sensitivity: 81.4%; Specificity: 71.4% | Intra-procedural target identification from substrate maps in a porcine model. | Model trained and validated in an animal model; requires human clinical validation. |
| Entrainment Mapping [18] | Concealed fusion with PPI - TCL < 30 ms and S-QRS < 50% of TCL. | Success rates up to 70% for RF ablation at defined sites. | Intra-procedural mapping of hemodynamically stable, reentrant VT. | Infeasible for unstable VT; prone to confusion from bystander sites. |
| Pulsed Field Ablation (PFA) - VCAS Trial [19] | Freedom from VT recurrence at follow-up. | 78% freedom from VT. | Treatment of scar-related VT with a novel contact-force PFA system. | Early-stage data (first-in-human trial); two of 22 patients had significant worsening of heart failure. |
| Activation Mapping [18] | Identification of the earliest presystolic electrogram preceding the QRS complex (for focal VT) or the critical isthmus (for reentry). | N/A (Qualitative assessment) | Intra-procedural mapping of hemodynamically stable VT. | Feasibility can be as low as 10-30% due to VT instability. |
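
The entrainment criteria in the table can be written as a simple rule check. The sketch below encodes exactly the thresholds quoted above (concealed fusion, PPI minus TCL below 30 ms, and S-QRS below 50% of TCL). The function name and the example measurements are hypothetical, and real-world classification of entrainment sites involves further clinical judgment that is not modelled here.

```python
def meets_entrainment_criteria(concealed_fusion, ppi_ms, tcl_ms, s_qrs_ms):
    """Check the entrainment-mapping criteria as quoted in the table above.

    concealed_fusion: True if pacing entrains the VT with concealed fusion.
    ppi_ms: post-pacing interval in milliseconds.
    tcl_ms: tachycardia cycle length in milliseconds.
    s_qrs_ms: stimulus-to-QRS interval in milliseconds.
    """
    ppi_close_to_tcl = (ppi_ms - tcl_ms) < 30
    short_s_qrs = s_qrs_ms < 0.5 * tcl_ms
    return bool(concealed_fusion and ppi_close_to_tcl and short_s_qrs)


# Hypothetical measurements, for illustration only.
candidate_site = meets_entrainment_criteria(True, ppi_ms=430, tcl_ms=410, s_qrs_ms=150)
bystander_like = meets_entrainment_criteria(True, ppi_ms=450, tcl_ms=410, s_qrs_ms=150)
print(candidate_site, bystander_like)
```

Encoding the criteria explicitly is one way to produce reproducible ground-truth labels from procedural annotations when they are used to train or validate an ML model.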

Detailed Experimental Protocols

To ensure the reproducibility of ML validation studies, a clear understanding of the experimental protocols used to establish ground truth is essential. The following section details the methodologies from key cited works.

Protocol 1: In-Silico Pace-Mapping for VT Exit Site Localization

This protocol outlines a computational method for identifying VT exit sites, which can serve as a pre-procedural, non-invasive ground truth [17].

- Objective: To investigate how the anatomical detail of scar reconstructions within computational heart models influences the ability of in-silico pace mapping to identify VT origins.

- Materials: Patient-specific heart models were reconstructed from high-resolution contrast-enhanced cardiac magnetic resonance (CMR) from 15 patients.

- Workflow:

- Model Creation & VT Simulation: Patient-specific models were created from CMR. VT was simulated in these high-resolution models.

- Scar Alteration: The scar anatomy in the models was altered to mimic low-quality imaging and the absence of scar data.

- Pace-Mapping Simulation: The ECG of each simulated VT was used as input. The models were then paced from 1,000 random sites surrounding the infarct.

- Correlation & Accuracy Assessment: Correlations between the VT and paced ECGs were computed. The accuracy was assessed by measuring the distance (d) between visually identified exit sites (ground truth) and the pacing locations with the strongest correlation.
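
The correlation and accuracy steps of this workflow can be sketched as below. This is a simplified illustration, not the cited study's pipeline: each ECG is assumed to be a flat list of samples (for example, all leads concatenated), Pearson correlation is assumed as the similarity measure, and the site coordinates and signals are hypothetical.

```python
import math


def pearson(a, b):
    """Pearson correlation between two equal-length sample lists."""
    mean_a = sum(a) / len(a)
    mean_b = sum(b) / len(b)
    cov = sum((x - mean_a) * (y - mean_b) for x, y in zip(a, b))
    var_a = sum((x - mean_a) ** 2 for x in a)
    var_b = sum((y - mean_b) ** 2 for y in b)
    if var_a == 0 or var_b == 0:
        return 0.0  # a flat signal carries no morphology information
    return cov / math.sqrt(var_a * var_b)


def best_pacing_site(vt_ecg, paced_sites):
    """Return (site, correlation) for the paced ECG that best matches the VT ECG.

    paced_sites is a list of (xyz_coordinates_mm, paced_ecg) pairs.
    """
    scored = [(site, pearson(vt_ecg, ecg)) for site, ecg in paced_sites]
    return max(scored, key=lambda item: item[1])


# Hypothetical signals and coordinates (mm), for illustration only.
vt_ecg = [0, 1, 2, 1, 0, -1]
paced_sites = [
    ((0.0, 0.0, 0.0), [0, 2, 4, 2, 0, -2]),   # same morphology, larger amplitude
    ((10.0, 0.0, 0.0), [1, 0, -1, 0, 1, 2]),  # inverted morphology
]
exit_site = (3.0, 4.0, 0.0)  # visually identified ground truth

site, corr = best_pacing_site(vt_ecg, paced_sites)
d = math.dist(site, exit_site)  # localization error, the distance d in the protocol
print(site, round(corr, 3), d)
```

Because correlation is insensitive to amplitude scaling, the first site matches the VT morphology perfectly even though its signal is twice as large.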

Protocol 2: Machine Learning for Ablation Target Localization from Substrate Maps

This protocol describes the development of an ML model that uses electrogram features to localize VT critical sites in a pre-clinical animal model [2].

- Objective: To propose a machine learning approach for improved identification of ablation targets based on intracardiac electrograms (EGMs) features.

- Materials: 13 pigs with chronic myocardial infarction; Advisor HD grid multipolar catheter with EnSite Precision mapping system.

- Workflow:

- Data Acquisition: 56 substrate maps and 35,068 EGMs were collected during sinus rhythm and pacing.

- Ground Truth Definition: 36 VTs were induced and mapped. Sites within 6 mm of a confirmed critical site (e.g., early, mid, or late diastolic components of the circuit) were labeled as positive ablation targets.

- Feature Extraction: Forty-six signal features representing functional, spatial, spectral, and time-frequency properties were computed from each bipolar and unipolar EGM.

- Model Training & Validation: Several machine learning models were trained to classify sites as targets or non-targets. The random forest model was identified as the best performer and validated.

Protocol 3: VT Simulation-Guided Transcoronary Ethanol Ablation

This case report protocol illustrates a hybrid approach using simulation to plan an alternative ablation strategy when conventional approaches fail [20].

- Objective: To utilize VT simulation to identify an epicardial re-entry circuit and guide a transcoronary venous ethanol ablation.

- Materials: Late gadolinium-enhanced cardiac magnetic resonance (LGE-CMR) imaging; mapping and ablation catheters; equipment for coronary venography and ethanol infusion.

- Workflow:

- Simulation: A patient-specific heart model was constructed from LGE-CMR. The simulation identified a sustained epicardial re-entry in the apical region that was not accessible via standard endocardial ablation.

- Endocardial Ablation Attempt: Conventional endocardial mapping and radiofrequency ablation were attempted but failed to eliminate the VT.

- Coronary Venous Mapping & Ethanol Ablation: The coronary venous system was mapped. A branch coursing through the simulated epicardial target region was identified. Ethanol was infused into this branch, resulting in the disappearance of premature beats and non-inducibility of VT.

The following workflow diagram synthesizes the key steps from these experimental protocols, highlighting the role of computational and mapping data in defining the ablation target.

The Scientist's Toolkit: Essential Research Reagents & Materials

For researchers designing experiments to validate new ML models or ablation technologies, the following table catalogues critical tools and their functions as derived from the analyzed studies.

Table 2: Key Research Reagent Solutions for VT Ablation Studies

| Tool / Technology | Function in Research | Example Use Case |
|---|---|---|
| Late Gadolinium-Enhanced CMR (LGE-CMR) | Provides high-resolution 3D scar anatomy, differentiating core scar from border zone. | Reconstruction of patient-specific computational models for VT simulation [17] [20]. |
| Multipolar Mapping Catheter (e.g., Advisor HD Grid) | High-density acquisition of intracardiac electrograms (EGMs) for substrate characterization. | Collecting EGM signal features for machine learning model training [2]. |
| 3D Electroanatomic Mapping System (EAM) | Integrates electrical data with anatomical geometry to create a 3D substrate map. | Core platform for intra-procedural mapping and annotation of ground truth sites [2] [18]. |
| Computational Modeling & Simulation Software | Enables in-silico testing of arrhythmia mechanisms and ablation strategies without patient risk. | Assessing the robustness of pace-mapping to image quality [17] and planning ablation [20]. |
| Pulsed Field Ablation (PFA) System | A non-thermal ablation energy source that may create more predictable, full-thickness lesions. | Evaluating a new technology's efficacy in treating scar-related VT (e.g., VCAS Trial) [19]. |


Establishing the gold standard for successful VT ablation sites is a multi-faceted process. No single method operates in isolation; rather, the most reliable ground truth emerges from the convergence of pre-procedural computational simulations, intra-procedural mapping data (activation, pace, and entrainment), and acute procedural outcomes. As novel technologies like machine learning and pulsed field ablation continue to evolve, their validation will depend on a critical comparison against this composite standard. The experimental protocols and tools detailed in this guide provide a framework for researchers to rigorously assess new targeting strategies, ultimately accelerating the development of more effective and personalized therapies for ventricular tachycardia.

Methodological Pipeline: Building and Applying ML Models for VT Ablation

Validating machine learning models for ventricular tachycardia (VT) ablation research is a critical frontier in precision cardiology. Accurately predicting patient-specific risks and outcomes, such as procedural success, recurrence of arrhythmias, or long-term complications, is essential for improving clinical decision-making. This guide provides a structured, objective comparison of common machine learning algorithms, from the foundational logistic regression to advanced ensembles like XGBoost and LightGBM, within this specific clinical context. We summarize quantitative performance data from recent studies, detail experimental protocols, and provide visual resources to inform researchers and clinicians in their model selection process.

Performance Benchmarking in VT Ablation Research

The selection of an optimal algorithm is contingent on the specific clinical endpoint. The following tables consolidate performance metrics from recent studies, providing a direct comparison of logistic regression, decision trees, random forest, XGBoost, and LightGBM.

Table 1: Benchmarking Model Performance for Various Cardiovascular Endpoints

| Clinical Endpoint | Best Performing Model(s) | Key Performance Metrics (AUROC) | Comparative Model Performance |
|---|---|---|---|
| 3-Year Heart Failure (Post-PVC Ablation) | LightGBM [1] | 0.822 (with ROSE) | LightGBM > XGBoost > Random Forest > Logistic Regression > Decision Tree |
| 3-Year Mortality (Post-PVC Ablation) | Logistic Regression, LightGBM [1] | 0.886, 0.882 (both with ROSE) | Logistic Regression ≈ LightGBM > XGBoost > Random Forest > Decision Tree |
| Malignant Ventricular Arrhythmia (MVA) (Post-AMI) | LightGBM [21] | 0.827 (Internal Validation) | LightGBM > XGBoost > Random Forest |
| In-Hospital Death (Post-AMI) | Random Forest [21] | 0.784 (Internal Validation) | Random Forest > XGBoost > LightGBM |
| Atrial Fibrillation Recurrence (Post-Ablation) | LightGBM [22] | 0.848 (Testing Set) | LightGBM > SVM > AdaBoost > Gradient Boosting |
| Etiological Diagnosis of VT | XGBoost [23] | Precision: 88.4%, Recall: 88.5%, F1: 88.4% | XGBoost > Other Models Tested |

Table 2: Architectural and Practical Comparison of XGBoost and LightGBM

| Aspect | XGBoost | LightGBM |
|---|---|---|
| Tree Growth Strategy | Level-wise (grows all nodes at one depth before moving deeper) [24] [25] | Leaf-wise (best-first; splits the leaf with the largest loss reduction, which can yield deep, unbalanced trees) [24] [25] |
| Handling of Categorical Features | Traditionally requires pre-processing (e.g., one-hot encoding) [25]; recent releases also offer native support via enable_categorical | Native support (can specify categorical columns) [25] |
| Computational Efficiency | Slower training speed on large datasets, more memory-intensive [24] [25] | Faster training speed, lower memory usage [24] [25] |
| Overfitting Tendency | More robust on smaller datasets due to level-wise growth [24] | Can overfit on small datasets; controlled with max_depth, num_leaves, and min_data_in_leaf [24] [25] |
| Ideal Use Case | Smaller datasets, high-stakes scenarios requiring model robustness [24] | Large-scale datasets, high-dimensional/sparse data, rapid prototyping [24] |

Detailed Experimental Protocols from Cited Studies

To ensure reproducible and clinically relevant model validation, the following methodologies are commonly employed in the field.

Data Preprocessing and Class Imbalance Handling

Cardiovascular outcome datasets often suffer from class imbalance (e.g., few patients experience mortality). To address this, studies apply resampling techniques within the cross-validation framework, so that performance estimates are not biased by resampled data [1] [22].

- Synthetic Minority Over-sampling Technique (SMOTE): Generates synthetic examples of the minority class in the feature space [1] [22].

- Random Over-Sampling Examples (ROSE): Creates a smoothed bootstrap sample by generating new cases from a kernel density estimate of the classes [1].
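
The idea behind SMOTE-style oversampling can be sketched in a few lines. The code below is a simplified, assumption-laden illustration rather than the reference SMOTE or ROSE implementation used in the cited studies: it interpolates between a minority sample and one of its nearest minority neighbours, and it is meant to be applied to the training fold only, leaving the validation fold untouched. The function names, the choice of class 1 as the minority class, and the toy feature vectors are all hypothetical.

```python
import math
import random


def smote_like(minority, n_new, k=3, seed=0):
    """Create n_new synthetic points by interpolating between minority neighbours."""
    rng = random.Random(seed)
    synthetic = []
    for _ in range(n_new):
        i = rng.randrange(len(minority))
        base = minority[i]
        others = [m for j, m in enumerate(minority) if j != i]
        # Keep the k nearest minority-class neighbours of the base sample.
        neighbours = sorted(others, key=lambda m: math.dist(base, m))[:k]
        other = rng.choice(neighbours)
        gap = rng.random()  # position along the segment between base and neighbour
        synthetic.append(tuple(b + gap * (o - b) for b, o in zip(base, other)))
    return synthetic


def balance_training_fold(x_train, y_train, seed=0):
    """Oversample class 1 (assumed minority) until both classes are equal in size."""
    minority = [x for x, y in zip(x_train, y_train) if y == 1]
    majority_count = len(y_train) - len(minority)
    extra = smote_like(minority, max(0, majority_count - len(minority)), seed=seed)
    return list(x_train) + extra, list(y_train) + [1] * len(extra)


# Hypothetical two-feature training fold with 2 events and 6 non-events.
x_train = [(0.0, 0.0), (1.0, 1.0), (5.0, 5.0), (6.0, 5.0),
           (5.0, 6.0), (6.0, 6.0), (7.0, 7.0), (8.0, 8.0)]
y_train = [1, 1, 0, 0, 0, 0, 0, 0]
x_bal, y_bal = balance_training_fold(x_train, y_train)
print(len(x_bal), sum(y_bal))
```

Resampling before splitting the data would let synthetic copies of validation patients leak into training, which is the source of the biased estimates mentioned above.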

Model Training and Validation Framework

A robust validation strategy is essential for clinical machine learning models. Stratified five-fold cross-validation is a widely used approach [1] [21] [22].

- Cohort Splitting: The patient dataset is first randomly split into a training set (typically 70-80%) and a held-out testing set (20-30%). This split is often stratified to preserve the proportion of the outcome class in both sets [22].

- Cross-Validation on Training Set: The training set is further divided into five folds. The model is trained on four folds and validated on the remaining one; this process is repeated five times so that each fold serves as the validation set once. Performance metrics (e.g., AUC) are averaged over the five iterations [1].

- Hyperparameter Tuning: This is performed within the cross-validation loop to select the parameters that yield the best average validation performance.

- Final Evaluation: The final model, trained on the entire training set with the best parameters, is evaluated on the untouched testing set to report an unbiased estimate of its performance [22].
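
The cross-validation step above can be sketched with the standard library alone. This is a minimal illustration under stated assumptions, not the cited studies' code: `stratified_folds` and `cross_validate` are hypothetical helper names, and `fit_and_score` stands in for whatever model training and scoring routine is being benchmarked. In practice a library implementation such as scikit-learn's `StratifiedKFold` would normally be used instead.

```python
import random
from collections import defaultdict


def stratified_folds(labels, n_folds=5, seed=0):
    """Split sample indices into n_folds folds that preserve class proportions."""
    rng = random.Random(seed)
    by_class = defaultdict(list)
    for idx, label in enumerate(labels):
        by_class[label].append(idx)
    folds = [[] for _ in range(n_folds)]
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal each class round-robin so every fold gets a similar share.
        for position, idx in enumerate(indices):
            folds[position % n_folds].append(idx)
    return folds


def cross_validate(labels, fit_and_score, n_folds=5, seed=0):
    """Average a validation score over folds; each fold is held out exactly once."""
    folds = stratified_folds(labels, n_folds, seed)
    scores = []
    for k, val_idx in enumerate(folds):
        train_idx = [i for j, fold in enumerate(folds) if j != k for i in fold]
        scores.append(fit_and_score(train_idx, val_idx))
    return sum(scores) / n_folds


# Hypothetical training cohort: 10 events among 50 patients.
labels = [1] * 10 + [0] * 40
folds = stratified_folds(labels)


def event_rate(train_idx, val_idx):
    # Placeholder "score": event rate in the validation fold.
    return sum(labels[i] for i in val_idx) / len(val_idx)


mean_rate = cross_validate(labels, event_rate)
print([len(f) for f in folds], mean_rate)
```

Each fold here contains two events and eight non-events, matching the 20% event rate of the full training cohort, which is the property that stratification is meant to guarantee.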

Model Interpretability and Clinical Explanation

For clinical adoption, model predictions must be interpretable. SHapley Additive exPlanations (SHAP) is the dominant method used to quantify the contribution of each feature to an individual prediction, aligning model outputs with clinical knowledge [1] [26] [22]. For example, age, prior heart failure, and specific comorbidities such as malignancy and end-stage renal disease have been identified as the most influential predictors of long-term heart failure risk after ablation, supporting the model's clinical face validity [1].
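
To show what a SHAP value represents, the sketch below computes exact Shapley values for a tiny, hypothetical risk model by enumerating all feature subsets. It does not use the SHAP library, whose explainers rely on faster model-specific or approximate algorithms. The toy linear model, its coefficients, the patient values, and the baseline are invented for illustration, and absent features are simply replaced by baseline values, which is one common convention.

```python
from itertools import combinations
from math import factorial


def shapley_values(predict, x, baseline):
    """Exact Shapley attribution of predict(x) - predict(baseline) to each feature."""
    n = len(x)

    def value(subset):
        # Features in the subset take the patient's values; the rest stay at baseline.
        mixed = [x[i] if i in subset else baseline[i] for i in range(n)]
        return predict(mixed)

    attributions = []
    for i in range(n):
        others = [j for j in range(n) if j != i]
        phi = 0.0
        for size in range(n):
            weight = factorial(size) * factorial(n - size - 1) / factorial(n)
            for subset in combinations(others, size):
                s = set(subset)
                phi += weight * (value(s | {i}) - value(s))
        attributions.append(phi)
    return attributions


def toy_risk_score(features):
    # Hypothetical linear score over (age, prior heart failure, ESRD).
    age, prior_hf, esrd = features
    return 0.02 * age + 0.5 * prior_hf + 0.3 * esrd


patient = [70, 1, 0]    # hypothetical patient
baseline = [60, 0, 0]   # hypothetical reference patient
phi = shapley_values(toy_risk_score, patient, baseline)
print(phi)
```

The attributions sum to the difference between the patient's score and the baseline score, which is the additivity property that makes SHAP outputs straightforward to present alongside an individual prediction.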

Workflow Diagram for Model Benchmarking

The following diagram illustrates the logical workflow for benchmarking machine learning algorithms in clinical research, from data preparation to model selection.

Building and validating machine learning models for clinical research requires a suite of computational and data resources.

Table 3: Essential Research Reagents and Solutions

| Tool/Resource | Function/Benefit | Example Use in Context |
|---|---|---|
| Structured Clinical Datasets | Provides labeled data for model training and testing. | Nationwide claims databases (e.g., NHIRD [1]) or single-center EHR data [23] with ICD codes for patient cohort identification. |
| SHAP (SHapley Additive exPlanations) | Explains model output by quantifying feature contribution for each prediction [26] [23]. | Identifies key predictors (e.g., BNP, NLR [22]) for VT recurrence, fostering clinical trust and model validation. |
| Synthetic Minority Over-sampling (SMOTE) | Addresses class imbalance by generating synthetic minority class samples [1] [22]. | Improves model sensitivity in predicting rare but critical events like mortality or malignant arrhythmias. |
| Stratified K-Fold Cross-Validation | Robust validation technique that preserves class distribution across folds [1] [21]. | Provides a reliable estimate of model generalizability and mitigates overfitting during algorithm benchmarking. |
| High-Performance Computing (GPU) | Accelerates the training process of computationally intensive ensemble models [24] [25]. | Speeds up iteration and hyperparameter tuning of XGBoost (tree_method='gpu_hist'; in XGBoost 2.0 and later, device='cuda' with tree_method='hist') and LightGBM (device='gpu'). |

The benchmarking data indicate that no single algorithm dominates all clinical prediction tasks in VT ablation research. LightGBM trains quickly and often leads in performance on large datasets when predicting heart failure and arrhythmia recurrence, while XGBoost performed best for the etiological diagnosis of VT. Notably, the transparent logistic regression baseline remains highly competitive for certain endpoints such as mortality prediction, especially when paired with resampling techniques. Algorithm selection should be guided by the specific clinical question, dataset size and structure, and the need for model interpretability. A rigorous, protocol-driven approach to validation and explanation is paramount for the successful translation of these models into clinical research and practice.

The management of ventricular tachycardia (VT) and premature ventricular complexes (PVCs) has entered a transformative phase with the integration of artificial intelligence (AI) and machine learning (ML) models. These computational approaches are revolutionizing the prediction of arrhythmia origins, recurrence risks post-ablation, and long-term clinical complications. For researchers and drug development professionals, understanding these key prediction tasks is critical for developing targeted therapies and improving patient stratification. ML models leverage complex electrophysiological data, imaging parameters, and clinical variables to generate predictive insights that surpass traditional statistical methods, offering unprecedented opportunities for personalized medicine in cardiology.

The validation of these ML models requires rigorous comparison against established diagnostic and prognostic methods. This guide provides a comprehensive comparison of model performances, experimental protocols, and essential research tools, framing the discussion within the broader thesis of ML model validation for VT ablation research. By objectively analyzing the data and methodologies, we aim to establish a framework for evaluating the clinical readiness and implementation potential of these emerging technologies.

Prediction Task I: Localizing Arrhythmia Origins

Accurately determining the anatomical origin of ventricular arrhythmias is fundamental for successful ablation therapy. Traditional approaches rely on electrocardiographic (ECG) characteristics and invasive mapping, but ML algorithms are demonstrating superior capabilities in processing complex spatial and signal data.

Electrocardiographic Predictors and Features

The 12-lead surface ECG remains the initial diagnostic tool for approximating VT/PVC origins. Specific features provide localization clues, particularly for arrhythmias originating from challenging regions like the left ventricular summit (LVS). Table 1 summarizes key ECG characteristics and their predictive values for localization.

Table 1: ECG Predictors for Localizing Ventricular Arrhythmia Origins

| Predictor | Anatomical Implication | Predictive Performance | Clinical Utility |
|---|---|---|---|
| Maximum Deflection Index (MDI) >0.54 [27] | Suggests epicardial origin | Sensitivity: ~71-81%; Specificity: ~71-81% [27] [2] | Differentiates epicardial from endocardial sites |
| Q-wave ratio in aVL/aVR >1.85 [27] | Indicates origin in accessible LVS area | Sensitivity: 100%; Specificity: 72% when combined with other criteria [27] | Guides decision for epicardial access |
| "Breakthrough pattern" in V2 [27] | Suggests septal origin near LAD | Not quantified | Identifies challenging sites near coronary arteries |
| Pseudodelta wave >34 ms [27] | Indicates epicardial origin | Not quantified | Supports epicardial origin hypothesis |
| R-wave ratio in V1/V2 [27] | Differentiates RVOT from LVOT origins | Not quantified | Distinguishes right/left outflow tracts |
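
As a worked example of the first predictor, the sketch below computes the maximum deflection index. It assumes the commonly used definition, which is not spelled out in this guide: the shortest time from QRS onset to the maximum deflection across the precordial leads, divided by the total QRS duration. The threshold of 0.54 is the one quoted in the table, and the measurements and function names are hypothetical.

```python
def maximum_deflection_index(times_to_max_deflection_ms, qrs_duration_ms):
    """MDI = shortest time to maximum deflection (any precordial lead) / QRS duration."""
    if qrs_duration_ms <= 0:
        raise ValueError("QRS duration must be positive")
    return min(times_to_max_deflection_ms) / qrs_duration_ms


def suggests_epicardial_origin(mdi, threshold=0.54):
    """Apply the MDI cut-off quoted in the table above."""
    return mdi > threshold


# Hypothetical measurements in milliseconds, for illustration only.
mdi = maximum_deflection_index([95, 90, 110], qrs_duration_ms=160)
print(mdi, suggests_epicardial_origin(mdi))
```

Hand-crafted indices like this one are natural candidate input features for an ML localization model, alongside the raw ECG or electrogram signals.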

Machine Learning Approaches for Automated Localization

ML models trained on intracardiac electrogram features can automatically identify ablation targets. A recent study developed and validated an ML approach for locating VT ablation targets from substrate maps in a porcine model of chronic myocardial infarction [2].

Experimental Protocol:

- Animal Model: Thirteen pigs with chronic myocardial infarction.

- Data Acquisition: Multipolar catheters (Advisor HD Grid, EnSite Precision) were used to collect 56 substrate maps and 35,068 intracardiac electrograms (EGMs) during sinus rhythm and pacing from multiple sites.

- Feature Extraction: Forty-six signal features representing functional, spatial, spectral, and time-frequency properties were computed from each bipolar and unipolar EGM.

- Ground Truth: Thirty-six VTs were localized and mapped with early, mid-, and late diastolic components of the circuit. Mapping sites within 6 mm from critical sites were labeled as potential ablation targets.

- Model Training: Several ML models were developed and compared, including random forest, logistic regression, and others.

The random forest classifier achieved the best performance using unipolar signals from sinus rhythm maps, with an area under the curve (AUC) of 0.821, sensitivity of 81.4%, and specificity of 71.4% [2]. This demonstrates the potential of ML to augment clinical decision-making during substrate-based ablation procedures.

Figure 1: Machine Learning Workflow for VT Localization. This diagram illustrates the experimental pipeline for developing ML models to localize VT ablation targets from substrate maps in a porcine model.

Prediction Task II: Estimating Recurrence Risk After Ablation

Predicting the likelihood of arrhythmia recurrence after catheter ablation is crucial for patient selection, follow-up planning, and clinical trial design. Recurrence rates vary significantly based on underlying cardiomyopathy, procedural success, and patient characteristics.

Recurrence Rates Across Patient Populations

Table 2 compares VT recurrence rates across different patient populations and ablation contexts, providing essential benchmarking data for model validation.

Table 2: VT/PVC Recurrence Rates After Catheter Ablation

| Patient Population | Recurrence Rate | Follow-up Duration | Predictors of Recurrence |
|---|---|---|---|
| Ischemic Cardiomyopathy (ICMP) [28] | 54.8% | 36 months | Older age, lower LVEF, more comorbidities, higher number of inducible VTs |
| Non-Ischemic Cardiomyopathy (NICMP) [28] | 38.9% | 36 months | Not specified (recurrence less frequent than in ICMP) |
| Pediatric PVCs [29] | 42.5% (persistent) | 45 months | Older age at onset, female sex |
| ICMP with first-line ablation [30] | 50.7% (composite endpoint) | 4.3 years | Not specified |
| ICMP with first-line AAD [30] | 60.6% (composite endpoint) | 4.3 years | Not specified |

Machine Learning for Recurrence Prediction

A dedicated study designed an ML model specifically to predict the recurrence of PVCs and idiopathic VT after radiofrequency catheter ablation [31]. Complete performance metrics are not reported here, but the study compares multiple ML approaches, including logistic regression (LR), decision trees (DT), support vector machines (SVM), multilayer perceptron (MLP), and extreme gradient boosting (XGBoost) [31]. This represents a direct application of ML to the recurrence prediction task, moving beyond traditional clinical factor analysis.

Prediction Task III: Forecasting Long-Term Complications

Beyond arrhythmia recurrence, predicting long-term clinical outcomes including mortality, cardiomyopathy development, and drug-related adverse events is essential for comprehensive risk assessment.

Mortality and Adverse Event Rates

Table 3 compares long-term outcomes after VT ablation and antiarrhythmic drug therapy, providing critical data for prognostic model validation.

Table 3: Long-Term Complications and Outcomes After VT Therapy

| Outcome Measure | ICM Patients | NICM Patients | Therapy Context |
|---|---|---|---|
| Overall Mortality [28] | 22% | 7% | VT ablation |
| Cardiac Mortality [28] | 19% | 6% | VT ablation |
| All-cause Death (Ablation) [30] | 22.2% | Not reported | First-line therapy |
| All-cause Death (AAD) [30] | 25.4% | Not reported | First-line therapy |
| PVC-Induced Cardiomyopathy Risk [27] | 12-15% over 1-2 years | Not specified | High PVC burden (>20%) |
| Major Bleeding (Ablation) [30] | 1% | Not reported | Procedure-related |
| Drug-Related Adverse Events [30] | 21.6% | Not reported | Amiodarone or sotalol |

PVC-Induced Cardiomyopathy Prediction

A high PVC burden is a recognized risk factor for developing cardiomyopathy. Studies report that 10-15% of patients with PVCs from the LVS develop PVC-induced cardiomyopathy, particularly with daily PVC burden exceeding 20% [27]. In pediatric populations, a high initial PVC burden (≥25%) is associated with persistent PVCs and potential ventricular dysfunction [29].

The VANISH2 trial provides crucial comparative data on first-line ablation versus antiarrhythmic drugs, demonstrating that catheter ablation reduces the composite endpoint of death, VT storm, appropriate ICD shock, or treated sustained VT (50.7% vs. 60.6%; HR, 0.75) compared to AAD therapy in ischemic cardiomyopathy patients [30].

Figure 2: PVC Complication Pathway and Outcomes. This diagram illustrates the progression from high PVC burden to cardiomyopathy and subsequent treatment outcomes.

The Scientist's Toolkit: Essential Research Reagents and Materials

Advancing research in VT/PVC prediction requires specialized tools and platforms. Table 4 catalogs essential research reagents and their applications in experimental protocols.

Table 4: Essential Research Reagents and Platforms for VT/PVC Research

| Reagent/Platform | Specification | Research Application |
|---|---|---|
| Multipolar Catheter [2] | Advisor HD Grid | High-density electrophysiological mapping |
| Electroanatomic Mapping System [2] | EnSite Precision | 3D reconstruction of cardiac geometry and substrate |
| Signal Processing Software [2] | Custom MATLAB/Python | Extraction of 46 EGM features (functional, spatial, spectral, time-frequency) |
| Machine Learning Libraries [31] | Scikit-learn, XGBoost | Implementation of LR, DT, SVM, MLP, XGBoost algorithms |
| Porcine MI Model [2] | Chronic myocardial infarction | Validation of ablation target localization algorithms |
| Holter Monitoring System [29] | 24-hour ambulatory ECG | PVC burden quantification and morphology analysis |

The prediction of VT/PVC origins, recurrence risk, and long-term complications represents a critical frontier in clinical electrophysiology. Traditional clinical factors provide foundational prognostic information, but ML approaches demonstrate emerging superiority in processing complex electrophysiological signals for precise localization and personalized risk assessment. The validation of these models requires rigorous benchmarking against the performance metrics and experimental protocols outlined in this guide. As the field advances, standardized evaluation frameworks will be essential for translating algorithmic predictions into improved clinical outcomes for patients with ventricular arrhythmias.

The volume and complexity of patient data in electrophysiology have grown exponentially, creating significant cognitive burden for clinicians navigating fragmented electronic health record (EHR) interfaces during complex procedures such as ventricular tachycardia (VT) ablation [32]. In high-pressure environments like the electrophysiology laboratory, where time-critical decisions must be made based on rapidly accessible information, poor EHR usability and unfiltered data presentation contribute to inefficiencies, potential errors, and clinician burnout [32]. Patient-centered dashboards that automatically extract and visually organize relevant clinical data offer a promising strategy to mitigate these challenges by supporting clinical reasoning and rapid comprehension [32]. For VT ablation research and practice, integrating machine learning (ML) risk prediction models directly into EHR dashboards represents a transformative approach to personalizing procedural planning and long-term management. This guide objectively compares the current landscape of EHR integration frameworks, visualization strategies, and validation methodologies for procedural decision support in ablation therapy.

EHR Dashboard Design Frameworks for Procedural Support

Core Design Principles and Data Filtering Methodologies

Effective EHR dashboards for procedural support employ either rule-based systems or AI-driven models to filter and prioritize clinically relevant parameters from extensive patient records [32]. These systems emphasize alignment with clinicians' cognitive workflows, presenting key parameters such as medications, allergies, vital signs, past medical history, and care directives through intuitive visual interfaces [32].
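The rule-based variant of this filtering can be sketched in a few lines. The example below is only an illustration under stated assumptions: the `Rule` fields, the priority ordering, the item caps, and the record layout are all hypothetical and are not taken from [32].

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class Rule:
    category: str   # key in the patient record, e.g. "medications"
    priority: int   # lower number is displayed first
    max_items: int  # cap per panel so the view stays scannable

# Hypothetical rule set; a real deployment would derive it from clinician input.
RULES = [
    Rule("allergies", 1, 5),
    Rule("medications", 2, 5),
    Rule("vital_signs", 3, 4),
    Rule("past_medical_history", 4, 5),
    Rule("care_directives", 5, 2),
]

def build_dashboard(record, rules=RULES):
    """Return (category, items) pairs in display order.

    Categories without a rule are dropped, and empty categories are skipped.
    """
    panel = []
    for rule in sorted(rules, key=lambda r: r.priority):
        items = record.get(rule.category, [])
        if items:
            panel.append((rule.category, items[:rule.max_items]))
    return panel

# Toy record: "billing_notes" has no rule, "vital_signs" is empty.
record = {
    "medications": ["amiodarone", "metoprolol"],
    "allergies": ["iodinated contrast"],
    "billing_notes": ["not clinically relevant here"],
    "vital_signs": [],
}
panel = build_dashboard(record)
```

An AI-driven filter would replace the fixed `priority` values with scores produced by a model, while the display logic could stay the same.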

The design processes often incorporate user-centered and iterative methods, though the rigor of evaluation varies widely across implementations [32]. Successful dashboards function as a central visual interface that interprets and displays vital performance measurements and patient information, breaking down complex siloed data into simplified visual forms such as charts, graphs, and summary tables [33]. These systems provide role-specific views that present only relevant KPIs to different users (physicians, nurses, administrators), with real-time data refresh capabilities and drill-down functionalities for accessing detailed patient records [33].

Quantitative Assessment of Dashboard Efficacy

Table 1: Measured Outcomes of Implemented EHR Dashboard Systems

| Implementation Setting | Productivity Metric | Improvement Percentage | Key Functionalities |
|---|---|---|---|
| General Provider Practices | Administrative & Clinical Task Completion | 40% faster [33] | Centralized task automation, real-time patient flow tracking, rapid analytics visualization |
| UCHealth Nursing Staff | Initial Training Satisfaction | 75% increase [34] | Asynchronous learning integration, multiple access points for educational materials |
| UCHealth Nursing Staff | Self-Reported Efficiency | 27% increase [34] | Workflow-embedded training resources, just-in-time information access |
| M Health Fairview | Net EHR Experience Score (NEES) | 19-point higher vs. peers [34] | Centralized learning library, support chat, provider efficiency sessions |
| M Health Fairview | EHR-Enabled Efficiency Agreement | 15 percentage-point higher [34] | Single source of truth architecture, workflow-integrated training |

Machine Learning Model Performance for Ablation Risk Stratification

Comparative Algorithm Performance in Cardiovascular Applications

ML-based prediction models have demonstrated superior discriminatory performance compared to conventional risk scores across multiple cardiovascular applications. A 2025 systematic review and meta-analysis of 10 studies (n=89,702 individuals) found that ML-based models significantly outperformed conventional risk scores for predicting major adverse cardiovascular and cerebrovascular events (MACCEs) in patients with acute myocardial infarction who underwent percutaneous coronary intervention [35].

Table 2: Machine Learning vs. Conventional Risk Score Performance for Cardiovascular Event Prediction

| Prediction Task | ML Model Type | Conventional Comparator | Performance Metric | ML Performance | Conventional Score Performance |
|---|---|---|---|---|---|
| Mortality post-PCI | Random Forest, Logistic Regression [35] | GRACE, TIMI [35] | ROC AUC | 0.88 (95% CI 0.86-0.90) [35] | 0.79 (95% CI 0.75-0.84) [35] |
| 3-Year HF after PVC Ablation | LightGBM with ROSE [1] | Logistic Regression Baseline [1] | ROC AUC | 0.822 [1] | Not specified |
| 3-Year Mortality after PVC Ablation | LightGBM with ROSE [1] | Logistic Regression with ROSE [1] | ROC AUC | 0.882 [1] | 0.886 [1] |

For predicting three-year heart failure after premature ventricular contraction (PVC) ablation, the LightGBM model with random over-sampling examples (ROSE) achieved the highest ROC AUC at 0.822, while for three-year mortality, both logistic regression with ROSE and LightGBM with ROSE showed balanced performance with ROC AUCs of 0.886 and 0.882, respectively [1]. Pairwise DeLong tests indicated these leading models formed a high-performing cluster without significant differences in ROC AUC [1].

Key Predictors in Ablation Risk Stratification Models

Explainability analysis using SHAP (SHapley Additive exPlanations) values identified age, prior heart failure, malignancy, and end-stage renal disease as the most influential predictors of long-term outcomes after PVC ablation [1]. Similarly, the systematic review [35] identified age, systolic blood pressure, and Killip class as the top-ranked predictors of mortality in both ML models and conventional risk scores. These findings show that the most robust predictors across models are primarily nonmodifiable clinical characteristics, which points to an important limitation of current modeling approaches: they largely exclude psychosocial and behavioral variables [35].
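To make the attribution idea concrete, the sketch below computes exact Shapley values for a toy risk score by enumerating feature coalitions. It illustrates the definition that SHAP builds on and is not the SHAP library's estimator or the analysis performed in [1]; the feature encoding, weights, patient, and cohort baseline are hypothetical.

```python
from itertools import combinations
from math import factorial

def shapley_values(predict, x, baseline):
    """Exact Shapley values for one instance x relative to a baseline vector.

    A feature that is "absent" from a coalition takes its baseline value.
    Cost grows as 2^n, so this is only practical for a handful of features.
    """
    n = len(x)
    features = range(n)

    def value(subset):
        z = [x[i] if i in subset else baseline[i] for i in features]
        return predict(z)

    phi = [0.0] * n
    for i in features:
        others = [j for j in features if j != i]
        for k in range(n):
            # Weight of a coalition of size k that excludes feature i.
            weight = factorial(k) * factorial(n - k - 1) / factorial(n)
            for subset in combinations(others, k):
                s = set(subset)
                phi[i] += weight * (value(s | {i}) - value(s))
    return phi

# Hypothetical linear risk score; weights are illustrative, not from [1].
NAMES = ["age_decades", "prior_hf", "malignancy", "esrd"]
WEIGHTS = [0.3, 1.2, 0.8, 1.0]

def risk_score(z):
    return sum(w * v for w, v in zip(WEIGHTS, z))

patient = [7.5, 1, 0, 1]             # hypothetical patient
cohort_mean = [6.0, 0.2, 0.1, 0.05]  # hypothetical baseline (cohort averages)
phi = shapley_values(risk_score, patient, cohort_mean)
```

The attributions sum to the difference between the patient's score and the baseline score, and the sign of each value gives the direction of the feature's contribution. For tree ensembles such as LightGBM, practical SHAP implementations rely on faster model-specific algorithms rather than this brute-force enumeration.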

Experimental Protocols for ML Model Validation in VT Ablation Research

Dataset Curation and Preprocessing Methodology

The development of robust ML models for VT ablation research requires meticulous dataset curation. The study in [1] used a nationwide claims database (National Health Insurance Research Database) covering 4195 adults who underwent PVC ablation. To address class imbalance, a critical challenge in rare-event prediction, the researchers applied two resampling techniques: Synthetic Minority Over-sampling Technique (SMOTE) and Random Over-Sampling Examples (ROSE) [1].
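The idea behind ROSE can be sketched as a smoothed bootstrap: draw a class with equal probability, pick a real case from that class, and generate a new case in its neighbourhood. The version below is a simplified illustration, not the implementation used in [1]. It assumes continuous features and binary labels, and it uses a fixed Gaussian `bandwidth` where the published method estimates the kernel width from the data.

```python
import random

def smoothed_bootstrap(X, y, n_samples, bandwidth=0.1, seed=0):
    """ROSE-style resampling sketch for binary labels (0/1).

    Each synthetic row is a real row of a randomly chosen class plus
    Gaussian noise, so both classes end up roughly equally represented.
    """
    rng = random.Random(seed)
    by_class = {0: [], 1: []}
    for row, label in zip(X, y):
        by_class[label].append(row)

    X_new, y_new = [], []
    for _ in range(n_samples):
        label = rng.choice([0, 1])             # each class with probability 1/2
        centre = rng.choice(by_class[label])   # a real case from that class
        X_new.append([v + rng.gauss(0.0, bandwidth) for v in centre])
        y_new.append(label)
    return X_new, y_new

# Toy data: 95 majority-class rows and 5 minority-class rows.
X = [[i * 0.01, 1.0] for i in range(95)] + [[2.0, 3.0 + i * 0.1] for i in range(5)]
y = [0] * 95 + [1] * 5
X_bal, y_bal = smoothed_bootstrap(X, y, n_samples=1000)
minority_share = sum(y_bal) / len(y_bal)
```

Resampling of this kind should be applied to the training portion of each split only. If synthetic cases leak into the evaluation data, the reported discrimination will be optimistic.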

The model comparison framework evaluated five supervised algorithms: logistic regression, decision tree, random forest, XGBoost, and LightGBM [1]. Discrimination was assessed by stratified five-fold cross-validation using the area under the receiver operating characteristic curve (ROC AUC). Given that rare events can bias ROC analysis, the protocol additionally examined precision-recall (PR) curves for a more comprehensive performance assessment [1].
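The evaluation ingredients named above can be written out without any ML library. The sketch below is a minimal illustration, not the code from [1]: it builds stratified folds, computes ROC AUC as the probability that a positive case outranks a negative one, and computes average precision as a summary of the PR curve. The toy labels and scores are made up.

```python
import random

def stratified_folds(y, k=5, seed=0):
    """Assign indices to k folds while preserving class proportions."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    for label in sorted(set(y)):
        idx = [i for i, v in enumerate(y) if v == label]
        rng.shuffle(idx)
        for pos, i in enumerate(idx):
            folds[pos % k].append(i)   # deal each class out round-robin
    return folds

def roc_auc(y_true, scores):
    """P(random positive scores higher than random negative); ties count 1/2."""
    pos = [s for s, t in zip(scores, y_true) if t == 1]
    neg = [s for s, t in zip(scores, y_true) if t == 0]
    wins = sum((p > n) + 0.5 * (p == n) for p in pos for n in neg)
    return wins / (len(pos) * len(neg))

def average_precision(y_true, scores):
    """Mean precision at the rank of each positive case.

    Assumes at least one positive and ignores tied scores for simplicity.
    """
    ranked = sorted(zip(scores, y_true), key=lambda pair: -pair[0])
    hits, total = 0, 0.0
    for rank, (_, t) in enumerate(ranked, start=1):
        if t == 1:
            hits += 1
            total += hits / rank
    return total / hits

# 10% event rate: every fold should receive the same share of events.
y = [1] * 10 + [0] * 90
folds = stratified_folds(y, k=5)
positives_per_fold = [sum(y[i] for i in fold) for fold in folds]

demo_auc = roc_auc([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
demo_ap = average_precision([0, 0, 1, 1], [0.1, 0.4, 0.35, 0.8])
```

In a full pipeline, the model would be refit on the four training folds and scored on the held-out fold in each of the five iterations, with both metrics averaged across folds.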

Validation Standards and Integration Workflows

To ensure clinical relevance and translational potential, ML models for VT ablation require rigorous validation frameworks. The TRIPOD+AI (Transparent Reporting of a Multivariable Prediction Model for Individual Prognosis or Diagnosis + AI) checklist provides essential guidance for reporting standards [35]. Additionally, the Prediction Model Risk of Bias Assessment Tool (PROBAST) and CHARMS (Checklist for Critical Appraisal and Data Extraction for Systematic Reviews of Prediction Modelling Studies) offer structured methodologies for quality appraisal [35].

Successful integration of validated models into clinical workflows follows a structured pathway from development to implementation, with continuous validation checkpoints to ensure real-world performance. This workflow encompasses data extraction, model application, clinical decision support, and outcomes tracking, creating a closed-loop system for model refinement and validation.

Diagram 1: ML Model Development and Clinical Integration Workflow. This workflow outlines the comprehensive process from data extraction through clinical implementation, highlighting critical validation checkpoints and the continuous learning cycle essential for maintaining model performance in real-world settings.

Table 3: Essential Resources for VT Ablation Prediction Research

| Resource Category | Specific Tool/Solution | Research Application Function |
|---|---|---|
| Data Standards | FHIR (Fast Healthcare Interoperability Resources) APIs [36] | Enables structured data formatting for seamless exchange of lab results, prescriptions, and clinical notes across systems |
| Class Imbalance Handling | SMOTE (Synthetic Minority Over-sampling Technique) [1] | Generates synthetic examples of minority classes to address bias in rare event prediction |
| Class Imbalance Handling | ROSE (Random Over-Sampling Examples) [1] | Creates artificial cases based on the original data distribution to balance dataset classes |
| Model Explainability | SHAP (SHapley Additive exPlanations) [1] | Quantifies feature contributions and directionality at cohort and patient levels for model transparency |
| Performance Validation | Stratified k-Fold Cross-Validation [1] | Maintains class distribution across folds for robust performance estimation on imbalanced datasets |
| Performance Metrics | Precision-Recall (PR) Curves [1] | Provides complementary assessment to ROC AUC for models predicting rare events |
| EHR Integration Framework | Role-Based Access Controls [36] | Ensures appropriate data visibility across research team roles while maintaining security compliance |
| 3D Mapping Integration | EnSite Precision Mapping System [37] | Provides electroanatomical mapping data for procedure planning and outcome correlation |

Implementation Challenges and Interoperability Considerations

Technical and Adoption Barriers

Despite promising performance metrics, implementing ML-enhanced dashboards faces significant challenges. Vendor lock-in and closed ecosystems present substantial barriers, with 68% of private clinics using legacy EHRs reporting costs exceeding £20,000 annually for third-party integration tools [36]. Data silos and inconsistent formats further complicate implementation, with one rheumatology clinic reporting 15 hours weekly spent manually reconciling mismatched lab results and EHR entries [36].

Additionally, model opacity reduces clinician trust and hinders adoption, as many complex algorithms exhibit black-box behavior that limits interpretability [1]. Transportability and stability are challenged by data heterogeneity, label noise, and tuning sensitivity, which can induce overfitting despite strong retrospective metrics [1]. Privacy and governance constraints further limit data sharing, and even federated approaches show inconsistent cross-institutional performance [1].

Interoperability Solutions for Research Environments

Effective interoperability solutions form the foundation for successful ML model integration. Cloud-based EHR platforms with native FHIR support reduce patient onboarding delays by 35% and significantly decrease lab sync errors [36]. The RESTful API architecture of FHIR standards enables real-time data exchange between EHRs, research databases, and visualization tools, creating a seamless pipeline for model input and output [36].
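As an illustration of how model inputs might be pulled from such an exchange, the sketch below parses a trimmed FHIR R4 search result and extracts one observation type. The bundle content, the patient reference, and the choice of heart rate (LOINC 8867-4) are assumptions made for the example, and no network call is made.

```python
import json

# Hypothetical, heavily trimmed FHIR R4 "searchset" Bundle, of the kind a
# server might return for a search such as
#   GET [base]/Observation?patient=example&code=http://loinc.org|8867-4
BUNDLE_JSON = """
{
  "resourceType": "Bundle",
  "type": "searchset",
  "entry": [
    {"resource": {
      "resourceType": "Observation",
      "status": "final",
      "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
      "subject": {"reference": "Patient/example"},
      "effectiveDateTime": "2024-01-15T08:30:00Z",
      "valueQuantity": {"value": 62, "unit": "beats/minute"}
    }},
    {"resource": {
      "resourceType": "Observation",
      "status": "final",
      "code": {"coding": [{"system": "http://loinc.org", "code": "8867-4", "display": "Heart rate"}]},
      "subject": {"reference": "Patient/example"},
      "effectiveDateTime": "2024-01-14T09:00:00Z",
      "valueQuantity": {"value": 70, "unit": "beats/minute"}
    }},
    {"resource": {"resourceType": "AllergyIntolerance", "id": "a1"}}
  ]
}
"""

def extract_observations(bundle, loinc_code):
    """Return (timestamp, value, unit) tuples for one LOINC code, oldest first."""
    rows = []
    for entry in bundle.get("entry", []):
        res = entry.get("resource", {})
        if res.get("resourceType") != "Observation":
            continue  # skip other resource types mixed into the bundle
        codes = {
            c.get("code")
            for c in res.get("code", {}).get("coding", [])
            if c.get("system") == "http://loinc.org"
        }
        if loinc_code in codes and "valueQuantity" in res:
            q = res["valueQuantity"]
            rows.append((res.get("effectiveDateTime"), q["value"], q.get("unit")))
    return sorted(rows, key=lambda r: r[0] or "")

bundle = json.loads(BUNDLE_JSON)
heart_rates = extract_observations(bundle, "8867-4")
```

The resulting tuples could feed a feature table for a risk model or a trend panel on a dashboard. A production client would also need to handle authentication, paging through Bundle links, and observations that carry components instead of a single `valueQuantity`.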

Modern interoperability solutions also incorporate granular access controls that allow researchers to access specific data elements while maintaining compliance with GDPR, HIPAA, and institutional review board requirements [36]. These technical capabilities, combined with phased implementation rollouts that start with core functionality before adding advanced analytics, reduce adoption barriers and support longitudinal research initiatives across multiple institutions [36].