Wavelet Transform in Medical Imaging: From Denoising to AI-Driven Diagnostics

Abstract

This article provides a comprehensive exploration of wavelet transform techniques and their revolutionary impact on medical imaging. Tailored for researchers and drug development professionals, it delves into the foundational principles of multi-resolution analysis, showcasing cutting-edge applications in image denoising, compression, registration, and segmentation. The review systematically compares wavelet-based methods with traditional approaches, evaluates their performance using established clinical metrics, and addresses key optimization challenges for real-world deployment. By synthesizing recent advances and future directions, this work serves as a critical resource for leveraging wavelet transforms to enhance diagnostic accuracy, streamline data management, and accelerate innovation in biomedical research.

The Core Principles of Wavelet Transform and Multi-Resolution Analysis

For medical imaging researchers, the choice of signal transformation technique is paramount for tasks ranging from image compression and denoising to segmentation and registration. The Discrete Wavelet Transform (DWT) and Fourier Transform represent two fundamental mathematical approaches to analyzing image data, each with distinct advantages and limitations. The Fourier Transform decomposes a signal into constituent sinusoids of varying frequencies, providing a global frequency representation but lacking time localization [1]. In contrast, the DWT decomposes signals using localized wavelets—oscillations that are limited in duration—enabling simultaneous time-frequency analysis through multi-resolution analysis [2] [1]. This fundamental difference in approach has significant implications for medical image processing, where preserving both spatial and frequency information is often critical for diagnostic accuracy.

Theoretical Comparison: DWT vs. Fourier Transform

Table 1: Fundamental Properties of DWT and Fourier Transform

| Property | Discrete Wavelet Transform (DWT) | Fourier Transform |

|---|---|---|

| Domain Analysis | Time-frequency localization | Global frequency |

| Resolution | Variable time-frequency resolution | Uniform frequency resolution |

| Basis Functions | Localized wavelets (e.g., Haar, Daubechies) | Infinite sinusoids |

| Information Capture | Captures transient events and local features | Captures periodic patterns |

| Computational Complexity | O(N) for certain cases [2] | O(N log N) for FFT [3] |

| Invariance | Shift-variant (standard DWT) | Magnitude spectrum is shift-invariant (a shift changes only the phase) |

| Medical Imaging Strengths | Edge detection, compression, denoising | MRI reconstruction, spectroscopy, noise resilience |

The mathematical underpinnings of each transform directly inform their application strengths. The Fourier Transform's global frequency analysis makes it ideal for characterizing periodic structures and stationary patterns, and it forms the mathematical foundation for Magnetic Resonance Imaging (MRI) and Fourier Transform Infrared (FTIR) spectroscopy [4] [5]. The DWT's multi-resolution capability allows it to hierarchically decompose an image into approximation (low-frequency) and detail (high-frequency) coefficients across scales, preserving structural information like edges and textures crucial for diagnostic interpretation [2] [6]. This multi-scale representation enables progressive image transmission and scalable compression, valuable for telemedicine applications.
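As a minimal sketch of the approximation/detail split described above, the following NumPy code implements one level of a 2D Haar DWT and its inverse by hand. The function names `haar_dwt2` and `haar_idwt2` are illustrative and do not come from the cited works, the LH/HL labels follow one of several conventions in use, and in practice a library such as PyWavelets would normally replace this code.

```python
import numpy as np

def haar_dwt2(image):
    """One level of an orthonormal 2D Haar DWT (both image sides must be even)."""
    x = np.asarray(image, dtype=float)
    s = np.sqrt(2.0)
    # Filter along rows: pairwise sums (low-pass) and differences (high-pass).
    lo = (x[:, 0::2] + x[:, 1::2]) / s
    hi = (x[:, 0::2] - x[:, 1::2]) / s
    # Filter along columns of each intermediate result.
    ll = (lo[0::2, :] + lo[1::2, :]) / s   # approximation
    lh = (lo[0::2, :] - lo[1::2, :]) / s   # detail: changes between rows
    hl = (hi[0::2, :] + hi[1::2, :]) / s   # detail: changes between columns
    hh = (hi[0::2, :] - hi[1::2, :]) / s   # detail: diagonal changes
    return ll, (lh, hl, hh)

def haar_idwt2(ll, details):
    """Inverse of haar_dwt2; undoes the column step, then the row step."""
    lh, hl, hh = details
    s = np.sqrt(2.0)
    lo = np.empty((ll.shape[0] * 2, ll.shape[1]))
    hi = np.empty_like(lo)
    lo[0::2, :], lo[1::2, :] = (ll + lh) / s, (ll - lh) / s
    hi[0::2, :], hi[1::2, :] = (hl + hh) / s, (hl - hh) / s
    x = np.empty((lo.shape[0], lo.shape[1] * 2))
    x[:, 0::2], x[:, 1::2] = (lo + hi) / s, (lo - hi) / s
    return x

# Toy image with a single vertical edge between columns 2 and 3.
image = np.zeros((8, 8))
image[:, 3:] = 1.0
ll, (lh, hl, hh) = haar_dwt2(image)
reconstructed = haar_idwt2(ll, (lh, hl, hh))
```

In this toy case the edge appears only in the `hl` sub-band, at the position where it occurs, while `ll` holds a half-resolution version of the image. That pairing of position and scale is the property the comparison with the Fourier Transform relies on.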

Performance Metrics and Quantitative Comparison

Table 2: Performance Comparison in Medical Imaging Applications

| Application | Transform Method | Key Metrics | Reported Performance |

|---|---|---|---|

| Medical Image Denoising | Block-based Discrete Fourier Cosine Transform (DFCT) [7] | SNR, PSNR, Image Quality (IM) | Consistently outperformed global DWT across all noise types (Gaussian, Uniform, Poisson, Salt-and-Pepper) |

| Medical Image Denoising | Global DWT Approach [7] | SNR, PSNR, Image Quality (IM) | Inferior to block-based DFCT across all tested noise models |

| Medical Image Compression | DWT + Cross-Attention Learning [8] | PSNR, SSIM, MSE | Superior to JPEG2000 and BPG on LIDC-IDRI, LUNA16, and MosMed datasets |

| Medical Image Segmentation | FFTMed (Fourier-based) [9] | Dice Score, Computational Efficiency | Competitive accuracy with significantly lower computational overhead and enhanced adversarial noise resilience |

| Computational Duration | FFT [3] | Execution Time (Theoretical) | O(N log N) complexity |

| Computational Duration | DWT [3] | Execution Time (Theoretical) | O(N) complexity for certain cases (e.g., Haar wavelet) |

Recent comparative studies reveal nuanced performance characteristics. For image denoising, contrary to the common hypothesis favoring wavelets, a 2025 study found that a block-based Discrete Fourier Cosine Transform (DFCT) approach consistently outperformed a global DWT approach across multiple noise types and metrics [7]. The superior performance was attributed to DFCT's localized processing strategy, which better preserves fine details by operating on small image blocks and adapting to local statistics without introducing global artifacts [7]. However, in compression applications, hybrid frameworks combining DWT with deep learning modules demonstrate state-of-the-art performance, outperforming standard codecs like JPEG2000 [8].

Application Protocols in Medical Imaging Research

Protocol I: Wavelet-Based Medical Image Denoising and Enhancement

This protocol details a hybrid approach combining undecimated DWT (UDWT) with wavelet coefficient mapping for simultaneous denoising and contrast enhancement [6].

Title: Wavelet-Based Denoising and Enhancement Workflow

Step-by-Step Methodology:

- Image Decomposition: Perform 2D Undecimated Discrete Wavelet Transform (UDWT) on the original medical image using the Db2 wavelet basis function up to resolution level 2. UDWT provides shift-invariance compared to standard DWT [6].

- Hierarchical Correlation Calculation: For the three detail coefficient subbands (horizontal, vertical, diagonal), calculate correlation values between level 1 and level 2 coefficients using: ImgCor(p,q) = |Coef_lev1(p,q) × Coef_lev2(p,q)| [6].

- Adaptive Threshold Determination:

- Find the maximum correlation value in each row of the correlation image for each subband.

- Compute the mean (Mean_max) of these maximum values.

- Eliminate correlation values greater than 0.8 × Mean_max (considered signal).

- Compute the standard deviation (σ) from the remaining correlations.

- Set the threshold THR = 1.6 × σ [6].

- Coefficient Thresholding: Apply the threshold to level 1 detail coefficients. If |Coef_lev1 × Coef_lev2| ≥ THR, retain Coef_lev1; otherwise, set it to zero [6].

- Initial Reconstruction: Perform inverse UDWT using the approximation coefficients of level 1 and the modified detail coefficients to reconstruct the denoised image.

- Contrast Enhancement: Apply a sigmoid-type mapping function to the wavelet coefficients of the denoised image: w_output_j = a × [1 / (1 + 1/exp((w_input_j - c)/b))] × w_input_j [%], where a = 2^(-(j-1)N). This weights lower decomposition levels more heavily to enhance edges [6].

- Final Image Reconstruction: Perform an inverse DWT to generate the final denoised and contrast-enhanced medical image.
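The correlation and thresholding steps of this protocol can be sketched for a single detail subband as follows. This is an illustration under stated assumptions rather than the implementation from [6]: the function name `correlation_threshold` and its parameter names are hypothetical, the two inputs are assumed to be same-shaped level 1 and level 2 coefficient arrays (as an undecimated transform produces), and the decomposition itself is left out.

```python
import numpy as np

def correlation_threshold(coef_lev1, coef_lev2, signal_frac=0.8, k=1.6):
    """Keep level-1 detail coefficients whose inter-scale correlation reaches THR."""
    c1 = np.asarray(coef_lev1, dtype=float)
    c2 = np.asarray(coef_lev2, dtype=float)
    # ImgCor(p,q) = |Coef_lev1(p,q) x Coef_lev2(p,q)|
    img_cor = np.abs(c1 * c2)
    # Mean of the per-row maxima of the correlation image.
    mean_max = img_cor.max(axis=1).mean()
    # Values above 0.8 x Mean_max are treated as signal and excluded.
    noise_like = img_cor[img_cor <= signal_frac * mean_max]
    # THR = 1.6 x sigma of the remaining correlations.
    thr = k * noise_like.std()
    # Retain Coef_lev1 where the correlation reaches THR, otherwise zero it.
    kept = np.where(img_cor >= thr, c1, 0.0)
    return kept, thr

# Synthetic subband: weak noise plus one strong column that persists across scales.
rng = np.random.default_rng(0)
lev1 = rng.normal(0.0, 0.1, (16, 16))
lev2 = rng.normal(0.0, 0.1, (16, 16))
lev1[:, 4] += 5.0
lev2[:, 4] += 5.0
kept, thr = correlation_threshold(lev1, lev2)
```

Coefficients that are large at both scales produce a large product and survive, whereas noise that is uncorrelated between scales mostly falls below the threshold.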

Protocol II: Deep Learning-Based Medical Image Compression Using DWT

This protocol employs DWT within a deep learning framework for superior compression performance while preserving diagnostic regions [8].

Title: DWT Deep Learning Compression Pipeline

Step-by-Step Methodology:

- Multi-resolution Decomposition: Decompose input medical images into multi-resolution frequency sub-bands (LL, LH, HL, HH) using Discrete Wavelet Transform (DWT) [8].

- Cross-Attention Feature Prioritization: Process sub-bands through a Cross-Attention Learning (CAL) module that dynamically weights feature maps, emphasizing regions with high diagnostic information (e.g., lesions, tissue boundaries) while reducing redundancy in less critical areas [8].

- Probabilistic Feature Representation: Encode the weighted features using a lightweight Variational Autoencoder (VAE) to create a robust probabilistic latent space representation, refining features before final encoding [8].

- Entropy Encoding and Storage: Apply entropy coding (e.g., arithmetic coding) to the quantized latent representation to produce the final compressed bitstream for efficient transmission or storage [8].

Protocol III: Fourier-Based Medical Image Segmentation (FFTMed)

This protocol outlines a Fourier domain approach for lightweight and noise-resilient medical image segmentation [9].

Title: FFTMed Segmentation Framework

Step-by-Step Methodology:

- Domain Transformation: Convert input medical images from the spatial domain to the frequency domain using a 2D Fast Fourier Transform (FFT) [9].

- High-Frequency Filtering: Discard a portion of the high-frequency components in the first half of the network to reduce noise and computational load, leveraging the inherent noise resilience of the frequency domain [9].

- Frequency Attention Processing: Process the frequency data through Encoder Frequency Modules (EFM) that utilize frequency attention mechanisms to capture long-range dependencies and comprehensive amplitude-phase information [9].

- Feature Aggregation: Replace standard max-pooling with a Hybrid Kernel Aggregation Anti-Aliasing Module to preserve critical spectral details during down-sampling [9].

- Output Refinement: Integrate a refinement module that iteratively enhances the predicted probability maps to mitigate potential blurring or ringing artifacts, ensuring accurate final segmentation [9].
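The first two steps (domain transformation and discarding high-frequency components) can be illustrated outside any network with a plain NumPy low-pass filter. This is only a sketch of the general idea: the function name `fft_lowpass`, the rectangular mask, and the `keep_fraction` parameter are assumptions for illustration, and FFTMed [9] applies its filtering inside learned modules that are not reproduced here.

```python
import numpy as np

def fft_lowpass(image, keep_fraction=0.5):
    """Zero out 2D frequency components outside a centered low-frequency window."""
    if not 0.0 < keep_fraction <= 1.0:
        raise ValueError("keep_fraction must be in (0, 1]")
    x = np.asarray(image, dtype=float)
    rows, cols = x.shape
    # Spatial domain -> frequency domain, with the zero frequency moved to the center.
    spectrum = np.fft.fftshift(np.fft.fft2(x))
    half_r = max(1, int(rows * keep_fraction / 2))
    half_c = max(1, int(cols * keep_fraction / 2))
    cr, cc = rows // 2, cols // 2
    # Symmetric window around the center so the inverse transform stays real.
    mask = np.zeros((rows, cols), dtype=bool)
    mask[cr - half_r:cr + half_r + 1, cc - half_c:cc + half_c + 1] = True
    filtered = np.where(mask, spectrum, 0)
    return np.real(np.fft.ifft2(np.fft.ifftshift(filtered)))

# Smooth pattern plus a pixel-level checkerboard standing in for high-frequency noise.
n = 32
smooth = np.tile(np.cos(2 * np.pi * np.arange(n) / n), (n, 1))
checker = (-1.0) ** np.add.outer(np.arange(n), np.arange(n))
cleaned = fft_lowpass(smooth + 0.5 * checker, keep_fraction=0.5)
```

Because the checkerboard lives entirely at the highest frequency, the mask removes it and leaves the smooth pattern intact. Real images do not separate this cleanly, which is why the protocol pairs filtering with a refinement module to counter blurring and ringing.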

The Scientist's Toolkit: Essential Research Reagents and Materials

Table 3: Essential Research Materials and Computational Tools

| Item/Reagent | Function/Application | Specification Notes |

|---|---|---|

| Haar Wavelet | Lossless image decomposition for registration [10], simple denoising | Orthogonal, symmetric, single-level discontinuity; ideal for edge detection and structural preservation. |

| Daubechies (Db2/Db4) | Medical image denoising [6] and compression [8] | Compact support with vanishing moments; balances smoothness and localization. |

| Symlet (Sym4) | Feature extraction in physiological signals like ECG [1] | Near-symmetric; improves signal reconstruction quality for feature detection. |

| Fast Fourier Transform (FFT) | Foundation for MRI reconstruction [4], frequency-domain segmentation [9] | Efficient O(N log N) algorithm; transforms spatial data to global frequency representation. |

| Discrete Fourier Cosine Transform (DFCT) | Block-based medical image denoising [7] | Localized frequency processing; excels in preserving fine details without global artifacts. |

| Benchmark Datasets (LIDC-IDRI, LUNA16, MosMed) | Training and validation of compression/denoising algorithms [8] | Publicly available curated medical images (CT scans) with annotations for standardized comparison. |

| Adversarial Noise Benchmark Datasets | Evaluating model robustness and noise resilience [9] | Custom datasets incorporating various noise levels (Gaussian, salt-and-pepper) for stress-testing. |

The comparative analysis reveals that neither DWT nor Fourier transforms represent universally superior solutions for medical imaging; rather, they offer complementary strengths. DWT excels in applications requiring spatial localization and multi-resolution analysis, such as image compression and registration, where preserving edges and structural hierarchies is paramount [8] [10]. Fourier-based methods demonstrate superior performance in global frequency analysis and noise resilience, making them ideal for MRI reconstruction and adversarial attack resistance [9] [4]. Emerging research indicates that hybrid approaches, which integrate the strengths of both transforms with deep learning architectures, represent the most promising future direction. These include wavelet-guided ConvNeXt for registration [10], Fourier-based lightweight segmentation networks [9], and cross-attention wavelet frameworks for compression [8], ultimately advancing the precision and efficiency of medical image analysis for improved diagnostic outcomes.

Multi-Resolution Analysis (MRA), particularly through the Discrete Wavelet Transform (DWT), provides a powerful framework for decomposing medical images into constituent frequency sub-bands, enabling specialized processing of anatomical features at different scales [8]. This decomposition separates an image into a multi-scale representation comprising approximation coefficients (low-frequency components carrying broad structural information) and detail coefficients (high-frequency components containing fine textures, edges, and diagnostic details) [8] [11]. Unlike traditional Fourier methods that offer only frequency localization, DWT delivers both frequency and spatial localization, allowing researchers to isolate and analyze specific image features within particular spatial regions [12]. This capability is particularly valuable in medical imaging, where diagnostically relevant information is often concentrated in specific frequency ranges and anatomical locations.

The mathematical foundation of DWT involves projecting an image onto a set of basis functions derived from a mother wavelet through scaling and translation operations [11]. This process generates a hierarchical decomposition across multiple resolution levels, with each level further separating frequency components. For medical image analysis, this multi-scale approach enables researchers to develop algorithms that selectively process or enhance features based on their clinical significance [8] [13]. The practical implementation typically utilizes filter banks with low-pass and high-pass filters to separate frequency components, followed by downsampling to create the multi-resolution pyramid [14]. This technical foundation supports diverse medical imaging applications including compression, synthesis, and denoising, which will be explored in subsequent sections of this document.
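The filter-bank view can be made concrete with a short 1D example. The sketch below uses the Haar filters because they reduce to pairwise sums and differences; the function names `haar_analysis` and `haar_pyramid` are illustrative, and longer filters such as Daubechies would require proper convolution and boundary handling that is omitted here.

```python
import numpy as np

def haar_analysis(signal):
    """One filter-bank stage: low-pass and high-pass filtering, each downsampled by 2."""
    x = np.asarray(signal, dtype=float)
    approx = (x[0::2] + x[1::2]) / np.sqrt(2.0)   # low-pass branch
    detail = (x[0::2] - x[1::2]) / np.sqrt(2.0)   # high-pass branch
    return approx, detail

def haar_pyramid(signal, levels):
    """Repeat the stage on the approximation to build the multi-resolution pyramid."""
    approx = np.asarray(signal, dtype=float)
    details = []
    for _ in range(levels):
        approx, detail = haar_analysis(approx)
        details.append(detail)          # ordered from finest to coarsest scale
    return approx, details

# A 16-sample signal decomposed over 3 levels gives details of length 8, 4 and 2.
approx, details = haar_pyramid(np.arange(16.0), levels=3)
print([len(d) for d in details], len(approx))
```

Each stage halves the number of approximation samples, so the total coefficient count equals the input length, and because the Haar filters are orthonormal the signal energy is preserved across the decomposition.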

Key Applications in Medical Imaging Research

Image Compression for Telemedicine

Wavelet-based multi-resolution analysis enables advanced medical image compression by separating image content into frequency sub-bands that can be selectively quantized based on their diagnostic importance [8]. Recent research incorporates cross-attention learning (CAL) modules with DWT to create hybrid compression frameworks that dynamically weight feature maps, prioritizing clinically relevant regions such as lesions or tissue boundaries [8]. This approach achieves superior rate-distortion optimization compared to conventional codecs like JPEG2000 and H.265/HEVC, significantly reducing storage and transmission bandwidth requirements while preserving diagnostic integrity [8]. The integration of Variational Autoencoders (VAEs) further enhances compression efficiency by providing a probabilistic latent space for entropy coding, making these methods particularly valuable for cloud-based healthcare platforms and real-time telemedicine applications [8].

Multi-Modal Medical Image Synthesis

Dual-branch wavelet encoding architectures leverage MRA to address the challenging problem of multi-modal medical image synthesis, where missing imaging modalities are generated from available data [14]. These systems employ wavelet multi-scale downsampling (Wavelet-MS-Down) modules that perform near-lossless feature dimensionality reduction by separately processing low-frequency structural contours and high-frequency anatomical details [14]. The decomposition enables targeted processing of different frequency components, with deformable cross-attention feature fusion (DCFF) modules facilitating deep interaction between features extracted from different source modalities [14]. This approach has demonstrated particular effectiveness in brain MRI synthesis, where it successfully generates missing sequences (T1, T1ce, T2, FLAIR) by exploiting complementary information across modalities while preserving high-frequency pathological features essential for diagnostic accuracy [14].

Medical Image Denoising

Wavelet-based MRA provides an effective framework for medical image denoising through thresholding of detail coefficients in the transform domain [11]. The approach leverages the statistical properties of wavelet coefficients: noise tends to spread across many small-magnitude coefficients, whereas anatomical structures concentrate in a few large ones [12]. Recent advancements combine DWT with Bayesian-optimized bilateral filtering to achieve enhanced denoising performance, particularly for Low-Dose Computed Tomography (LDCT) images corrupted by Gaussian noise [12] [11]. The bilateral filter's parameters are optimized using Bayesian methods to balance noise suppression against edge preservation [12]. One study reports that DWT-based denoising achieves PSNR values up to 33.85 dB and an SSIM of 0.7194 at noise level σ=10, outperforming other transform-domain methods such as PCA, MSVD, and DCT [11].

Tumor Diagnosis and Characterization

Topological Data Analysis (TDA) combined with wavelet transforms has emerged as a novel approach for extracting robust imaging biomarkers for tumor diagnosis [15] [16]. The WT-TDA algorithm leverages wavelet-based MRA to enhance topological feature representation in ultrasound images, effectively capturing multiscale pathological patterns associated with malignancy [15] [16]. By analyzing persistent homology across wavelet-decomposed sub-bands, the method identifies topological features (connected components, loops, voids) that correlate with histological diagnosis [16]. This approach has demonstrated exceptional diagnostic performance across multiple tumor types, achieving test accuracies of 0.932, 0.805, and 0.888 for breast, thyroid, and kidney cancers, respectively [15]. The method provides enhanced interpretability through SHAP analysis, identifying clinically relevant topological features that serve as quantitative biomarkers for malignant transformation [16].

Multi-Modal Image Fusion

Wavelet-based MRA enables effective fusion of complementary information from different imaging modalities, such as combining anatomical details from CT with functional information from PET [13]. The Wavelet Attention network (WTA-Net) incorporates spatial-channel attention mechanisms within the wavelet domain to selectively enhance diagnostically relevant features during fusion [13]. This approach processes individual frequency sub-bands with specialized attention modules, improving information entropy by 34.76% for PET components and 12.7% for CT components compared to standard wavelet decomposition [13]. The method effectively preserves metabolic activity information from PET while maintaining anatomical context from CT, creating fused images with comprehensive diagnostic information that supports improved clinical decision-making [13].
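The text does not state how [13] computes information entropy. A common choice, assumed here, is the Shannon entropy of the intensity histogram, which can be sketched as follows. The function name `image_entropy`, the 256-bin histogram, and the [0, 1] intensity range are illustrative assumptions.

```python
import numpy as np

def image_entropy(image, bins=256, value_range=(0.0, 1.0)):
    """Shannon entropy (in bits) of an image's intensity histogram."""
    hist, _ = np.histogram(np.asarray(image, dtype=float), bins=bins, range=value_range)
    # Convert counts to probabilities and skip empty bins (0 * log 0 is taken as 0).
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

# A flat image carries no information; two equally likely levels carry one bit.
flat = np.full((8, 8), 0.5)
two_level = np.zeros((8, 8))
two_level[:, 4:] = 1.0
print(image_entropy(flat), image_entropy(two_level))
```

Under this definition a higher value means the fused image uses a wider and more even spread of intensity levels, which is often read as a proxy for retained information rather than as a direct measure of diagnostic quality.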

Table 1: Quantitative Performance of Wavelet-Based Medical Imaging Applications

| Application Domain | Performance Metrics | Reported Values | Datasets Validated |

|---|---|---|---|

| Image Compression [8] | PSNR, SSIM, MSE | Superior to JPEG2000 and BPG | LIDC-IDRI, LUNA16, MosMed |

| Image Denoising [11] | PSNR, SSIM, SNR | PSNR: 33.85 dB, SSIM: 0.7194, SNR: 28.50 dB (σ=10) | SARS-CoV-2 CT-scan dataset |

| Tumor Diagnosis [15] | Accuracy, AUC | Accuracy: 0.932, 0.805, 0.888; AUC: 0.915, 0.805, 0.889 | Breast, Thyroid, Kidney Ultrasound |

| Image Fusion [13] | Information Entropy, Spatial Frequency | Information Entropy improved 34.76% (PET); Spatial Frequency improved 49.4% (CT) | Brain MRI, PET/CT datasets |

Experimental Protocols

Protocol 1: Wavelet-Based Medical Image Compression

Objective: To implement a hybrid compression framework combining DWT with cross-attention learning for diagnostic image compression.

Materials and Reagents:

- Medical image dataset (LIDC-IDRI, LUNA16, or MosMed)

- Python 3.8+ with PyWavelets, TensorFlow 2.8+

- High-performance computing workstation with GPU (NVIDIA RTX 3080+ recommended)

Methodology:

- Image Preprocessing: Normalize input images to the [0,1] range and resize them to dimensions divisible by 2^n, where n is the number of decomposition levels.

- Wavelet Decomposition: Apply 2D DWT with Daubechies-4 (db4) wavelets to decompose each image into approximation (LL), horizontal (HL), vertical (LH), and diagonal (HH) sub-bands at 3 decomposition levels [8].

- Cross-Attention Learning: Process approximation sub-band through CAL module with dynamic feature weighting:

- Compute query (Q), key (K), and value (V) projections from different feature dimensions

- Calculate attention weights: Attention(Q,K,V) = softmax(QK^T/√d)V

- Apply weights to emphasize diagnostically relevant regions [8]

- Variational Autoencoder Processing: Encode weighted features through lightweight VAE with bottleneck dimension of 128 for latent representation refinement.

- Entropy Coding: Apply arithmetic coding to quantized coefficients for final bitstream generation.

- Reconstruction: Reverse the process using Inverse DWT (IDWT) for image reconstruction.
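The attention formula in the cross-attention step, Attention(Q,K,V) = softmax(QK^T/√d)V, can be written directly in NumPy. This sketch takes Q, K, and V as given matrices. The learned projections that produce them, and everything specific to the CAL module in [8], are outside its scope, and the function names are illustrative.

```python
import numpy as np

def softmax(scores, axis=-1):
    """Numerically stable softmax along the given axis."""
    shifted = scores - scores.max(axis=axis, keepdims=True)
    exp = np.exp(shifted)
    return exp / exp.sum(axis=axis, keepdims=True)

def scaled_dot_product_attention(q, k, v):
    """Return softmax(q k^T / sqrt(d)) v together with the attention weights."""
    d = q.shape[-1]
    weights = softmax(q @ k.T / np.sqrt(d), axis=-1)   # one row of weights per query
    return weights @ v, weights

# Four query vectors attend over six key/value pairs of feature size 8.
rng = np.random.default_rng(0)
q = rng.normal(size=(4, 8))
k = rng.normal(size=(6, 8))
v = rng.normal(size=(6, 3))
out, weights = scaled_dot_product_attention(q, k, v)   # out has shape (4, 3)
```

Each output row is a weighted average of the value rows, with weights that grow when a query aligns with a key. In the compression setting this is the mechanism by which some regions receive more representational capacity than others.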

Validation Metrics: Calculate PSNR, SSIM, and MSE between original and reconstructed images. Compare with JPEG2000 and BPG codecs at equivalent bit rates [8].
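MSE and PSNR follow directly from their definitions, as sketched below. The function names and the `data_range` parameter are illustrative; `data_range=1.0` matches images normalized to [0,1] as in the preprocessing step. SSIM is more involved and is usually taken from an existing library rather than reimplemented.

```python
import numpy as np

def mse(reference, test):
    """Mean squared error between two images of equal shape."""
    ref = np.asarray(reference, dtype=float)
    tst = np.asarray(test, dtype=float)
    return float(np.mean((ref - tst) ** 2))

def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB; data_range is the maximum possible intensity span."""
    err = mse(reference, test)
    if err == 0.0:
        return float("inf")   # identical images
    return float(10.0 * np.log10(data_range ** 2 / err))

# A uniform error of 0.1 on a [0, 1] image gives MSE = 0.01 and PSNR = 20 dB.
original = np.zeros((4, 4))
degraded = original + 0.1
print(mse(original, degraded), psnr(original, degraded))
```

When comparing codecs, the same `data_range` and the same bit rate must be used for every method, otherwise PSNR values are not comparable.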

Protocol 2: Wavelet-Based Medical Image Denoising

Objective: To implement DWT-based denoising with Bayesian-optimized bilateral filtering for LDCT images.

Materials and Reagents:

- LDCT dataset (e.g., SARS-CoV-2 CT-scan dataset)

- MATLAB R2023a with Wavelet Toolbox

- Bayesian optimization toolbox

Methodology:

- Noise Characterization: Estimate noise parameters from homogeneous image regions using method of moments.

- Wavelet Decomposition: Apply 2D DWT with Symlets-8 (sym8) wavelets at 4 decomposition levels [11].

- Thresholding: Implement BayesShrink adaptive thresholding for detail coefficients:

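- Estimate the noise standard deviation σₙ from the level-1 HH sub-band: σₙ = median(|HH₁|)/0.6745; the noise variance is then σₙ²

- Calculate the signal standard deviation for each sub-band: σₓ = √max(σₛ² - σₙ², 0), where σₛ² is the observed variance of that sub-band's coefficients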

- Compute threshold: T = σₙ²/σₓ

- Apply soft thresholding to detail coefficients [12]

- Bayesian-Optimized Bilateral Filtering:

- Define objective function for parameter optimization: f(σs, σr) = -PSNR(denoised_image)

- Use Bayesian optimization with Gaussian process priors over 30 iterations to find optimal spatial (σs) and range (σr) parameters [12]

- Apply optimized bilateral filter as post-processing step

- Reconstruction: Apply the IDWT to the thresholded coefficients to reconstruct the image, which the optimized bilateral filter from the previous step then post-processes.
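The thresholding step can be sketched for one detail sub-band as follows. The sketch treats median(|HH₁|)/0.6745 as the noise standard deviation σₙ (the usual median-absolute-deviation estimate), takes σₛ² as the mean square of the sub-band's coefficients, and uses T = σₙ²/σₓ. The function names are illustrative, and the wavelet decomposition and bilateral filtering are not included.

```python
import numpy as np

def estimate_noise_sigma(hh1):
    """Robust noise standard deviation from the finest diagonal sub-band."""
    return float(np.median(np.abs(hh1)) / 0.6745)

def bayes_shrink(subband, sigma_n):
    """Soft-threshold one detail sub-band with the BayesShrink threshold."""
    c = np.asarray(subband, dtype=float)
    sigma_s2 = np.mean(c ** 2)                          # observed variance (zero-mean assumption)
    sigma_x = np.sqrt(max(sigma_s2 - sigma_n ** 2, 0.0))
    # With no signal variance left, the whole sub-band is treated as noise.
    t = np.inf if sigma_x == 0.0 else sigma_n ** 2 / sigma_x
    shrunk = np.sign(c) * np.maximum(np.abs(c) - t, 0.0)
    return shrunk, t

# Synthetic sub-band: sparse-looking signal plus Gaussian noise with sigma = 2.
rng = np.random.default_rng(1)
noise = rng.normal(0.0, 2.0, 10000)
signal = rng.laplace(0.0, 10.0, 10000)
sigma_n = estimate_noise_sigma(noise)   # stands in for the HH1 estimate
shrunk, t = bayes_shrink(signal + noise, sigma_n)
```

The threshold adapts per sub-band: it is small where signal dominates, so structure is kept, and grows without bound where the sub-band is indistinguishable from noise.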

Validation Metrics: Calculate PSNR, SNR, and SSIM at noise levels σ=10,20,30,40. Compare with PCA, MSVD, and DCT methods [11].

Table 2: Research Reagent Solutions for Wavelet-Based Medical Image Analysis

| Research Reagent | Function | Application Examples |

|---|---|---|

| PyWavelets Library | Python DWT implementation | Multi-resolution decomposition for compression, denoising |

| Daubechies Wavelets (db4) | Orthogonal wavelet with 4 vanishing moments | Medical image compression [8] |

| Symlets Wavelets (sym8) | Near-symmetric orthogonal wavelets | Image denoising with reduced phase distortion [11] |

| Bayesian Optimization Toolbox | Parameter optimization for bilateral filtering | Denoising parameter selection [12] |

| Cross-Attention Modules | Dynamic feature weighting | Region-of-interest emphasis in compression [8] |

| Topological Data Analysis Library | Persistent homology computation | Tumor biomarker extraction [15] [16] |

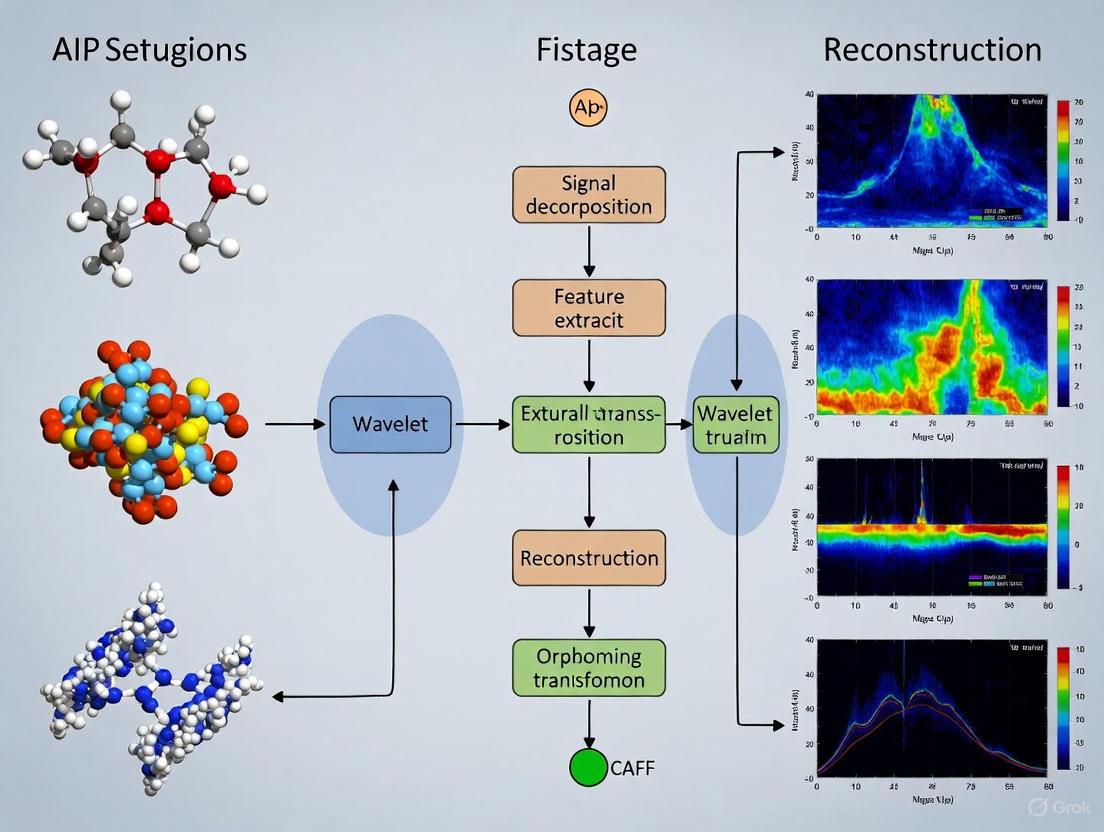

Visualization of Workflows

Wavelet-Based Medical Image Compression Workflow

Wavelet-Based Medical Image Denoising Protocol

Multi-resolution analysis through wavelet transform represents a versatile and powerful framework for advancing medical imaging research. By decomposing images into frequency sub-bands, researchers can develop specialized algorithms that selectively process clinically relevant information while suppressing noise and redundant data [8] [11]. The protocols outlined in this document provide practical methodologies for implementing wavelet-based approaches across key applications including compression, denoising, synthesis, and diagnostic biomarker extraction [8] [14] [15]. The quantitative results demonstrate consistent performance advantages over traditional methods, while the visualization workflows offer clear implementation guidance. As medical imaging continues to evolve toward precision medicine and quantitative biomarkers, wavelet-based MRA will remain an essential tool for extracting clinically meaningful information from medical images across scales and modalities.

Why Wavelets? Advantages for Preserving Spatial and Diagnostic Information

In medical imaging, the integrity of spatial and diagnostic information is paramount. Wavelet transform-based techniques have emerged as a powerful solution, uniquely capable of preserving critical image details that other methods often compromise. Unlike traditional Fourier-based analyses that provide only global frequency information, wavelets offer multi-resolution analysis, allowing for the simultaneous examination of an image's global structure and local fine details. This capability is fundamental for clinical applications, where the preservation of edges, textures, and subtle pathological features directly impacts diagnostic accuracy. This document outlines the core advantages of wavelet transforms and provides detailed protocols for their application in medical imaging research, supporting a broader thesis on their transformative role in the field.

Core Advantages and Quantitative Performance

The principal advantage of wavelet transforms lies in their ability to perform localized frequency analysis. An image is decomposed into different frequency sub-bands at multiple scales, allowing for targeted processing. Clinically significant high-frequency components, such as tissue boundaries and micro-calcifications, can be preserved or enhanced, while noise in similar frequency ranges can be selectively attenuated.

The table below summarizes quantitative evidence demonstrating the effectiveness of wavelet-based methods across diverse medical imaging tasks.

Table 1: Quantitative Performance of Wavelet-Based Methods in Medical Imaging

| Application Area | Key Methodology | Reported Performance Metrics | Preservation of Diagnostic Information |

|---|---|---|---|

| Hyperspectral Data Compression [17] | Daubechies wavelet transformation with spectral cropping and scale matching. | Achieved up to 32× compression (96.88% reduction) with minimal loss of spectral/spatial data. | Preserved original wavelength scale for straightforward spectral interpretation; improved contrast and noise reduction. |

| Medical Image Compression [8] [18] | Hybrid DWT/Cross-Attention Learning & SWT/GLCM/SDAE frameworks. | Superior PSNR and SSIM vs. JPEG2000/BPG; PSNR up to 50.36 dB, MS-SSIM of 0.9999 [18]. | Cross-attention and texture-aware encoding dynamically prioritize clinically relevant regions (e.g., lesions). |

| MRI Brain Denoising [19] | Systematic evaluation of wavelets (e.g., bior6.8) with universal thresholding. | Optimal PSNR: 27.38 dB (σ=10); 25.25 dB (σ=15). Optimal SSIM: 0.647 (σ=10); 0.589 (σ=15). | Effectively preserved essential anatomical structures while removing Gaussian noise. |

| CT Image Denoising [11] | Discrete Wavelet Transform (DWT) with thresholding. | Achieved PSNR of 33.85 dB, SSIM of 0.7194 at noise level σ=10, outperforming PCA, MSVD, and DCT. | Superior noise suppression while preserving critical edge information in LDCT images. |

| Brain Tumor Segmentation [20] | Adaptive Discrete Wavelet Decomposition & Iterative Axial Attention. | Average Dice scores of 85.0% (BraTS2020) and 88.1% (FeTS2022) with only 5.23 million parameters. | Preserved finer structural details of tumor sub-regions (e.g., enhanced tumors, edemas). |

Conceptual Workflow: Multi-Resolution Analysis

The following diagram illustrates the fundamental process of a 2D Discrete Wavelet Transform (DWT) for image analysis, which enables the preservation of spatial-diagnostic information.

Multi-Scale Wavelet Decomposition Workflow: This process shows how an image is recursively separated into approximation and detail coefficients, enabling analysis and processing at multiple resolutions.

The Scientist's Toolkit: Research Reagent Solutions

Successful implementation of wavelet-based medical image analysis requires a combination of computational tools and data resources.

Table 2: Essential Research Toolkit for Wavelet-Based Medical Imaging

| Tool/Reagent | Function & Utility | Exemplars & Notes |

|---|---|---|

| Wavelet Families | Basis functions for decomposition; choice impacts smoothness, symmetry, and reconstruction. | Daubechies (dbN): Compact support, orthogonal [17] [19]. Biorthogonal (biorN.N): Linear phase, symmetry ideal for denoising [19]. Symlets (symN): Near-symmetry, good for general analysis [19]. |

| Thresholding Functions | Nonlinear operators to suppress noise in wavelet domain. | Soft Thresholding: Continuous shrinkage, smoother results [19] [21]. Hard Thresholding: Preserves large coefficients better but can be discontinuous [19] [21]. |

| Performance Metrics | Quantify denoising, compression, and segmentation efficacy. | PSNR: Measures noise suppression [19]. SSIM/MS-SSIM: Assesses perceptual structural fidelity [8] [18] [19]. Dice Score: Evaluates segmentation accuracy [20]. |

| Benchmark Datasets | Standardized data for training, validation, and comparative analysis. | Brain MRIs: BraTS2020 [14] [20], IXI [14] [10]. General Compression: DIV2K, CLIC [18]. CT Scans: SARS-CoV-2 CT-scan dataset [11]. |
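Of the performance metrics listed in the toolkit, the Dice score is the simplest to state exactly: twice the overlap between two binary masks divided by the sum of their sizes. A minimal sketch follows; the function name `dice_score` is illustrative, and returning 1.0 when both masks are empty is a convention assumed here, since the formula is undefined in that case.

```python
import numpy as np

def dice_score(pred_mask, true_mask):
    """Dice coefficient between two binary masks of equal shape."""
    pred = np.asarray(pred_mask, dtype=bool)
    true = np.asarray(true_mask, dtype=bool)
    denom = pred.sum() + true.sum()
    if denom == 0:
        return 1.0   # both masks empty: treated as perfect agreement (assumed convention)
    return float(2.0 * np.logical_and(pred, true).sum() / denom)

# Prediction covers two pixels, ground truth one, with one pixel in common.
pred = np.array([[1, 1], [0, 0]])
true = np.array([[1, 0], [0, 0]])
print(dice_score(pred, true))   # 2 * 1 / (2 + 1)
```

For multi-class tumor segmentation, the score is typically computed per sub-region and then averaged, which is how figures such as average Dice scores across datasets are usually obtained.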

Detailed Experimental Protocols

Protocol 1: Wavelet-Based Medical Image Denoising

This protocol is adapted from systematic evaluations for denoising MRI brain images and CT scans [19] [11].

1. Objectives: To effectively suppress additive Gaussian noise in medical images while preserving critical diagnostic features such as edges and textures.

2. Materials and Reagents:

- Datasets: Brain tumor MRI dataset from figshare (3,064 images) [11] or a public SARS-CoV-2 CT-scan dataset [11].

- Software: Python with the PyWavelets (pywt) library, NumPy, OpenCV, or equivalent MATLAB toolboxes.

- Wavelet Options: Daubechies (db3), Symlet (sym4), or Biorthogonal (bior6.8) [19].

3. Experimental Procedure:

1. Preprocessing: Load the medical image. Normalize pixel intensities to a [0, 255] range if required [19].

2. Noise Simulation (For Validation): To a clean image, add Gaussian noise with mean (μ) = 0 and standard deviation (σ) = 10, 15, or 25 to simulate realistic noise conditions [19].

3. Wavelet Decomposition: Apply a 2-level 2D Discrete Wavelet Transform (DWT) using a selected wavelet (e.g., bior6.8) [19]. This generates one approximation sub-band (LL) and three detail sub-bands (LH, HL, HH) per level.

4. Thresholding:

* Calculate Threshold: Compute the universal threshold, τ, for each detail sub-band using the formula: τ = σ_noise * sqrt(2 * ln(N)), where σ_noise is the noise standard deviation, ln is the natural logarithm, and N is the number of coefficients in the sub-band [19].

* Apply Threshold Function: Apply soft thresholding to the detail coefficients (LH, HL, HH) of all decomposition levels. Soft thresholding is defined as: c_hat = sign(c) * max(0, |c| - τ) [19].

5. Image Reconstruction: Perform an inverse DWT using the original approximation coefficients and the modified (thresholded) detail coefficients to reconstruct the denoised image.

4. Data Analysis:

* Calculate PSNR and SSIM between the denoised image and the clean ground truth image [19] [11].

* For σ = 15, the target PSNR and SSIM using bior6.8 with universal thresholding should be approximately 25.25 dB and 0.589, respectively [19].
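The procedure above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation evaluated in [19]: a single-level orthonormal Haar transform written by hand stands in for the 2-level bior6.8 decomposition (which in practice would come from PyWavelets), the noise level σ is assumed known, and the names `haar_dwt2`, `haar_idwt2`, `soft_threshold`, `denoise`, and `psnr` are illustrative.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT (both image sides must be even)."""
    lo = (x[0::2, :] + x[1::2, :]) / SQRT2   # low-pass over adjacent row pairs
    hi = (x[0::2, :] - x[1::2, :]) / SQRT2   # high-pass over adjacent row pairs
    ll = (lo[:, 0::2] + lo[:, 1::2]) / SQRT2
    lh = (lo[:, 0::2] - lo[:, 1::2]) / SQRT2
    hl = (hi[:, 0::2] + hi[:, 1::2]) / SQRT2
    hh = (hi[:, 0::2] - hi[:, 1::2]) / SQRT2
    return ll, (lh, hl, hh)                  # sub-band naming conventions vary

def haar_idwt2(ll, details):
    """Inverse of haar_dwt2."""
    lh, hl, hh = details
    rows, cols = ll.shape
    lo = np.empty((rows, 2 * cols))
    hi = np.empty((rows, 2 * cols))
    lo[:, 0::2], lo[:, 1::2] = (ll + lh) / SQRT2, (ll - lh) / SQRT2
    hi[:, 0::2], hi[:, 1::2] = (hl + hh) / SQRT2, (hl - hh) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[0::2, :], x[1::2, :] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x

def soft_threshold(c, tau):
    """c_hat = sign(c) * max(0, |c| - tau)."""
    return np.sign(c) * np.maximum(np.abs(c) - tau, 0.0)

def denoise(noisy, sigma):
    """Shrink the detail sub-bands with the universal threshold, keep LL."""
    ll, details = haar_dwt2(noisy)
    shrunk = []
    for band in details:
        tau = sigma * np.sqrt(2.0 * np.log(band.size))  # universal threshold
        shrunk.append(soft_threshold(band, tau))
    return haar_idwt2(ll, tuple(shrunk))

def psnr(ref, test, peak=255.0):
    mse = np.mean((ref - test) ** 2)
    return 10.0 * np.log10(peak ** 2 / mse)

# Synthetic piecewise-constant "image" plus Gaussian noise (sigma = 15).
rng = np.random.default_rng(0)
clean = np.full((64, 64), 50.0)
clean[:, 32:] = 200.0
sigma = 15.0
noisy = clean + rng.normal(0.0, sigma, clean.shape)
denoised = denoise(noisy, sigma)
psnr_noisy, psnr_denoised = psnr(clean, noisy), psnr(clean, denoised)
```

On real data the noise level is usually not known in advance and has to be estimated, and SSIM would be computed with an established implementation rather than by hand.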

Protocol 2: Wavelet-Based Compression of Hyperspectral Imaging (HSI) Data

This protocol is based on a scale-preserving compression method for VNIR and SWIR hyperspectral data [17].

1. Objectives: To achieve high-compression ratios for large HSI datasets while preserving the original wavelength scale and critical spectral-spatial information.

2. Materials and Reagents:

- Data: Hyperspectral data cubes (e.g., medical HSI for diagnostic purposes).

- Software: Python with PyWavelets, NumPy, and SciPy.

- Wavelet: Daubechies wavelets.

3. Experimental Procedure:

1. Spectral Wavelet Transformation: Apply a 1D wavelet transform along the spectral dimension of the HSI data cube for dimensionality reduction [17].

2. Spectral Cropping: Eliminate spectral bands with low-intensity signals, which contribute less diagnostically relevant information [17].

3. Scale Matching: Implement a mapping function to ensure the compressed data's wavelength scale accurately corresponds to the original data, enabling direct spectral interpretation [17].

4. Encoding & Storage: Use standard entropy coding (e.g., Huffman coding) on the processed wavelet coefficients before storage or transmission [17].

4. Data Analysis:

* Calculate the compression ratio (original size / compressed size).

* Evaluate spectral fidelity by comparing extracted spectral signatures from original and compressed data.

* Assess spatial feature retention using metrics like SSIM.

* The target is up to 32× compression with minimal loss of important data [17].
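As a rough illustration of how a spectral wavelet transform can reach a 32× reduction, the sketch below keeps only the Haar approximation along the spectral axis for five levels. It is an assumption-laden toy, not the method of [17]: the Haar filter, the synthetic cube, the hypothetical band centers, and the function names are all illustrative, and spectral cropping and entropy coding are omitted.

```python
import numpy as np

def spectral_haar_approx(cube, levels):
    """Keep only the Haar approximation along the last (spectral) axis."""
    out = cube.astype(float)
    for _ in range(levels):
        if out.shape[-1] % 2:
            raise ValueError("band count must be divisible by 2**levels")
        # Orthonormal Haar low-pass: pairwise sum of adjacent bands / sqrt(2).
        out = (out[..., 0::2] + out[..., 1::2]) / np.sqrt(2.0)
    return out

def compression_ratio(original, compressed):
    """Element-count ratio; equals the byte ratio when dtypes match."""
    return original.size / compressed.size

levels = 5
cube = np.random.default_rng(1).random((8, 8, 64))   # synthetic (rows, cols, bands)
compressed = spectral_haar_approx(cube, levels)
ratio = compression_ratio(cube, compressed)

# Scale matching (illustrative): each kept coefficient summarizes a block of
# 2**levels adjacent bands, so map it to the mean wavelength of that block.
wavelengths = np.linspace(400.0, 1000.0, 64)          # hypothetical band centers, nm
matched = wavelengths.reshape(-1, 2 ** levels).mean(axis=1)
```

Each level halves the number of spectral samples, so five levels give 2^5 = 32 times fewer values per pixel before any entropy coding is applied.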

Protocol 3: Integration of Wavelets with Deep Learning for Segmentation

This protocol leverages a lightweight framework for 3D brain tumor segmentation that integrates adaptive discrete wavelet decomposition [20].

1. Objectives: To improve the accuracy and efficiency of segmenting tumor sub-regions from 3D MRI data by capturing multi-scale features in the frequency domain.

2. Materials and Reagents:

- Datasets: BraTS2020 or FeTS2022 multi-modal MRI datasets [20].

- Model Framework: A U-Net-like architecture with a custom wavelet decomposition module.

- Wavelet: 3D Discrete Wavelet Transform.

3. Experimental Procedure:

1. Network Architecture:

* Encoder: Replace standard pooling/downsampling layers with an Adaptive Wavelet Decomposition (AWD) module. This module uses 3D DWT to decompose the feature maps into low-frequency (approximation) and high-frequency (detail) sub-bands, preserving multi-scale information without data loss [20].

* Bottleneck: Incorporate an attention mechanism (e.g., Iterative Axial Factorization Attention) to model long-range dependencies efficiently [20].

* Decoder: Use a multi-scale feature fusion decoder (MSFFD) that progressively upsamples and aligns features from the encoder and bottleneck using skip connections [20].

2. Training: Train the model using a combined loss function (e.g., Dice loss and Cross-Entropy loss) on annotated 3D MRI volumes.

4. Data Analysis:

* Evaluate segmentation performance using the Dice Similarity Coefficient for the whole tumor (WT), tumor core (TC), and enhancing tumor (ET).

* The target Dice scores on BraTS2020 should be competitive with state-of-the-art methods, approximately 85.0%, while maintaining a low parameter count (~5.23 million) [20].
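The Dice evaluation for the three tumor regions can be sketched as follows. The label grouping (1, 2, 4 for whole tumor; 1, 4 for tumor core; 4 for enhancing tumor) follows the usual BraTS annotation scheme and is stated here as an assumption, since the protocol does not spell it out; `dice` and `brats_dice` are illustrative names.

```python
import numpy as np

# Assumed BraTS label convention: 1 = necrotic/non-enhancing core,
# 2 = peritumoral edema, 4 = enhancing tumor, 0 = background.
REGIONS = {"WT": (1, 2, 4), "TC": (1, 4), "ET": (4,)}

def dice(pred, target, eps=1e-8):
    """Dice = 2|P ∩ T| / (|P| + |T|) for two binary masks."""
    pred, target = pred.astype(bool), target.astype(bool)
    inter = np.logical_and(pred, target).sum()
    return (2.0 * inter + eps) / (pred.sum() + target.sum() + eps)

def brats_dice(pred_labels, true_labels):
    """Dice per tumor region from integer label volumes."""
    return {
        name: dice(np.isin(pred_labels, labels), np.isin(true_labels, labels))
        for name, labels in REGIONS.items()
    }

# Tiny synthetic 3D label volume: three slabs carrying labels 1, 2 and 4.
truth = np.zeros((4, 4, 4), dtype=int)
truth[0], truth[1], truth[2] = 1, 2, 4
perfect = brats_dice(truth, truth)

missed = truth.copy()
missed[2] = 0                      # prediction misses the enhancing tumor
partial = brats_dice(missed, truth)
```

In the second case the whole-tumor Dice drops to 2·32 / (32 + 48) = 0.8 while the enhancing-tumor Dice falls to essentially zero, which is why the three regions are reported separately.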

Future Directions

The integration of wavelet transforms with deep learning represents the frontier of medical image analysis. Future research will focus on developing end-to-end learnable wavelet kernels, where the optimal wavelet bases for specific imaging modalities or diagnostic tasks are learned directly from the data, rather than being pre-defined. Furthermore, the application of hybrid wavelet-attention models, as seen in segmentation and synthesis tasks [14] [20], is poised to expand into other areas like disease prognostication and treatment monitoring, enhancing the role of wavelets in computational pathology and personalized medicine.

Key Wavelet Families and Their Characteristics for Medical Image Processing

Wavelet transforms have become a cornerstone of modern medical image processing, providing a powerful mathematical framework for multi-resolution analysis. Unlike traditional Fourier methods, wavelets excel at representing local features in both spatial and frequency domains, making them ideal for analyzing complex anatomical structures and pathological signatures in medical images [22]. The selection of an appropriate wavelet family, each with distinct characteristics, is critical for optimizing performance in applications ranging from denoising and compression to feature extraction and image synthesis [23]. This section surveys the key wavelet families and sets out detailed experimental protocols for applying them in medical imaging research.

Key Wavelet Families and Their Mathematical Properties

Fundamental Properties of Wavelets

A wavelet family is defined by its mother wavelet ψ(x), which must satisfy specific mathematical conditions to ensure stable inversion: normalized energy (∫|ψ(x)|²dx = 1), absolute integrability (∫|ψ(x)|dx < ∞), and a zero mean (∫ψ(x)dx = 0) [22]. These conditions enable the wavelet transform to analyze signal fluctuations without altering the total signal flux. Additional properties tailored to specific applications include continuity, differentiability, compact support, and vanishing moments [22].
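As a worked check of the three integral conditions above, consider the Haar mother wavelet, written here in its standard form on the unit interval:

$$
\psi(x) = \begin{cases} 1, & 0 \le x < \tfrac{1}{2} \\ -1, & \tfrac{1}{2} \le x < 1 \\ 0, & \text{otherwise} \end{cases}
$$

$$
\int \psi(x)\,dx = \tfrac{1}{2} - \tfrac{1}{2} = 0, \qquad
\int \lvert\psi(x)\rvert\,dx = 1 < \infty, \qquad
\int \lvert\psi(x)\rvert^{2}\,dx = 1 .
$$

All three conditions hold, so even this discontinuous, piecewise-constant function qualifies as a wavelet; smoother families differ in the additional properties (support width, vanishing moments, symmetry), not in these basic requirements.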

Prominent Wavelet Families in Medical Imaging

Table 1: Characteristics of Major Wavelet Families in Medical Imaging

| Wavelet Family | Key Members | Symmetry | Vanishing Moments | Support Width | Orthogonality | Primary Medical Applications |

|---|---|---|---|---|---|---|

| Haar | haar, db1 | Symmetric | 1 | 1 | Orthogonal | Image fusion [23], didactic visualization [22] |

| Daubechies | db2-db20 | Asymmetric | N (order) | 2N-1 | Orthogonal | Denoising [6], compression [24] |

| Symlets | sym2-sym20 | Near symmetric | N (order) | 2N-1 | Orthogonal | General processing [23] |

| Coiflets | coif1-coif5 | Near symmetric | 2N (order) | 6N-1 | Orthogonal | Denoising [6] |

| Biorthogonal | bior1.1-bior6.8 | Symmetric | Customizable | Variable | Biorthogonal | Image reconstruction [23] |

| Reverse Biorthogonal | rbio1.1-rbio6.8 | Symmetric | Customizable | Variable | Biorthogonal | CT-MRI fusion [23] |

| Discrete Meyer | dmey | Symmetric | - | - | Orthogonal | Specialized analysis [23] |

The Haar wavelet is one of the simplest and oldest orthonormal wavelets, with a discontinuous structure resembling a step function. Its scaling function φ(t) equals 1 for 0 ≤ t < 1 and 0 elsewhere, providing a piecewise constant approximation that is valuable for didactic purposes but limited in representing continuous signals smoothly [22] [23].

The Daubechies family (dbN), developed by Ingrid Daubechies, offers compactly supported orthonormal wavelets with increasing smoothness as the order N increases. The db1 variant is functionally identical to the Haar wavelet. Higher-order Daubechies wavelets (e.g., db2-db20) provide better frequency localization and are frequently employed in medical image denoising and compression tasks [22] [6] [24].

Biorthogonal wavelets utilize different wavelet functions for decomposition and reconstruction, achieving perfect reconstruction while maintaining symmetry and linear phase properties critical for image reconstruction without artifact introduction [23]. This family is particularly valuable in medical image compression applications where visual fidelity must be preserved [24].

Symlets and Coiflets represent modifications of the Daubechies family optimized for increased symmetry while maintaining orthogonality. Symlets offer near-symmetry, while Coiflets were designed at the request of Ronald Coifman to feature scaling functions with vanishing moments, making them suitable for denoising applications where phase preservation is important [6] [23].

Wavelet Selection Guidelines

Selection of an appropriate wavelet family depends on specific application requirements:

- Denoising: Daubechies (db2) and Coiflets have demonstrated superior performance in medical image denoising, particularly with undecimated discrete wavelet transform variants [6].

- Image Fusion: Haar, biorthogonal 1.1 (bior1.1), and reverse biorthogonal 1.1 (rbio1.1) have outperformed other families in CT-MRI fusion tasks [23].

- Compression: Biorthogonal wavelets are preferred for their symmetric, linear-phase properties that minimize reconstruction artifacts [24].

- Feature Extraction: Wavelets with higher vanishing moments (e.g., higher-order Daubechies) better represent complex textures in radiomics analysis [25].

Experimental Protocols for Medical Image Processing

Protocol 1: Wavelet-Based Medical Image Denoising

This protocol details a modified undecimated discrete wavelet transform (UDWT) approach for medical image denoising, combining shift invariance with effective noise suppression [6].

Research Reagent Solutions

- Software Environment: MATLAB or Python with PyWavelets library.

- Wavelet Function: Daubechies order 2 (db2) wavelet filters [6].

- Input Data: Medical images (e.g., mammograms, CT slices) in DICOM format.

- Computational Resources: Standard workstation with sufficient RAM for image series processing.

Procedure

1. Image Decomposition: Perform a 2-level 2D stationary wavelet decomposition on the noisy medical image using db2 filters to obtain approximation (LL) and detail (LH, HL, HH) coefficients for each level without downsampling.

2. Hierarchical Correlation Calculation: For each detail subband (LH, HL, HH), compute the hierarchical correlation between level 1 and level 2 coefficients as the absolute value of their element-wise product: Correlation = |Coef_lev1 × Coef_lev2| [6].

3. Adaptive Threshold Determination:

- Generate correlation images for each detail subband using the formula from step 2.

- Find the maximum correlation value in each row of the correlation image.

- Compute the mean (Mean_max) of these maximum values.

- Eliminate correlation values greater than 0.8 × Mean_max (considered signal).

- Calculate the standard deviation (σ) of the remaining correlation values.

- Set the threshold: THR = 1.6 × σ [6].

4. Coefficient Thresholding: Apply the determined threshold to the level 1 detail coefficients: NewCoef_lev1 = Coef_lev1 if |Coef_lev1 × Coef_lev2| ≥ THR, and 0 otherwise [6].

5. Image Reconstruction: Perform an inverse stationary wavelet transform using the level 2 approximation coefficients and the modified level 1 detail coefficients to reconstruct the denoised image.

UDWT Denoising Workflow: This diagram illustrates the step-by-step process for medical image denoising using a modified undecimated discrete wavelet transform approach with adaptive thresholding.
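The adaptive threshold and coefficient selection steps of this protocol can be sketched for a single detail subband as below. This is a minimal sketch, assuming the level 1 and level 2 coefficient arrays have the same shape (as they do for an undecimated transform, for example from PyWavelets' `swt2`); here they are replaced by synthetic random arrays, and `correlation_threshold` is an illustrative name rather than a function from [6].

```python
import numpy as np

def correlation_threshold(coef_lev1, coef_lev2):
    """Keep level 1 coefficients whose inter-level correlation reaches THR."""
    corr = np.abs(coef_lev1 * coef_lev2)        # hierarchical correlation image
    mean_max = corr.max(axis=1).mean()          # mean of the per-row maxima
    noise_like = corr[corr <= 0.8 * mean_max]   # larger values are treated as signal
    thr = 1.6 * noise_like.std()                # THR = 1.6 * sigma
    new_lev1 = np.where(corr >= thr, coef_lev1, 0.0)
    return new_lev1, thr

# Synthetic stand-ins for one detail subband at two levels: independent
# noise everywhere, plus one strong coefficient present at both levels.
rng = np.random.default_rng(2)
lev1 = rng.normal(size=(64, 64))
lev2 = rng.normal(size=(64, 64))
lev1[10, 20] = lev2[10, 20] = 10.0

new_lev1, thr = correlation_threshold(lev1, lev2)
kept_fraction = np.count_nonzero(new_lev1) / new_lev1.size
```

Features that persist across scales produce large products and survive, while noise, which is weakly correlated between levels, mostly falls below the threshold and is set to zero.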

Protocol 2: Wavelet Radiomics Feature Extraction for Tumor Classification

This protocol describes a methodology for extracting wavelet-domain radiomics features from multiphase CT images to improve classification of hepatocellular carcinoma (HCC) versus non-HCC focal liver lesions [25].

Research Reagent Solutions

- Medical Imaging Data: Multiphase CT scans (non-contrast, arterial, venous, delayed phases).

- Segmentation Tools: ITK-SNAP or 3D Slicer for manual ROI delineation.

- Wavelet Transform: PyWavelets or MATLAB Wavelet Toolbox.

- Feature Selection: Logistic sparsity-based model with Bayesian optimization.

- Classification Algorithms: Support Vector Machines (SVM), Multilayer Perceptron (MLP).

Procedure

1. Data Preparation and ROI Segmentation:

- Obtain multiphase CT scans following standardized acquisition protocols.

- Manually segment focal liver lesions across all phases to create 3D regions of interest (ROI) verified by experienced radiologists.

2. Multi-Domain Feature Extraction:

- Original Domain Features: Extract first-order statistics (mean, variance, skewness, kurtosis) and second-order texture features (GLCM, GLRLM) from each CT phase.

- Wavelet Domain Features: Apply a 3D discrete wavelet transform to each ROI using Daubechies or Symlets filters. Extract identical feature sets from each wavelet subband (LLL, LLH, LHL, LHH, HLL, HLH, HHL, HHH).

3. Feature Combination and Selection:

- Concatenate original and wavelet-domain features into a comprehensive feature vector.

- Apply logistic sparsity-based feature selection with Bayesian optimization to identify the most discriminative features while handling the high feature-to-sample ratio.

4. Model Training and Validation:

- Train classifier models (SVM, MLP) using the selected wavelet radiomics features.

- Validate using cross-validation and independent test sets, evaluating performance with AUC, accuracy, sensitivity, and specificity metrics.

Wavelet Radiomics Analysis: This workflow demonstrates the process for extracting and analyzing wavelet-based radiomics features from multiphase CT images for hepatocellular carcinoma classification.
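The wavelet-domain feature extraction step can be illustrated with a small NumPy sketch. It makes several simplifying assumptions that are not part of [25]: a single-level orthonormal Haar transform replaces the Daubechies or Symlets filters, the ROI is a synthetic cubic volume with even side lengths, only the four first-order statistics are computed (GLCM and GLRLM texture features are omitted), and all function names are illustrative.

```python
import numpy as np

def haar_split(x, axis):
    """One orthonormal Haar step along `axis`; returns (low, high)."""
    even = np.take(x, np.arange(0, x.shape[axis], 2), axis=axis)
    odd = np.take(x, np.arange(1, x.shape[axis], 2), axis=axis)
    return (even + odd) / np.sqrt(2.0), (even - odd) / np.sqrt(2.0)

def haar_dwt3(volume):
    """Single-level 3D Haar DWT; returns the 8 subbands keyed 'LLL'..'HHH'."""
    bands = {"": volume.astype(float)}
    for axis in range(3):
        split = {}
        for name, data in bands.items():
            low, high = haar_split(data, axis)
            split[name + "L"], split[name + "H"] = low, high
        bands = split
    return bands

def first_order(x):
    """Mean, variance, skewness and (non-excess) kurtosis of a subband."""
    x = x.ravel()
    mu, var = x.mean(), x.var()
    z = (x - mu) / (np.sqrt(var) if var > 0 else 1.0)
    return {"mean": mu, "variance": var,
            "skewness": np.mean(z ** 3), "kurtosis": np.mean(z ** 4)}

# Synthetic stand-in for a segmented 3D lesion ROI.
roi = np.random.default_rng(3).normal(100.0, 20.0, size=(16, 16, 16))
subbands = haar_dwt3(roi)
features = {
    f"{band}_{stat}": value
    for band, data in subbands.items()
    for stat, value in first_order(data).items()
}
```

Eight subbands times four statistics give 32 wavelet-domain features per phase, which shows how quickly the feature count outgrows the sample count and why the sparsity-based selection step is needed.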

Protocol 3: Multi-Modal Medical Image Fusion

This protocol outlines a discrete wavelet transform-based approach for fusing CT and MRI images to combine complementary diagnostic information [23].

Research Reagent Solutions

- Input Images: Registered CT and MRI brain scans (spatially aligned).

- Wavelet Families: Haar (db1), bior1.1, rbio1.1 for optimal fusion quality.

- Fusion Rule: Maximum selection rule for coefficient combination.

- Evaluation Metrics: Qualitative assessment and quantitative metrics (classical and gradient information).

Procedure

1. Image Registration: Ensure precise spatial alignment between CT and MRI images using rigid or deformable registration methods.

2. Wavelet Decomposition: Apply a 2-level 2D discrete wavelet transform to both registered CT and MRI images using the selected wavelet filters (e.g., Haar, bior1.1, rbio1.1).

3. Coefficient Fusion: Combine corresponding wavelet coefficients according to sub-band type:

- For approximation coefficients (low-frequency): Compute a weighted average based on local energy.

- For detail coefficients (high-frequency): Apply the maximum selection rule, keeping the coefficient with the larger absolute value.

4. Image Reconstruction: Perform an inverse discrete wavelet transform on the fused coefficients to create the composite image.

5. Quality Assessment: Evaluate fusion quality through:

- Qualitative Analysis: Visual assessment of anatomical structure preservation and feature integration.

- Quantitative Analysis: Calculate classical metrics (entropy, mutual information) and gradient-based metrics to evaluate edge preservation and information transfer.
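The decomposition, coefficient fusion, and reconstruction steps can be sketched as follows. This is a simplified illustration rather than the evaluated pipeline of [23]: it uses one decomposition level instead of two, a hand-written orthonormal Haar transform, and a single global energy weight per image in place of a local-energy map; the random arrays stand in for registered CT and MRI slices.

```python
import numpy as np

SQRT2 = np.sqrt(2.0)

def haar_dwt2(x):
    """Single-level orthonormal 2D Haar DWT (even image sides assumed)."""
    lo = (x[0::2, :] + x[1::2, :]) / SQRT2
    hi = (x[0::2, :] - x[1::2, :]) / SQRT2
    return ((lo[:, 0::2] + lo[:, 1::2]) / SQRT2,
            ((lo[:, 0::2] - lo[:, 1::2]) / SQRT2,
             (hi[:, 0::2] + hi[:, 1::2]) / SQRT2,
             (hi[:, 0::2] - hi[:, 1::2]) / SQRT2))

def haar_idwt2(ll, details):
    """Inverse of haar_dwt2."""
    d1, d2, d3 = details
    rows, cols = ll.shape
    lo = np.empty((rows, 2 * cols))
    hi = np.empty((rows, 2 * cols))
    lo[:, 0::2], lo[:, 1::2] = (ll + d1) / SQRT2, (ll - d1) / SQRT2
    hi[:, 0::2], hi[:, 1::2] = (d2 + d3) / SQRT2, (d2 - d3) / SQRT2
    x = np.empty((2 * rows, 2 * cols))
    x[0::2, :], x[1::2, :] = (lo + hi) / SQRT2, (lo - hi) / SQRT2
    return x

def fuse(img_a, img_b):
    """Energy-weighted average for LL, max-absolute selection for details."""
    ll_a, det_a = haar_dwt2(img_a)
    ll_b, det_b = haar_dwt2(img_b)
    e_a, e_b = np.sum(ll_a ** 2), np.sum(ll_b ** 2)
    w_a = e_a / (e_a + e_b) if (e_a + e_b) > 0 else 0.5   # global simplification
    ll = w_a * ll_a + (1.0 - w_a) * ll_b
    details = tuple(np.where(np.abs(da) >= np.abs(db), da, db)
                    for da, db in zip(det_a, det_b))
    return haar_idwt2(ll, details)

rng = np.random.default_rng(4)
ct = rng.random((32, 32)) * 255.0    # synthetic stand-in for a registered CT slice
mri = rng.random((32, 32)) * 255.0   # synthetic stand-in for a registered MRI slice
fused = fuse(ct, mri)
```

A useful sanity check for any fusion rule of this kind is that fusing an image with itself returns the image unchanged.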

Advanced Applications in Medical Imaging

Medical Image Synthesis Using Wavelet Encoding

Recent advances in multi-modal medical image synthesis have incorporated wavelet transforms within deep learning architectures. The Dual-branch Wavelet Encoding and Deformable Feature Interaction GAN (DWFI-GAN) utilizes wavelet multi-scale downsampling (Wavelet-MS-Down) to decompose input modalities into low-frequency contours and high-frequency details [14]. This approach enables near-lossless feature dimensionality reduction while preserving global structural information and fine-grained textures, significantly improving the synthesis of missing modalities in incomplete clinical datasets.

Wavelet-Based Medical Image Compression

Hybrid DWT-Vector Quantization (DWT-VQ) techniques have demonstrated promising results in medical image compression, effectively balancing compression ratios with diagnostic quality preservation [24]. The process involves: (1) speckle noise reduction in ultrasound imagery using specialized filters, (2) discrete wavelet transform decomposition, (3) coefficient thresholding, (4) vector quantization, and (5) Huffman encoding of the quantized coefficients. This approach maintains medically tolerable perceptual quality while significantly reducing storage requirements.

Enhancement of Image Registration

Stationary Wavelet Transform (SWT) has been successfully integrated with mutual information for improved planning CT and cone beam-CT (CBCT) image registration in radiotherapy [26]. The translationally invariant property of SWT helps highlight edge features in noisy CBCT images, while the incorporation of gradient information compensates for the lack of spatial information in traditional mutual information approaches, resulting in enhanced registration accuracy and robustness.

Wavelet families offer diverse mathematical properties that can be strategically leveraged to address specific challenges in medical image processing. The Haar, Daubechies, and biorthogonal families provide distinct trade-offs between symmetry, support width, and vanishing moments that directly impact performance in denoising, feature extraction, and image fusion tasks. The experimental protocols detailed in this article provide researchers with standardized methodologies for implementing wavelet-based techniques, while the advanced applications demonstrate the ongoing innovation in integrating wavelet transforms with modern deep learning approaches. As medical imaging continues to evolve, wavelet-based methods remain essential tools for enhancing diagnostic capability, improving computational efficiency, and extracting clinically relevant information from complex medical image data.

Cutting-Edge Applications in Medical Image Analysis and AI

In medical imaging, the dual imperative of reducing noise while preserving crucial diagnostic features such as edges and textures is a fundamental challenge. Noise, introduced during image acquisition or transmission, can obscure subtle pathological details, potentially leading to misinterpretation. Within the broader context of wavelet transform-based techniques for medical imaging research, this document provides detailed application notes and experimental protocols. It is designed to assist researchers and scientists in implementing robust denoising frameworks that effectively balance noise suppression with the preservation of structural integrity, a balance critical for applications in diagnostics and drug development.

Performance Analysis of Denoising Techniques

The efficacy of denoising algorithms is quantitatively assessed using standard image quality metrics. The following tables summarize the performance of various techniques across different imaging scenarios, providing a basis for algorithmic selection.

Table 1: Comparative Performance of Denoising Algorithms on Medical Images (MRI & HRCT) [27]

| Algorithm | PSNR (dB) | SSIM | Computational Efficiency | Optimal Noise Level |

|---|---|---|---|---|

| BM3D | High | High | Moderate | Low, Moderate |

| DnCNN (Deep Learning) | High | High | Low | High |

| WNNM | Moderate | Moderate | Low | High |

| EPLL | Moderate | Moderate | Low | High |

| NLM | Moderate | Moderate | Low | Low |

| Bilateral Filter | Low | Low | High | Low |

| Guided Filter | Low | Low | High | Low |

| FoE | Low | Low | Moderate | Low |

Table 2: Quantitative Results of Multi-Scale Denoising Methods [28]

| Method | PSNR (dB) | SSIM | Computational Complexity (seconds) |

|---|---|---|---|

| Gaussian Pyramid (GP) | 36.80 | 0.94 | 0.0046 |

| Wavelet (Coiflet4) | 34.12 | 0.91 | 0.0081 |

| Wavelet (Daubechies db4) | 33.85 | 0.90 | 0.0079 |

| Wavelet (Haar) | 32.98 | 0.89 | 0.0075 |

| Wavelet (Symlet4) | 33.91 | 0.90 | 0.0080 |

Table 3: Wavelet Thresholding Functions for Image Denoising [29] [21]

| Threshold Function | Mathematical Form | Advantages | Disadvantages |

|---|---|---|---|

| Hard | $θ_H(x) = \begin{cases} 0 & \text{if } \lvert x \rvert \leq δ \\ x & \text{if } \lvert x \rvert > δ \end{cases}$ | Simplicity, preserves strong edges | Introduces artifacts (e.g., pseudo-Gibbs phenomena) |

| Soft | $θ_S(x) = \begin{cases} 0 & \text{if } \lvert x \rvert \leq δ \\ \text{sgn}(x)(\lvert x \rvert - δ) & \text{if } \lvert x \rvert > δ \end{cases}$ | Smoother results, fewer artifacts | Can over-smooth details, leading to edge blurring |

| Median (Recommended) | N/A | Stability and convenience, good detail preservation | - |

| Smooth Garrote | $θ_{SG}(x) = \dfrac{x^{2n+1}}{x^{2n} + δ^{2n}}$ | Compromise between hard and soft thresholding | Parameter $n$ requires tuning |
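The three threshold functions with closed forms in Table 3 translate directly into NumPy. The sketch below is illustrative (function names are not from [29] or [21]); the median function is left out because the table gives no formula for it.

```python
import numpy as np

def hard_threshold(x, delta):
    """Keep coefficients with |x| > delta unchanged, zero the rest."""
    x = np.asarray(x, dtype=float)
    return np.where(np.abs(x) > delta, x, 0.0)

def soft_threshold(x, delta):
    """Zero small coefficients and shrink the rest toward zero by delta."""
    x = np.asarray(x, dtype=float)
    return np.sign(x) * np.maximum(np.abs(x) - delta, 0.0)

def smooth_garrote(x, delta, n=1):
    """x^(2n+1) / (x^(2n) + delta^(2n)); requires delta > 0."""
    x = np.asarray(x, dtype=float)
    return x ** (2 * n + 1) / (x ** (2 * n) + delta ** (2 * n))

coeffs = np.array([-3.0, -0.5, 0.0, 0.5, 3.0])
hard = hard_threshold(coeffs, 1.0)
soft = soft_threshold(coeffs, 1.0)
garrote = smooth_garrote(coeffs, 1.0, n=1)
```

With δ = 1 the coefficient 3.0 maps to 3.0 (hard), 2.0 (soft), and 2.7 (smooth garrote, n = 1), which shows the garrote sitting between the other two for large coefficients; increasing n pushes it toward the hard rule.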

Experimental Protocols

Protocol 1: Wavelet-Gaussian Denoising with Adaptive Edge Detection (EDAW)

This protocol outlines the steps for a hybrid method that integrates wavelet denoising with an adaptive edge detection framework, particularly effective for images corrupted by Gaussian noise [29].

1. Image Denoising Module:

- Input: Noisy medical image (e.g., MRI, CT).

- Wavelet Decomposition: Decompose the noisy image using a selected wavelet family (e.g., Daubechies 'db4', Symlet 'sym4') over 3-5 decomposition levels to obtain approximation (LL) and detail (LH, HL, HH) coefficients [21].

- Thresholding: Apply the median thresholding function to the detail coefficients. Avoid hard thresholding to prevent the introduction of artifacts.

- Reconstruction: Perform an inverse wavelet transform on the thresholded coefficients to generate a denoised image.

2. Gradient Calculation & Non-Maximum Suppression (NMS):

- Gradient Computation: Compute the gradient magnitude ($G$) and direction ($θ$) of the denoised image using the Sobel operator: $G = \sqrt{G_X^2 + G_Y^2}$ and $θ = \arctan(G_Y / G_X)$, where $G_X$ and $G_Y$ are the gradients in the X and Y directions obtained using the Sobel kernels [29].

- Non-Maximum Suppression (NMS): Thin the edges to a single-pixel width by comparing the gradient magnitude of each pixel with its neighbors along the gradient direction ($θ$). Retain a pixel only if its magnitude is a local maximum.

3. Adaptive Thresholding and Edge Linking:

- Otsu's Method: Apply Otsu's algorithm to the gradient magnitude image to automatically determine an optimal global threshold ($T$) for separating edge and non-edge pixels [29].

- Hysteresis Thresholding: Use a dual-threshold approach (derived from the Otsu threshold) to identify strong and weak edge pixels. Finally, link weak edges to strong ones if they are connected, to form continuous edge contours.
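The gradient computation and Otsu thresholding in steps 2 and 3 can be sketched in plain NumPy. This is a minimal sketch under stated assumptions, not the EDAW implementation of [29]: borders are handled by edge replication, the Otsu search uses a 256-bin histogram of the gradient magnitude, and non-maximum suppression and hysteresis linking are omitted. The function names are illustrative.

```python
import numpy as np

def sobel_gradient(img):
    """Gradient magnitude and direction from the 3x3 Sobel kernels."""
    p = np.pad(img.astype(float), 1, mode="edge")
    # Right column minus left column, weighted 1-2-1 down the rows.
    gx = (p[:-2, 2:] + 2 * p[1:-1, 2:] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[1:-1, :-2] + p[2:, :-2])
    # Bottom row minus top row, weighted 1-2-1 across the columns.
    gy = (p[2:, :-2] + 2 * p[2:, 1:-1] + p[2:, 2:]) \
       - (p[:-2, :-2] + 2 * p[:-2, 1:-1] + p[:-2, 2:])
    return np.hypot(gx, gy), np.arctan2(gy, gx)

def otsu_threshold(values, bins=256):
    """Threshold that maximizes the between-class variance of a histogram."""
    hist, edges = np.histogram(values, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)                       # pixels at or below each bin
    w1 = w0[-1] - w0                           # pixels above each bin
    m0 = np.cumsum(hist * centers)
    mu0 = m0 / np.maximum(w0, 1)
    mu1 = (m0[-1] - m0) / np.maximum(w1, 1)
    between = w0 * w1 * (mu0 - mu1) ** 2
    return edges[np.argmax(between) + 1]

# Synthetic "denoised" image with one vertical step edge.
image = np.zeros((16, 16))
image[:, 8:] = 100.0
magnitude, theta = sobel_gradient(image)
T = otsu_threshold(magnitude)
edge_mask = magnitude > T
```

`np.arctan2` is used instead of a plain arctangent of the ratio so that the direction stays defined when the horizontal gradient is zero.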

Protocol 2: Statistical Wavelet Model for Denoising and Enhancement (SWM-DE)

This protocol describes a method that uses a statistical model within a Bayesian framework for joint denoising and enhancement, which automatically adapts to the image data without requiring explicit noise level estimation [30].

1. Wavelet Coefficient Modeling:

- Decomposition: Perform a multi-level wavelet decomposition of the noisy input image.

- MAP Estimation: Model the marginal distribution of the noise-free wavelet coefficients. Within a Bayesian framework, develop a Maximum A Posteriori (MAP) estimator. This estimator is used to derive the noise-free coefficient from the observed noisy coefficient, effectively suppressing noise while preserving signal.

2. Morphological Reconstruction for Enhancement:

- Adjustable Morphological Model: Apply an adjustable morphological reconstruction model to the initial denoised image. This step targets the removal of residual structured or unknown noises that the statistical step may have missed, while simultaneously preserving and enhancing image details.

3. Multi-Scale Reconstruction:

- Component Extraction: Decompose the processed image into several wavelet sub-bands to separate illumination (low-frequency) and detail (high-frequency) components.

- Inverse Transformation: Reconstruct the final enhanced, noise-free image by applying an inverse wavelet transform. This process yields an image with improved contrast and clarity, as measured by high EME (Measure of Enhancement) values [30].

Workflow Visualization

Wavelet-Based Denoising and Edge Detection

Statistical Wavelet Model (SWM-DE) Workflow

The Scientist's Toolkit: Research Reagent Solutions

Table 4: Essential Research Reagents and Computational Tools

| Item / Tool | Function / Description | Example Use Case |

|---|---|---|

| Wavelet Toolbox (MATLAB/Python) | Provides libraries for performing DWT, thresholding, and reconstruction with various wavelet families. | Core component for implementing Protocols 1 & 2 [29] [30]. |

| Discrete Wavelet Transform (DWT) | Multi-resolution analysis tool to decompose an image into frequency sub-bands (LL, LH, HL, HH). | Image decomposition for noise separation in the transform domain [21] [8]. |

| Daubechies (dbN), Symlets (symN) | Wavelet families offering a balance between smoothness and localization; chosen based on image characteristics. | db4 is often used for its orthogonality and simplicity; sym4 for near-symmetry [21]. |

| Median Threshold Function | A stable thresholding function that avoids the artifacts of hard thresholding and over-smoothing of soft thresholding. | Recommended for compressing noisy wavelet coefficients in the denoising module [29]. |

| Otsu Thresholding Algorithm | Automatic, data-driven method for optimal global threshold selection by maximizing inter-class variance. | Adaptive thresholding in edge detection to binarize the gradient magnitude image [29]. |

| Sobel Operator Kernels | 3x3 convolution kernels used to approximate the image gradient in the horizontal and vertical directions. | Calculating gradient magnitude and direction for edge detection in Protocol 1 [29]. |

| BM3D Algorithm | A high-performance, non-deep learning denoising algorithm that uses collaborative filtering in 3D transform groups. | A strong benchmark for comparing the performance of wavelet-based denoising methods [27]. |

| Medical Image Datasets (e.g., LIDC-IDRI, SIDD) | Publicly available datasets of medical images (CT, MRI) and real-world noisy images for validation. | Training and quantitative evaluation of denoising algorithms using metrics like PSNR and SSIM [28] [8]. |

Multi-modal medical image fusion and synthesis have emerged as critical technologies in modern healthcare, addressing the inherent limitations of individual imaging modalities. In clinical practice, Positron Emission Tomography (PET) images excel at highlighting functional metabolic activity, such as tumor metabolism, but suffer from limited spatial resolution. Conversely, Computed Tomography (CT) provides high-resolution anatomical structures, including bone and dense tissues, but offers weak representation of low-density lesions. Magnetic Resonance Imaging (MRI) delivers superior soft-tissue contrast. Individually, each modality presents an incomplete picture; together, they provide complementary information essential for comprehensive diagnosis, treatment planning, and therapy monitoring [13].

The integration of these diverse data types through fusion and synthesis creates a more complete representation of pathology and physiology. This enables more accurate tumor localization, improved radiotherapy targeting, enhanced surgical planning, and better treatment response assessment. Within this technological landscape, wavelet transform-based techniques have proven particularly valuable due to their ability to efficiently separate and process an image's structural information (low-frequency components) from its fine details and textures (high-frequency components) [13] [8] [14]. This multi-resolution analysis capability makes wavelet methods ideally suited for handling the distinct, complementary information present in PET, CT, and MRI scans.

Wavelet Transform Fundamentals for Medical Imaging

Wavelet transforms provide a mathematical framework for decomposing images into multiple frequency sub-bands at different scales. Unlike traditional Fourier transforms that provide only frequency information, wavelets localize information in both frequency and space, making them exceptionally suitable for analyzing non-stationary signals like medical images [31].

The Discrete Wavelet Transform (DWT) decomposes an image into four primary components: approximation coefficients (LL) representing the low-frequency structural content, and detail coefficients capturing high-frequency information in horizontal (HL), vertical (LH), and diagonal (HH) directions [13]. This decomposition enables targeted processing of different image characteristics. For instance, in the WTA-Net framework, applying Spatial-Channel attention to these wavelet components resulted in significant quantitative improvements: information entropy (IE), average gradient (AG), and standard deviation (SD) increased by 34.76%, 30.5%, and 11.07% respectively for PET images, and 12.7%, 21.13%, and 4.54% for CT images [13].

Advanced variants like the Dual-Tree Complex Wavelet Transform (DTCWT) offer enhanced directional selectivity and approximate shift-invariance, providing more robust feature representation. When optimized using nature-inspired algorithms, this transform demonstrates superior performance in preserving anatomical boundaries and metabolic information during fusion tasks [31].

Application Notes: Technical Approaches and Performance

Wavelet-Based Architectures for Image Fusion and Synthesis

Recent advances in wavelet-based deep learning architectures have demonstrated remarkable capabilities in multi-modal medical image processing. The following table summarizes key technical approaches and their documented performance:

Table 1: Performance Metrics of Wavelet-Based Multi-modal Image Fusion Techniques

| Technique / Network | Modalities | Key Innovation | Reported Improvement Over Baseline | Primary Application |

|---|---|---|---|---|

| WTA-Net [13] | PET/CT, PET/MRI | Wavelet Attention + Cross-Modal Information Fusion Module | IE: 18.92%, AG: 14%, EN: 18.25% (Brain MRI-PET) [13] | Medical Image Fusion |

| DWFI-GAN [14] | Multi-contrast MRI | Dual-branch Wavelet Encoding + Deformable Feature Fusion | SSIM: ~3-5% improvement over non-wavelet baselines [14] | Medical Image Synthesis |

| ODTCWT with PF-HBSSO [31] | CT/MRI | Optimized DTCWT + Adaptive Weighted Average Fusion | Superior mutual information preservation [31] | Multimodal Image Fusion |

| WGSF-Net [32] | Various 2D modalities | Wavelet-Guided Spatial-Frequency Fusion | Dice: +1.5-13.9% in unseen domains [32] | Cross-Domain Segmentation |

The WTA-Net (Wavelet Transform with Spatial-Channel Attention Network) employs a dual-encoder, single-decoder architecture specifically designed to capture frequency domain features and enhance information flow between modalities. Its innovative Cross Modal Information Fusion Module (CMIFM) utilizes spatial attention to enhance local information within single modalities while employing Transformer mechanisms to enable global feature interaction between modalities [13].

For image synthesis tasks, the DWFI-GAN (Dual-branch Wavelet Encoding and Deformable Feature Interaction GAN) introduces a wavelet multi-scale downsampling (Wavelet-MS-Down) module that performs near-lossless feature dimensionality reduction through wavelet decomposition. The resulting low-frequency and high-frequency subbands are processed separately to preserve both global structural contours and fine-grained details, effectively mitigating the global information loss common in conventional CNN-based downsampling [14].

Quantitative Assessment Metrics

Rigorous evaluation of fusion and synthesis outcomes employs multiple quantitative metrics, as summarized below:

Table 2: Key Quantitative Metrics for Evaluating Fusion/Synthesis Quality

| Metric | Description | Interpretation | Ideal Value |
|---|---|---|---|
| Information Entropy (IE) [13] | Measures the amount of information contained in the fused image | Higher values indicate richer information content | Maximize |
| Structural Similarity Index (SSIM) [8] | Assesses perceptual similarity to reference images | Values closer to 1 indicate better structural preservation | 1.0 |
| Peak Signal-to-Noise Ratio (PSNR) [8] | Measures reconstruction quality in synthesized images | Higher values indicate better quality | Maximize |
| Average Gradient (AG) [13] | Evaluates image clarity and texture preservation | Higher values indicate sharper results | Maximize |
| Standard Deviation (SD) [13] | Reflects contrast and distribution of pixel intensities | Higher values suggest better contrast | Maximize |
| Spatial Frequency (SF) [13] | Measures overall activity level and clarity | Higher values indicate better quality | Maximize |
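
Several of the metrics in Table 2 can be sketched in a few lines of NumPy. The definitions below follow commonly used formulas and assume images scaled to [0, 1]. Normalisation conventions for AG and SF vary between papers, so the exact values may differ from those reported in [13] and [8]. SSIM is omitted because it needs windowed statistics that are better taken from an established library. The function names and the synthetic test images are illustrative assumptions.

```python
import numpy as np

def information_entropy(img, bins=256):
    """Shannon entropy (bits) of the intensity histogram; img assumed in [0, 1]."""
    hist, _ = np.histogram(img, bins=bins, range=(0.0, 1.0))
    p = hist[hist > 0] / hist.sum()
    return float(-(p * np.log2(p)).sum())

def average_gradient(img):
    """Mean magnitude of forward differences (one common AG definition)."""
    gx = img[:-1, 1:] - img[:-1, :-1]
    gy = img[1:, :-1] - img[:-1, :-1]
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))

def spatial_frequency(img):
    """sqrt(RF^2 + CF^2) from row-wise and column-wise differences."""
    rf2 = np.mean((img[:, 1:] - img[:, :-1]) ** 2)
    cf2 = np.mean((img[1:, :] - img[:-1, :]) ** 2)
    return float(np.sqrt(rf2 + cf2))

def psnr(reference, test, data_range=1.0):
    """Peak signal-to-noise ratio in dB; infinite for identical images."""
    mse = np.mean((reference - test) ** 2)
    return float("inf") if mse == 0 else float(10.0 * np.log10(data_range ** 2 / mse))

# Synthetic stand-ins for a reference image and a fused/synthesized result.
rng = np.random.default_rng(0)
reference = rng.random((64, 64))
fused = np.clip(reference + rng.normal(0.0, 0.05, reference.shape), 0.0, 1.0)
report = {
    "IE": information_entropy(fused),
    "AG": average_gradient(fused),
    "SD": float(fused.std()),
    "SF": spatial_frequency(fused),
    "PSNR": psnr(reference, fused),
}
print(report)
```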

Experimental Protocols

Protocol 1: PET-CT Fusion via WTA-Net

Objective: To generate a fused PET-CT image that preserves both metabolic information (from PET) and anatomical structure (from CT) for improved tumor localization.

Materials:

- Pre-registered PET and CT image pairs

- WTA-Net implementation (PyTorch/TensorFlow)

- Computing resources: GPU with ≥8GB VRAM

- Evaluation software: Python with OpenCV, scikit-image

Procedure:

Image Preprocessing:

- Normalize both PET and CT images to [0, 1] range

- Verify spatial registration using landmark-based or intensity-based methods

- Resize images to network input dimensions (typically 256×256 or 512×512)

Wavelet Decomposition:

- Apply Discrete Wavelet Transform (DWT) to both PET and CT inputs

- Use 'db4' (Daubechies 4) or 'sym4' (Symlet 4) wavelet basis functions

- Decompose into 4 sub-bands: LL (approximation), LH (horizontal detail), HL (vertical detail), HH (diagonal detail)

Wavelet Attention Processing:

- Process each sub-band through Spatial-Channel Attention modules

- Apply soft attention mechanisms to emphasize diagnostically relevant features

- For PET: Enhance metabolic activity regions in high-frequency components

- For CT: Preserve bone structures and anatomical boundaries

Cross-Modal Fusion:

- Feed attention-enhanced features to Cross Modal Information Fusion Module (CMIFM)

- Employ transformer-based mechanisms for global feature interaction

- Use spatial attention for local feature enhancement

Image Reconstruction:

- Reconstruct fused wavelet coefficients using Inverse DWT (IDWT)

- Apply post-processing for intensity consistency

- Output final fused image

Validation:

- Calculate IE, AG, SD, and SF metrics

- Compare with ground truth where available

- Conduct qualitative assessment by clinical experts
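
The data flow of Protocol 1 (normalize, decompose, fuse per sub-band, reconstruct) can be sketched with a classical fusion rule standing in for the learned attention and CMIFM stages. This is explicitly not WTA-Net: the Haar basis replaces 'db4'/'sym4' to avoid external dependencies, and averaging the LL band and selecting the larger-magnitude detail coefficient are simple hand-written rules rather than learned modules. The function names and the synthetic PET and CT images are hypothetical.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D orthonormal Haar DWT (even height and width)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2.0, (a + b - c - d) / 2.0,
            (a - b + c - d) / 2.0, (a - b - c + d) / 2.0)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

def normalize01(img):
    """Min-max scale to [0, 1]; a constant image maps to zeros."""
    img = np.asarray(img, dtype=np.float64)
    span = img.max() - img.min()
    return (img - img.min()) / span if span > 0 else np.zeros_like(img)

def fuse_pet_ct(pet, ct):
    """Classical wavelet fusion of two pre-registered, equally sized images."""
    p = haar_dwt2(normalize01(pet))
    c = haar_dwt2(normalize01(ct))
    fused_ll = 0.5 * (p[0] + c[0])  # ASSUMED rule: average the approximations
    fused_details = [np.where(np.abs(dp) >= np.abs(dc), dp, dc)  # keep stronger detail
                     for dp, dc in zip(p[1:], c[1:])]
    return np.clip(haar_idwt2(fused_ll, *fused_details), 0.0, 1.0)

# Synthetic stand-ins: a smooth "uptake" blob and a sharp-edged "anatomy" square.
yy, xx = np.mgrid[0:64, 0:64]
pet = np.exp(-((yy - 32) ** 2 + (xx - 32) ** 2) / (2 * 6.0 ** 2))
ct = ((yy > 16) & (yy < 48) & (xx > 16) & (xx < 48)).astype(float)
fused = fuse_pet_ct(pet, ct)
print(fused.shape, fused.min(), fused.max())
```

In a learned model the two hand-written rules would be replaced by the attention-weighted fusion described in the procedure. The surrounding steps (normalization, DWT, IDWT, intensity clipping) keep the same shape.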

Protocol 2: Multi-contrast MRI Synthesis via DWFI-GAN

Objective: To synthesize missing MRI sequences (e.g., T2 from T1, FLAIR from T1ce) using available modalities to complete multi-protocol datasets.

Materials:

- Paired multi-contrast MRI datasets (BraTS2020, IXI)

- DWFI-GAN implementation

- High-performance computing cluster

- Validation framework with segmentation metrics

Procedure:

Data Preparation:

- Select source and target modality pairs (e.g., T1→T2, T1ce→FLAIR)

- Apply skull-stripping and bias field correction

- Normalize intensities per sequence using z-score normalization

Dual-Branch Wavelet Encoding:

- Process each input modality through separate wavelet encoders

- Employ Wavelet-MS-Down modules for multi-scale decomposition

- Extract low-frequency components (global anatomy) and high-frequency components (local details)

Deformable Feature Interaction:

- Apply Deformable Cross-Attention Feature Fusion (DCFF) modules at each encoding stage

- Align spatial features using deformable convolution

- Fuse cross-modal features through attention mechanisms

Frequency-Space Enhancement:

- Process bottleneck features through Frequency-Space Enhanced (FSE) modules

- Integrate Fast Fourier Transform (FFT) with State Space Models (SSM)

- Enhance multi-scale representation through joint frequency-spatial modeling

Image Generation and Discrimination:

- Decode fused features to generate target modality

- Employ multi-scale discriminators for adversarial training

- Use combination of adversarial, perceptual, and cycle-consistency losses

Validation:

- Calculate PSNR, SSIM, and MSE against ground truth

- Perform segmentation-based evaluation using synthetic images

- Conduct radiologist reader studies for clinical assessment
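
The per-sequence z-score normalization and the MSE check from Protocol 2 can be sketched as follows. The sketch assumes that skull stripping has already set background voxels to exactly zero, so the foreground mask is taken as `volume > 0`. That masking rule, the function names, and the random stand-in volume are assumptions, not details taken from [14].

```python
import numpy as np

def zscore_normalize(volume, mask=None):
    """Z-score one MRI sequence using foreground voxels only."""
    volume = np.asarray(volume, dtype=np.float64)
    if mask is None:
        mask = volume > 0  # ASSUMPTION: background is zero after skull stripping
    out = np.zeros_like(volume)
    if not mask.any():
        return out  # nothing to normalize
    voxels = volume[mask]
    std = voxels.std()
    out[mask] = (voxels - voxels.mean()) / (std if std > 0 else 1.0)
    return out

def masked_mse(target, synthetic, mask):
    """Mean squared error restricted to the foreground mask."""
    return float(np.mean((target[mask] - synthetic[mask]) ** 2))

# Random stand-in for a skull-stripped T1 volume with a zero background band.
rng = np.random.default_rng(0)
t1 = rng.uniform(50.0, 150.0, size=(4, 16, 16))
t1[:, :4, :] = 0.0
brain = t1 > 0
t1_norm = zscore_normalize(t1)
print(t1_norm[brain].mean(), t1_norm[brain].std())
```

Restricting the statistics to the foreground keeps the large zero-valued background from dominating the mean and standard deviation, which matters when different sequences have different fields of view.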

The Scientist's Toolkit: Research Reagent Solutions

Table 3: Essential Research Components for Wavelet-Based Medical Image Fusion

| Component / Resource | Type | Function / Application | Exemplars / Alternatives |
|---|---|---|---|
| Wavelet Transforms | Mathematical Tool | Multi-scale decomposition for feature separation | Discrete Wavelet Transform (DWT), Dual-Tree CWT [31], Stationary Wavelet Transform (SWT) |
| Attention Mechanisms | Algorithmic Component | Feature emphasis and selection | Spatial-Channel Attention [13], Wavelet Attention (WA) [13], Deformable Attention [14] |
| Fusion Modules | Architectural Component | Cross-modal information integration | Cross Modal Information Fusion Module (CMIFM) [13], Deformable Cross-Attention Feature Fusion (DCFF) [14] |
| Generative Models | Framework | Image synthesis and data generation | Generative Adversarial Networks (GANs) [14] [33], Conditional GANs (cGANs), Variational Autoencoders (VAEs) [8] |
| Optimization Algorithms | Computational Tool | Parameter tuning and performance enhancement | Hybridized heuristic algorithms [31], Probability of Fitness-based Honey Badger Squirrel Search Optimization (PF-HBSSO) [31] |
| Evaluation Metrics | Analytical Tool | Quantitative performance assessment | Information Entropy, SSIM, PSNR [13] [8], Task-specific metrics (e.g., Dice for segmentation) |
| Medical Imaging Datasets | Data Resource | Model training and validation | BraTS2020 [14], IXI [14], LIDC-IDRI [8], institution-specific collections |

Implementation Considerations and Best Practices

Successful implementation of wavelet-based multi-modal fusion and synthesis requires careful attention to several practical aspects. Computational resources must be adequate, with GPU acceleration being essential for training deep wavelet networks. Memory requirements can be substantial, particularly for 3D volumes or high-resolution data. Data preprocessing is critical, including rigorous intensity normalization, accurate spatial registration between modalities, and consistent resolution matching. Wavelet selection should be guided by the specific application—Daubechies wavelets ('db4', 'db8') offer good regularity for medical images, while Symlets ('sym4') provide higher symmetry for reduced phase distortion [31].

For clinical translation, validation must extend beyond quantitative metrics to include task-specific evaluations. For diagnostic applications, reader studies with clinical experts are essential. For downstream tasks like segmentation or radiation planning, performance should be measured on the ultimate clinical task. Regulatory considerations are increasingly important, particularly when using synthetic data for algorithm development or validation. The European Health Data Space (EHDS) framework provides guidance on synthetic data governance, emphasizing utility, transparency, and accountability [33].

Future directions in this field include the development of more efficient wavelet architectures, improved cross-modal alignment techniques, and enhanced evaluation methodologies that better correlate with clinical utility. As these technologies mature, wavelet-based multi-modal image fusion and synthesis are poised to become indispensable tools in precision medicine and personalized healthcare.

The exponential growth of medical imaging data presents a critical challenge for modern healthcare systems, which must balance the competing demands of storage efficiency and diagnostic integrity [34]. Technologies such as Magnetic Resonance Imaging (MRI), Computed Tomography (CT), and Positron Emission Tomography (PET) generate high-resolution images that are essential for accurate diagnosis but place substantial burdens on storage infrastructure and transmission bandwidth, particularly in telemedicine applications [8]. This challenge is especially pronounced in resource-limited settings where network capacity may be constrained [35].

Unlike natural image compression, medical image compression operates under fundamentally different constraints, prioritizing the preservation of subtle diagnostic details that are crucial for clinical decision-making over maximal compression ratios [36]. Even minor quality degradation can potentially impact diagnostic accuracy, necessitating specialized approaches that maintain structural integrity while achieving meaningful data reduction [37].

Wavelet transform-based techniques have emerged as a powerful solution to this challenge, offering multi-resolution analysis capabilities that align well with the structural characteristics of medical images [8]. By decomposing images into frequency sub-bands while preserving spatial information, wavelet transforms enable more efficient representation of structural and textural information, facilitating compression that maintains diagnostic relevance [38]. This foundation has enabled advanced hybrid approaches that combine the theoretical strengths of wavelet analysis with adaptive deep learning architectures [8].

Technical Foundation: Wavelet-Based Compression

Core Principles of Wavelet Transform in Medical Imaging

The Discrete Wavelet Transform (DWT) serves as a mathematical cornerstone for advanced medical image compression by performing multi-resolution analysis that decomposes images into hierarchical frequency components [8]. This decomposition generates approximation coefficients (representing low-frequency image content) and detail coefficients (capturing high-frequency information like edges and textures) across multiple scales [38]. For medical images, this frequency separation proves particularly valuable as diagnostically significant features often correspond to specific frequency components that can be prioritized during compression.
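
To make the multi-scale decomposition concrete, the sketch below builds a three-level Haar pyramid in NumPy, keeps only the largest 10% of detail coefficients, and reconstructs the image. It illustrates coefficient sparsification only and is not a full codec: there is no quantization or entropy coding. The Haar basis, the `keep` fraction, the function names, and the synthetic test image are all assumptions made for illustration.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D orthonormal Haar DWT (even height and width)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2.0, (a + b - c - d) / 2.0,
            (a - b + c - d) / 2.0, (a - b - c + d) / 2.0)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

def haar_decompose(x, levels):
    """Return the coarsest approximation and detail triples, coarsest first."""
    ll, details = np.asarray(x, dtype=np.float64), []
    for _ in range(levels):
        ll, lh, hl, hh = haar_dwt2(ll)
        details.append((lh, hl, hh))
    return ll, details[::-1]

def haar_reconstruct(ll, details):
    """Invert haar_decompose by applying the inverse transform level by level."""
    for lh, hl, hh in details:
        ll = haar_idwt2(ll, lh, hl, hh)
    return ll

def sparsify(x, levels=3, keep=0.10):
    """Zero all but the largest `keep` fraction of detail coefficients."""
    ll, details = haar_decompose(x, levels)
    mags = np.concatenate([np.abs(d).ravel() for band in details for d in band])
    thresh = np.quantile(mags, 1.0 - keep)
    kept = [tuple(np.where(np.abs(d) >= thresh, d, 0.0) for d in band)
            for band in details]
    return haar_reconstruct(ll, kept)

# Synthetic image: smooth texture plus one sharp horizontal edge.
yy, xx = np.mgrid[0:32, 0:32]
image = np.sin(xx / 5.0) + (yy > 16)
approx = sparsify(image, levels=3, keep=0.10)
rel_error = np.linalg.norm(approx - image) / np.linalg.norm(image)
print(rel_error)
```

Because most of the energy of a smooth image sits in the approximation band and a few large detail coefficients near edges, discarding small details changes the reconstruction only slightly. This is the property that wavelet codecs exploit when they prioritize coefficients.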

The fundamental advantage of wavelet transforms over traditional Fourier-based methods lies in their ability to localize both frequency and spatial information simultaneously [39]. This dual localization enables precise preservation of anatomical boundaries and pathological features that are essential for diagnostic interpretation. Furthermore, wavelet representations are well matched to the characteristics of the human visual system, which makes them well suited to medical imaging applications where perceptual quality correlates strongly with diagnostic utility [35].

Advanced Hybrid Architectures

Recent research has focused on integrating wavelet transforms with deep learning architectures to create hybrid systems that leverage both mathematical foundations and adaptive learning capabilities [8]. These approaches typically employ DWT for initial image decomposition, followed by neural networks that process the resulting sub-bands with attention to their diagnostic significance.

A notable implementation combines DWT with a Cross-Attention Learning (CAL) module that dynamically weights feature importance based on clinical relevance [8]. This architecture allows the compression system to prioritize regions containing potential lesions or tissue abnormalities while applying more aggressive compression to diagnostically neutral areas. The attention mechanism essentially learns to identify and preserve the feature characteristics that radiologists and other clinical specialists depend on for accurate interpretation.
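
The attention step can be illustrated with generic scaled dot-product cross-attention, in which tokens from one set of wavelet sub-bands query tokens from another. This is a textbook formulation and not the CAL module of [8], whose internals are not described here. The token shapes, the random projection matrices (which would be learned in practice), and all names are assumptions.

```python
import numpy as np

def softmax(z, axis=-1):
    """Numerically stable softmax along the given axis."""
    z = z - z.max(axis=axis, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=axis, keepdims=True)

def cross_attention(queries, context, w_q, w_k, w_v):
    """Scaled dot-product attention where queries attend over context tokens."""
    q = queries @ w_q
    k = context @ w_k
    v = context @ w_v
    scores = q @ k.T / np.sqrt(q.shape[-1])  # similarity of each query to each context token
    weights = softmax(scores, axis=-1)       # each row sums to 1
    return weights @ v, weights

rng = np.random.default_rng(0)
d_model = 16
ll_tokens = rng.normal(size=(10, d_model))      # hypothetical patch embeddings from the LL band
detail_tokens = rng.normal(size=(30, d_model))  # hypothetical embeddings from detail bands
# Random stand-ins for projection matrices that training would learn.
w_q, w_k, w_v = (rng.normal(scale=d_model ** -0.5, size=(d_model, d_model))
                 for _ in range(3))
attended, weights = cross_attention(ll_tokens, detail_tokens, w_q, w_k, w_v)
print(attended.shape, weights.shape)
```

Each row of `weights` shows how strongly one low-frequency token draws on each detail token. A compression model could use such weights to decide which high-frequency coefficients to preserve.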

Table 1: Performance Comparison of Wavelet-Based Compression Techniques

| Compression Method | PSNR (dB) | SSIM | Compression Ratio | Modality |
|---|---|---|---|---|
| DWT + CAL + VAE [8] | 24.23 | 0.98 | 25:1 | CT, MRI |
| Region-Based DWT [35] | 24.23 | 0.96 | 30:1 | MRI |
| EE-CLAHE + SPIHT [37] | 22.15 | 0.94 | 28:1 | MRI, CT, X-ray |
| Traditional JPEG2000 [8] | 20.50 | 0.91 | 20:1 | Various |
| Standard JPEG [35] | 16.01 | 0.87 | 15:1 | Various |

Application Notes: Implementation Frameworks

Region of Interest (ROI) Based Compression

ROI-based compression represents a sophisticated strategy for balancing compression efficiency with diagnostic integrity by applying different compression techniques to diagnostically critical regions versus background areas [37]. Implementation typically begins with segmentation using adaptive expectation maximization clustering (AEMC) enhanced with fuzzy c-means (FCM) and Otsu thresholding to accurately delineate ROI boundaries [37].

Following segmentation, optimized compression pipelines are applied separately to ROI and non-ROI regions. For ROI areas, lossless or near-lossless techniques such as modified SPIHT with Huffman coding preserve all diagnostic information [37]. For non-ROI regions, more aggressive lossy compression like Embedded Zerotree Wavelet (EZW) or fractal compression significantly reduces data volume while maintaining overall image context [35]. This selective approach achieves superior compression ratios without compromising the diagnostic value of critical image regions.
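
A heavily simplified version of this ROI strategy can be sketched in NumPy. Otsu thresholding alone stands in for the AEMC/FCM segmentation, a single-level Haar transform stands in for SPIHT or EZW coding, and coarse uniform quantization of detail coefficients stands in for lossy coding of the background. All of these substitutions, together with the function names, the quantization step, and the synthetic image, are assumptions made to keep the example short and dependency-free.

```python
import numpy as np

def haar_dwt2(x):
    """Single-level 2D orthonormal Haar DWT (even height and width)."""
    a, b = x[0::2, 0::2], x[0::2, 1::2]
    c, d = x[1::2, 0::2], x[1::2, 1::2]
    return ((a + b + c + d) / 2.0, (a + b - c - d) / 2.0,
            (a - b + c - d) / 2.0, (a - b - c + d) / 2.0)

def haar_idwt2(ll, lh, hl, hh):
    """Exact inverse of haar_dwt2."""
    x = np.empty((2 * ll.shape[0], 2 * ll.shape[1]), dtype=np.float64)
    x[0::2, 0::2] = (ll + lh + hl + hh) / 2.0
    x[0::2, 1::2] = (ll + lh - hl - hh) / 2.0
    x[1::2, 0::2] = (ll - lh + hl - hh) / 2.0
    x[1::2, 1::2] = (ll - lh - hl + hh) / 2.0
    return x

def otsu_threshold(img, bins=256):
    """Threshold that maximizes between-class variance of the histogram."""
    hist, edges = np.histogram(img, bins=bins)
    centers = (edges[:-1] + edges[1:]) / 2.0
    w0 = np.cumsum(hist)        # pixel count in bins up to and including k
    w1 = hist.sum() - w0        # pixel count in the remaining bins
    cum = np.cumsum(hist * centers)
    m0 = cum / np.maximum(w0, 1)
    m1 = (cum[-1] - cum) / np.maximum(w1, 1)
    between = w0 * w1 * (m0 - m1) ** 2
    return edges[1:][np.argmax(between)]  # upper edge of the best split bin

def roi_compress(img, step_background=0.2):
    """Keep detail coefficients exactly inside the ROI, quantize them elsewhere."""
    roi = img > otsu_threshold(img)
    # A 2x2 block counts as ROI if any of its four pixels is in the ROI.
    roi_blocks = roi[0::2, 0::2] | roi[0::2, 1::2] | roi[1::2, 0::2] | roi[1::2, 1::2]
    ll, lh, hl, hh = haar_dwt2(img)

    def coarse(band):
        quantized = np.round(band / step_background) * step_background
        return np.where(roi_blocks, band, quantized)

    return haar_idwt2(ll, coarse(lh), coarse(hl), coarse(hh)), roi

# Synthetic image: dim noisy background with a bright 32x32 "lesion" region.
rng = np.random.default_rng(0)
image = rng.uniform(0.0, 0.2, size=(64, 64))
image[16:48, 16:48] = rng.uniform(0.6, 1.0, size=(32, 32))
recon, roi = roi_compress(image)
print(np.abs(recon - image)[roi].max(), np.abs(recon - image)[~roi].max())
```

Because single-level Haar coefficients affect only their own 2×2 block, ROI pixels are reconstructed exactly while background pixels absorb the quantization error. Deeper decompositions or longer filters would need the ROI mask to be propagated across levels with a margin for filter support.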

Cross-Attention Learning with Wavelet Decomposition

The integration of cross-attention mechanisms with wavelet decomposition represents a significant advancement in adaptive compression [8]. This approach employs a dual-branch architecture where wavelet transforms handle frequency decomposition while attention mechanisms identify spatially significant regions worthy of preservation.